FINANCIAL MANAGEMENT SYSTEMS

VA Should Improve Its Requirements Development, Cost Estimate, and Schedule

Report to Congressional Committees

United States Government Accountability Office

View GAO‑25‑107256. For more information, contact Paula M. Rascona, (202) 512-9816 or rasconap@gao.gov; Brian P. Bothwell, (202) 512-6888 or bothwellb@gao.gov; or Vijay A. D’Souza, (202) 512-7650 or dsouzav@gao.gov.

Highlights of GAO‑25‑107256, a report to congressional committees


Why GAO Did This Study

VA’s core financial system is more than 30 years old. Two prior attempts to replace the system, beginning in 1998, failed after years of development and hundreds of millions of dollars in costs. The current program has spent $1.9 billion since 2016 and completed six incremental deployments of a new system. The current target date for program completion is 2031.

GAO was asked to review the progress of the program. This report examines the extent to which (1) the program’s cost estimate and schedule followed best practices; (2) requirements development and management efforts followed Agile best practices; and (3) the independent program reviews that VA conducted met key elements of effective reviews and addressed identified issues.

GAO evaluated program and independent review documentation, compared it with relevant best practices, and interviewed cognizant VA officials.

What GAO Recommends

GAO is making four recommendations to VA, including that the program fully implement Agile best practices for requirements development and management and that it incorporate key elements of effective independent reviews in VA policy. VA concurred with the recommendations and described actions the department will take to address them.

What GAO Found

In 2016, the Department of Veterans Affairs (VA) established the Financial Management Business Transformation program (the program) to replace its aging financial and acquisition systems. In 2021, GAO made two recommendations to VA to help ensure that the program’s cost estimate and schedule were consistent with GAO-identified best practices; neither has been fully implemented. VA still does not fully or substantially meet all best practices for developing and managing the cost estimate and schedule, so both remain unreliable. Without a reliable cost estimate and schedule, VA management risks making decisions that are not fully informed and sound.

Additionally, the program substantially or fully met five Agile best practices for requirements development and management and partially met the remaining three (see table). Regarding the three partially met practices, (1) if the program does not ensure complete and feasible requirements, it could be working on requirements that are not high priority; (2) without traceability, the program cannot establish that the work is contributing to its goals and providing value; and (3) by not balancing customer needs, the program could be developing functionality that is not immediately necessary.

| Best practice | GAO assessment |
|---|---|
| Refine requirements | ● |
| Test and validate the system as it is being developed | ● |
| Ensure work is contributing to the completion of requirements | ◕ |
| Elicit and prioritize requirements | ◕ |
| Manage and further refine requirements | ◕ |
| Ensure requirements are complete, feasible, and verifiable | ◑ |
| Maintain traceability in requirements decomposition | ◑ |
| Balance customer and user needs and constraints | ◑ |

Legend: ●=Fully met; ◕=Substantially met; ◑=Partially met; ◔=Minimally met; ○=Not met

Source: GAO analysis of Department of Veterans Affairs (VA) Financial Management Business Transformation program documentation. | GAO-25-107256

GAO also found that VA generally incorporated the five key elements of effective independent verification and validation (independent review) for the program. Specifically, the program incorporated nine of the 10 key sub-elements and partially implemented one sub-element on determining which programs are subject to independent review. Although the program generally incorporated the elements of an effective independent review, VA does not have a department-wide IT acquisition policy that requires independent review or incorporates the key elements. As a result, VA risks not consistently implementing independent reviews for other VA IT programs.

Regarding identified issues, as of November 2024 the independent review team reported that the program had resolved 93 percent of the findings and recommendations that the team identified from 2021 to 2024. This is a significant improvement over the 27 percent of recommendations reported as implemented as of April 2020.

Abbreviations

| Abbreviation | Definition |
|---|---|
| CWS | Consolidated Wave Stack |
| FMBT | Financial Management Business Transformation program |
| iFAMS | Integrated Financial and Acquisition Management System |
| IV&V | independent verification and validation |
| O&M | operations and maintenance |
| OIT | Office of Information and Technology |
| RTM | requirements traceability matrix |
| SQAS | Systems Quality Assurance Service |
| VA | Department of Veterans Affairs |
| VBA | Veterans Benefits Administration |
| VHA | Veterans Health Administration |
| WBS | work breakdown structure |

This is a work of the U.S. government and is not subject to copyright protection in the United States. The published product may be reproduced and distributed in its entirety without further permission from GAO. However, because this work may contain copyrighted images or other material, permission from the copyright holder may be necessary if you wish to reproduce this material separately.

February 24, 2025

The Honorable Jerry Moran

Chairman

The Honorable Richard Blumenthal

Ranking Member

Committee on Veterans’ Affairs

United States Senate

The Honorable Mike Bost

Chairman

The Honorable Mark Takano

Ranking Member

Committee on Veterans’ Affairs

House of Representatives

The Department of Veterans Affairs (VA) is responsible for administering benefit programs for veterans, their families, and their survivors. These programs include those for pensions, education, disability compensation, home loans, life insurance, vocational rehabilitation, survivor support, medical care, and burial benefits.

VA’s core financial system is more than 30 years old and, according to VA, is extremely difficult to maintain, results in inefficient operations, requires complex manual work-arounds, and does not provide real-time integration between financial and acquisition information across the department. VA’s financial statement auditors have long reported a material weakness related to VA’s financial management systems.[1] Two previous attempts to replace its legacy system, beginning in 1998, failed after years of development and hundreds of millions of dollars in cost.[2] According to agency officials, VA has spent another $1.9 billion from the inception of its current program in fiscal year 2016 through fiscal year 2024.

In 2016, VA established the Financial Management Business Transformation program (FMBT). FMBT is intended to replace VA’s aging financial and acquisition systems with one integrated system to meet the department’s financial management goals and comply with legislation and directives.[3] VA’s November 2024 life cycle cost estimate for FMBT is $8.6 billion. This is an increase of approximately $942 million over the 2023 estimate of $7.6 billion.[4]

You asked us to review FMBT’s progress. In July 2024, we issued a report that addressed key project management practices on collaborating with stakeholders on changes to the program’s cost estimate and schedule, assessing user satisfaction and concerns, and managing program risks.[5] This report examines the extent to which (1) FMBT’s cost and schedule estimates followed best practices, (2) requirements development and management efforts for the program followed Agile best practices, and (3) the design and implementation of VA’s independent verification and validation (IV&V) efforts met key elements of effective IV&V and FMBT addressed identified issues.

To address our first objective, we reviewed FMBT’s October 2023 life cycle cost estimate and its April 2024 integrated master schedule, and evaluated supporting documentation.[6] We evaluated the characteristics of a reliable cost estimate and schedule that our previous report rated as less than substantially or fully met.[7] We also compared the results of the cost and schedule analyses to results from our past work to determine whether the department’s cost estimate and schedule have improved in meeting GAO best practices. We determined the overall assessment rating for each cost and schedule characteristic by assigning each best practice assessment a numerical score from one to five and averaging those scores.

To address objective two, we reviewed key FMBT documentation, evaluated this documentation against the GAO Agile Assessment Guide, and interviewed department officials and key stakeholders.[8]

Regarding objective three, we assessed VA policies and procedures against selected leading industry IV&V practices.[9] In addition, we evaluated FMBT IV&V documentation and interviewed VA and FMBT officials. To assess whether VA has resolved issues identified through IV&V, we reviewed documentation on the status of IV&V defects, findings, and recommendations. Appendix I provides additional details on our objectives, scope, and methodology.

We conducted this performance audit from January 2024 to February 2025 in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

Background

Deployment of the Integrated Financial and Acquisition Management System

FMBT is currently migrating VA’s financial management systems to a software-as-a-service solution configured for the department and hosted in the VA cloud, referred to as the Integrated Financial and Acquisition Management System (iFAMS).[10] VA awarded a systems integration contract in April 2018 to support FMBT through incremental deployments of iFAMS, referred to as waves. Each wave delivers federal financial management capabilities that support the functions and activities VA administrations and staff offices conduct to carry out the department’s mission.

As of October 2024, FMBT has completed six incremental deployments of iFAMS.[11] FMBT is currently working on its next planned deployments, which are due to be implemented at Veterans Benefits Administration (VBA) Loan Guaranty in May 2025 and Veterans Health Administration (VHA) Station 134 in June 2025.[12] FMBT also plans to begin work on the VBA Insurance wave, which is estimated to be implemented in May 2027.

As of September 2024, FMBT estimated program completion in 2031. However, VA program leadership has not yet determined final implementation dates for multiple deployments at VHA that will affect this timeline. FMBT is also anticipating budget cuts that may delay planned work. Specifically, the program budget was cut by over $100 million in VA’s budget request for fiscal year 2025, which according to program officials will delay the start of the VBA Insurance wave.

Prior GAO Recommendations on the FMBT Cost Estimate and Schedule

We previously reviewed the quality of FMBT’s 2019 cost estimate and reported our results in March 2021.[13] We identified 18 best practices associated with a reliable cost estimate, which are summarized into four characteristics: (1) comprehensive, (2) well-documented, (3) accurate, and (4) credible. According to GAO’s Cost Estimating and Assessment Guide, a cost estimate is considered reliable if the assessment ratings for each of the four characteristics are substantially or fully met.[14] If any of the characteristics are scored as not met, minimally met, or partially met, the cost estimate cannot be considered reliable.

In March 2021, we reported that the FMBT 2019 cost estimate was unreliable since it did not fully or substantially meet all characteristics associated with a reliable estimate.[15] Specifically, the cost estimate substantially met one of the four characteristics of a reliable estimate (well-documented), partially met two characteristics (comprehensive and accurate), and minimally met one characteristic (credible). We recommended that the FMBT Deputy Assistant Secretary take steps to help ensure that the program develops a reliable cost estimate using best practices described in GAO’s Cost Estimating and Assessment Guide. Specifically, VA needed to address those cost characteristics that we identified as partially or minimally met. VA concurred with this recommendation and has taken some actions to address it, but it is not yet fully implemented.

We previously reviewed the quality of FMBT’s 2020 schedule and reported our results in March 2021.[16] We identified 10 best practices associated with a reliable schedule, which are summarized into four characteristics: (1) comprehensive, (2) well-constructed, (3) credible, and (4) controlled. According to GAO’s Schedule Assessment Guide, a schedule is considered reliable if the assessment ratings for each of the four characteristics are substantially or fully met.[17] If any of the characteristics are scored as not met, minimally met, or partially met, the schedule cannot be considered reliable.

In March 2021, we reported that the 2020 integrated master schedule was unreliable as it did not fully or substantially meet all characteristics associated with a reliable schedule.[18] Specifically, the schedule substantially met one of the four characteristics of a reliable schedule (controlled) and partially met the other three characteristics. We recommended that the FMBT Deputy Assistant Secretary take steps to help ensure that the program develops a reliable schedule using best practices described in GAO’s Schedule Assessment Guide by addressing those schedule characteristics that were partially or minimally met. VA concurred with this recommendation and has taken some actions to address it.

Use of Agile Development Principles

In March 2023, VA released the most recent version of the FMBT Scaled Agile Framework.[19] Agile is an umbrella term for a variety of practices in software development, including requirements development and management. Generally, Agile emphasizes early and continuous software delivery, customer feedback cycles, regular delivery frequency, the use of collaborative teams, and measuring progress in terms of working software.[20] A Scaled Agile Framework provides guidance for roles and iterative events at different levels in an organization, tailored to each unique context. A glossary of key terms associated with Agile development can be found in appendix II.

Use of IV&V in IT System Development and Acquisition

IV&V (also referred to as independent review in this report) is a process where organizations can reduce the risks inherent in IT system development and acquisition efforts by having a knowledgeable party independent of the developer determine that the system or product meets the users’ needs and fulfills its intended purpose. The IV&V process starts by determining the program’s risks early in the life cycle and then identifying those that could be mitigated or lessened by performing additional reviews and quality assessments.

We have long recognized the use of IV&V as a leading practice for federal agencies in acquiring a product or IT system that is complex, large scale, or high risk.[21] Organizations can fully realize benefits of independent reviews by adopting certain key elements of IV&V. Based on industry standards and leading practices from across the federal government as well as past GAO reports, we identified five key elements and 10 sub-elements for effective IV&V for large and complex IT system development and acquisition programs.[22] These five key elements are (1) establish decision criteria and process, (2) establish independence, (3) define program scope, (4) define program resources, and (5) establish management and oversight. See appendix I for more information on how we identified the five key elements of effective IV&V and the related sub-elements.

The IV&V efforts for FMBT are planned, managed, and executed by VA’s Office of Information and Technology (OIT), Compliance, Risk, and Remediation, referred to as the IV&V team. The team is composed of government staff and contracted resources.[23] According to VA officials, recent FMBT IV&V efforts relate to implementation of the Consolidated Wave Stack, the only fully deployed wave to undergo testing since the start of the current IV&V contract. The team’s efforts are guided by overarching planning documents encompassing all FMBT waves. The team is currently conducting IV&V activities for FMBT at VBA and VHA.

The IV&V team’s activities have been reduced from those outlined in the original plan. The FMBT IV&V Execution Plan, which the team developed in response to funding cuts that it reported, outlines a reduced scope of IV&V activities and lists specific activities cut. For example, the team is no longer performing quality assurance assessments, common assessments, system and software assessments, hardware and infrastructure assessments, and data migration activities.[24] We previously reported that, according to VA, FMBT mitigated a 2019 funding shortfall by reducing funding for the IV&V contractor and other contract support and pausing the VHA implementation waves.[25] FMBT also mitigated a 2020 funding shortfall by eliminating funding for IV&V contract support, among other measures.[26] Additionally, FMBT leadership rejected, due to insufficient funding, the team’s November 2023 recommendation to conduct additional IV&V tasks on the program. The team also stated that without additional IV&V funding from FMBT, the program would face significant reductions in test coverage.

VA Has Addressed Selected Best Practices, but the FMBT Cost Estimate and Schedule Remain Unreliable

VA Has Taken Steps to Improve FMBT Cost Estimate Accuracy, but It Remains Unreliable

We determined that FMBT’s October 2023 cost estimate was not reliable because it did not fully or substantially meet all four characteristics associated with a reliable cost estimate. Specifically, we found that the estimate improved to substantially met in the accurate characteristic, but it remains partially met in the comprehensive characteristic and minimally met in the credible characteristic. The three characteristics of a reliable cost estimate we evaluated, their associated best practices, and the results of our assessment are summarized in table 1. See appendix III for more detail.

| Overall GAO characteristic assessment | Best practice | 2021 GAO best practice assessment | 2024 GAO best practice assessment |
|---|---|---|---|
| Comprehensive (original 2021 assessment: Partially met; updated 2024 assessment: Partially met) | Includes all life cycle costs | ◑ | ◕ |
| | Is based on a technical baseline description that completely defines the program, reflects the current schedule, and is technically reasonable | ◑ | ◑ |
| | Is based on a work breakdown structure (WBS) that is product-oriented, traceable to the statement of work, and at an appropriate level of detail to ensure that cost elements are neither omitted nor double-counted | ◑ | ◑ |
| | Documents all cost-influencing ground rules and assumptions | ◑ | ◑ |
| Accurate (original 2021 assessment: Partially met; updated 2024 assessment: Substantially met) | Is based on a model developed by estimating each WBS element using the best methodology from the data collected | ◑ | ◑ |
| | Is adjusted properly for inflation | ◔ | ◕ |
| | Contains few, if any, minor mistakes | ◑ | ◕ |
| | Is regularly updated to ensure it reflects program changes and actual costs | ◕ | ◕ |
| | Documents, explains, and reviews variances between planned and actual costs | ◑ | ◑ |
| | Is based on a historical record of cost estimating and actual experiences from other comparable programs | ◑ | ◑ |
| Credible (original 2021 assessment: Minimally met; updated 2024 assessment: Minimally met) | Includes a sensitivity analysis that identifies a range of possible costs based on varying major assumptions, parameters, and data inputs | ◔ | ◔ |
| | Includes a risk and uncertainty analysis that quantifies the imperfectly understood risks and identifies the effects of changing key cost driver assumptions and factors | ◔ | ◔ |
| | Employs cross-checks (or alternate methodologies) on major cost elements to validate results | ○ | ○ |
| | Is compared to an independent cost estimate that is conducted by a group outside the acquiring organization to determine whether other estimating methods produce similar results | ○ | ◑ |

Legend:

FMBT = Financial Management Business Transformation program

VA = Department of Veterans Affairs

● = Met: VA provided evidence that satisfies the entire criterion

◕ = Substantially met: VA provided evidence that satisfies a large portion of the criterion

◑ = Partially met: VA provided evidence that satisfies about half of the criterion

◔ = Minimally met: VA provided evidence that satisfies a small portion of the criterion

○ = Not met: VA provided no evidence that satisfies any part of the criterion

Source: GAO analysis of VA FMBT documentation. | GAO‑25‑107256

Notes: To determine the overall assessment for each characteristic, we assigned each best practice assessment a score based on a five-point scale: not met = 1, minimally met = 2, partially met = 3, substantially met = 4, and met = 5. We calculated the average of the individual best practice assessment scores to determine the overall assessment rating for each characteristic as follows: not met = 1.0 to 1.4, minimally met = 1.5 to 2.4, partially met = 2.5 to 3.4, substantially met = 3.5 to 4.4, and met = 4.5 to 5.0.

Italicized text denotes best practices that were not reevaluated as part of this review. See app. III for more details. This table does not represent all characteristics and best practices associated with a reliable cost estimate, as we did not reevaluate the well-documented characteristic and its best practices since we rated them as fully or substantially met in our 2021 report (GAO, Veterans Affairs: Ongoing Financial Management System Modernization Program Would Benefit from Improved Cost and Schedule Estimating, GAO‑21‑227 (Washington, D.C.: Mar. 24, 2021)). See app. III for more details on these best practices.
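The averaging rule in the notes above can be expressed as a short calculation. The following is a minimal Python sketch, not GAO's own tool: it maps each assessment symbol to its 1-to-5 score, averages the scores for a characteristic, and converts the average back to a rating band, using the 2024 best practice ratings transcribed from table 1. The function names are hypothetical, and because the published ranges leave small gaps between bands (for example, between 3.4 and 3.5), the sketch assumes each band runs up to the next band's lower bound.

```python
# Five-point scale from the table notes: not met = 1 ... met = 5.
SCORES = {"○": 1, "◔": 2, "◑": 3, "◕": 4, "●": 5}

# Lower bound of each overall rating band, checked from highest to lowest.
# Assumption: gaps between published ranges (e.g., 3.4 to 3.5) are closed
# by treating each band as running up to the next band's lower bound.
BANDS = [
    (4.5, "Met"),
    (3.5, "Substantially met"),
    (2.5, "Partially met"),
    (1.5, "Minimally met"),
    (1.0, "Not met"),
]


def characteristic_rating(symbols):
    """Average the best practice scores and map the average to a rating band."""
    scores = [SCORES[s] for s in symbols]
    avg = sum(scores) / len(scores)
    for lower, label in BANDS:
        if avg >= lower:
            return avg, label
    raise ValueError("average is below the scale minimum of 1.0")


def is_reliable(labels):
    """Reliable only if every characteristic is substantially met or met."""
    return all(label in ("Substantially met", "Met") for label in labels)


# 2024 best practice ratings transcribed from table 1, in table order.
cost_2024 = {
    "Comprehensive": ["◕", "◑", "◑", "◑"],
    "Accurate": ["◑", "◕", "◕", "◕", "◑", "◑"],
    "Credible": ["◔", "◔", "○", "◑"],
}

results = {name: characteristic_rating(marks) for name, marks in cost_2024.items()}
for name, (avg, label) in results.items():
    print(f"{name}: {avg:.2f} -> {label}")
print("Reliable:", is_reliable(label for _, label in results.values()))
```

Run as written, this prints averages of 3.25 (partially met), 3.50 (substantially met), and 2.00 (minimally met), matching the overall ratings reported in table 1, and reports the estimate as not reliable. The 2021 credible ratings (◔, ◔, ○, ○) average exactly 1.5, the lower edge of the minimally met band.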

Remaining characteristics and their associated best practices that are not fully or substantially met continue to affect the reliability of the program’s cost estimate. For example, VA still does not employ cross-checks of major cost elements.[27] Unless an estimate employs cross-checks, it will have less credibility because stakeholders will have no assurance that alternative estimating methodologies produce similar results. Additionally, while the cost estimate documentation and model contain ground rules and assumptions, this best practice is only partially met, in part because the estimate does not identify risks associated with assumptions or constraints.

Further, while the program obtained an independent cost estimate, it was not reconciled with the program office cost estimate. The cost estimate would be a more useful management tool if VA addressed these remaining weaknesses. We continue to believe that the recommendation from our prior report is valid and should be fully implemented. This would help ensure that VA management has the information necessary for fully informed and sound decision-making and to minimize the risk of cost overruns.

VA Has Taken Steps to Improve the FMBT Schedule, but It Remains Unreliable

We determined that the April 2024 schedule was not reliable because it did not fully or substantially meet all four characteristics associated with a reliable schedule. Specifically, we found that the schedule improved in the well-constructed characteristic to substantially met but remains partially met in the comprehensive and credible characteristics. The three characteristics of a reliable schedule that we evaluated, their associated best practices, and the results of our assessment are summarized in table 2. See appendix III for more detail.

| Overall GAO characteristic assessment | Best practice | 2021 GAO best practice assessment | 2024 GAO best practice assessment |
|---|---|---|---|
| Comprehensive (original assessment: Partially met; updated assessment: Partially met) | Capturing all activities | ◑ | ◕ |
| | Assigning resources to all activities | ◔ | ◔ |
| | Establishing the durations of all activities | ◕ | ◕ |
| Well-constructed (original assessment: Partially met; updated assessment: Substantially met) | Sequencing all activities | ◑ | ◕ |
| | Confirming that the critical path is valid | ◑ | ◑ |
| | Ensuring reasonable total float | ◑ | ◕ |
| Credible (original assessment: Partially met; updated assessment: Partially met) | Verifying that the schedule can be traced horizontally and verticallyᵃ | ◑ | ◑ |
| | Conducting a schedule risk analysis | ◔ | ◑ |

Legend:

FMBT = Financial Management Business Transformation program

VA = Department of Veterans Affairs

● = Met: VA provided evidence that satisfies the entire criterion

◕ = Substantially met: VA provided evidence that satisfies a large portion of the criterion

◑ = Partially met: VA provided evidence that satisfies about half of the criterion

◔ = Minimally met: VA provided evidence that satisfies a small portion of the criterion

○ = Not met: VA provided no evidence that satisfies any part of the criterion

Source: GAO analysis of VA FMBT documentation. | GAO‑25‑107256

Notes: To determine the overall assessment for each characteristic, we assigned each best practice assessment a score based on a five-point scale: not met = 1, minimally met = 2, partially met = 3, substantially met = 4, and met = 5. We calculated the average of the individual best practice assessment scores to determine the overall assessment rating for each characteristic as follows: not met = 1.0 to 1.4, minimally met = 1.5 to 2.4, partially met = 2.5 to 3.4, substantially met = 3.5 to 4.4, and met = 4.5 to 5.0.

Italicized text denotes best practices that were not reevaluated as part of this review. See app. III for more details. This table does not represent all characteristics and best practices associated with a reliable schedule, as we did not reevaluate the controlled characteristic and its best practices since they were rated as fully or substantially met in our prior report (GAO, Veterans Affairs: Ongoing Financial Management System Modernization Program Would Benefit from Improved Cost and Schedule Estimating, GAO‑21‑227 (Washington, D.C.: Mar. 24, 2021)). See app. III for more details on these best practices.

ᵃHorizontal traceability ensures that the schedule is planned in a logical sequence, accounts for interdependence of activities, and provides a way to evaluate current status. Vertical traceability ensures that data are consistent between different levels of the schedule.

FMBT’s April 2024 schedule continued to have issues with vertical traceability, including inconsistent dates between lower-level schedules and upper-level schedule milestones. Additionally, the program’s schedule risk analysis was limited in scope and did not cover the entire effort, which affects the reliability of the program’s schedule. Finally, while the program performs some resource tracking as part of its Agile processes, it had not assigned resources to activities within the integrated master schedule, limiting insight into current or projected resource allocation and increasing the risk of the program falling behind schedule. To address the remaining weaknesses, we continue to believe that the recommendation from our prior report is valid and should be fully implemented. This would help ensure that VA management has the information necessary for fully informed and sound decision-making and would help minimize the risk of additional schedule delays.
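The vertical traceability and resource findings above can be illustrated with a small consistency check. The sketch below is hypothetical: the `Activity` fields, milestone names, and dates are invented for illustration and are not drawn from the FMBT schedule. It assumes that a lower-level milestone representing an upper-level milestone should carry the same date, and it treats every lower-level entry as needing at least one assigned resource.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Dict, List, Optional


@dataclass
class Activity:
    """Hypothetical lower-level schedule entry."""
    name: str
    finish: date
    mirrors: Optional[str] = None  # upper-level milestone this entry represents, if any
    resources: List[str] = field(default_factory=list)


def vertical_trace_issues(upper: Dict[str, date], activities: List[Activity]) -> List[str]:
    """Flag lower-level milestones whose dates disagree with the upper-level schedule."""
    issues = []
    for act in activities:
        if act.mirrors is None:
            continue  # not linked to an upper-level milestone
        upper_date = upper.get(act.mirrors)
        if upper_date is None:
            issues.append(f"{act.name}: no matching upper-level milestone")
        elif upper_date != act.finish:
            issues.append(
                f"{act.name}: {act.finish.isoformat()} vs upper-level {upper_date.isoformat()}"
            )
    return issues


def unresourced(activities: List[Activity]) -> List[str]:
    """Return the names of entries that have no resources assigned."""
    return [a.name for a in activities if not a.resources]


# Invented example data for illustration only.
upper_level = {"Wave A go-live": date(2025, 5, 1)}
lower_level = [
    Activity("Wave A go-live", date(2025, 6, 2), mirrors="Wave A go-live",
             resources=["Deployment team"]),
    Activity("Configure module", date(2025, 3, 14)),
]

print(vertical_trace_issues(upper_level, lower_level))
print(unresourced(lower_level))
```

With this sample data, the first check reports the one-month date mismatch for the go-live milestone and the second reports the configuration activity as having no assigned resources. A real integrated master schedule would usually exempt zero-duration milestones from the resource check; this sketch does not model durations.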

FMBT Has Generally Followed Best Practices for Requirements Development and Management, but Improvements Are Needed

FMBT has substantially or fully met five of the eight Agile best practices for requirements development and management.[28] We determined that the best practices on ensuring the completeness, feasibility, and verifiability of requirements; maintaining requirements’ traceability; and balancing customer needs and constraints were partially met.[29] For Agile requirements development and management to be considered successful, all assessment ratings for each best practice must be substantially or fully met. Table 3 shows our assessment of the eight Agile best practices for requirements development and management (in order of “fully met” to “partially met” practices). We discuss the three best practices that are partially met in more detail in the sections below the table; for more information on all eight best practices, see appendix IV.

Table 3: Summary Assessment of VA FMBT Requirements Development and Management Efforts Against Agile Best Practices

| Best practice | GAO assessment |
|---|---|
| Refine requirements | ● |
| Test and validate the system as it is being developed | ● |
| Ensure work is contributing to the completion of requirements | ◕ |
| Elicit and prioritize requirements | ◕ |
| Manage and further refine requirements | ◕ |
| Ensure requirements are complete, feasible, and verifiable | ◑ |
| Maintain traceability in requirements decomposition | ◑ |
| Balance customer and user needs and constraints | ◑ |

Legend:

FMBT = Financial Management Business Transformation program

VA = Department of Veterans Affairs

● = Fully met: VA provided evidence that satisfies the entire criterion.

◕ = Substantially met: VA provided evidence that satisfies a large portion of the criterion.

◑ = Partially met: VA provided evidence that satisfies about half of the criterion.

◔ = Minimally met: VA provided evidence that satisfies a small portion of the criterion.

○ = Not met: VA provided no evidence that satisfies any part of the criterion.

Source: GAO analysis of VA FMBT documentation. | GAO‑25‑107256

FMBT Does Not Consistently Ensure Requirements Are Complete, Feasible, and Verifiable

FMBT has documentation and policies that reflect best practices; however, the program is inconsistently applying its requirements development policies during program implementation. According to GAO’s Agile Assessment Guide, acceptance criteria, a definition of done, and a definition of ready are to be established for user stories[30] describing requirements prior to their development.[31] Additionally, in the FMBT Scaled Agile Framework policy document, standard definitions of done and ready are included, and the policy requires that a program apply these definitions in its user stories, at a minimum, during requirements development.[32] However, our analysis found that FMBT lists of user stories and requirements backlogs do not consistently include acceptance criteria and definitions of done and ready.

Program officials stated that the required standard definitions are consistently used regardless of whether they are included in program documentation. However, the program did not provide supporting documentation for this practice. Without clear acceptance criteria and definitions of done and ready, FMBT may not be working on the highest-priority requirements. Furthermore, well-defined acceptance criteria can help teams estimate a user story’s complexity.[33] If users and customers are not involved in the review and acceptance process for software functionality, there is an increased risk of software not meeting its intended purpose.
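A backlog check of the kind described above can be sketched in a few lines. This is a hypothetical Python example, not FMBT's tooling: the `UserStory` fields, story IDs, and story text are assumptions made for illustration. It only tests whether each story records acceptance criteria and references definitions of ready and done; it does not judge whether those definitions are adequate.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class UserStory:
    """Hypothetical backlog item; field names are illustrative only."""
    story_id: str
    description: str
    acceptance_criteria: List[str] = field(default_factory=list)
    definition_of_ready: Optional[str] = None  # e.g., a reference to the standard definition applied
    definition_of_done: Optional[str] = None


def missing_elements(story: UserStory) -> List[str]:
    """List which required elements a story lacks before development starts."""
    missing = []
    if not story.acceptance_criteria:
        missing.append("acceptance criteria")
    if not story.definition_of_ready:
        missing.append("definition of ready")
    if not story.definition_of_done:
        missing.append("definition of done")
    return missing


def audit_backlog(stories: List[UserStory]) -> Dict[str, List[str]]:
    """Map each incomplete story's ID to its missing elements; complete stories are omitted."""
    gaps = {}
    for story in stories:
        missing = missing_elements(story)
        if missing:
            gaps[story.story_id] = missing
    return gaps


# Invented backlog entries for illustration only.
backlog = [
    UserStory("US-1", "Record an obligation",
              acceptance_criteria=["Obligation posts to the general ledger"],
              definition_of_ready="Standard DoR", definition_of_done="Standard DoD"),
    UserStory("US-2", "Approve a purchase request", definition_of_done="Standard DoD"),
]
print(audit_backlog(backlog))
```

Here only `US-2` is reported, because it lacks acceptance criteria and a definition of ready. Recording these elements in the backlog itself, rather than relying on them being applied informally, is what would let a reviewer confirm that the standard definitions are actually used.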

FMBT Lacks a Road Map for Requirements Traceability

FMBT has not developed an Agile road map[34] or comparable document to facilitate the traceability of requirements across the program as it is implemented.[35] Specifically, our analysis found that, in the absence of an Agile road map, the traceability of requirements across user story lists, backlogs, and requirements traceability matrices is inconsistent. While maintaining requirements traceability matrices is in line with Agile best practices, the lack of an Agile road map prevents the program from sharing, across different levels of the organization, what work is planned for the current and upcoming releases.

GAO’s Agile Assessment Guide calls for requirements to be traceable from the source requirements (e.g., epics, features)[36] to lower-level requirements (e.g., user stories) and vice versa, and the program is to use Agile artifacts, such as a road map, to verify requirements traceability.[37] A properly developed Agile road map associates features with releases, allowing a program to trace user stories back to high-level requirements.
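Bidirectional traceability of this kind can be sketched as two lookups over road map data. The Python example below assumes a hypothetical road map in which each feature records its parent epic and planned release, and each user story records its feature. It traces a story up to its epic and release, traces an epic down to its stories, and lists stories that cannot be traced at all. All IDs and release names are illustrative placeholders, not FMBT data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    feature_id: str
    epic_id: str  # the higher-level (source) requirement this feature refines
    release: str  # the road map release in which the feature is planned


# Hypothetical road map data; IDs and release names are placeholders.
FEATURES = {
    "F-10": Feature("F-10", epic_id="E-1", release="Release 1"),
    "F-11": Feature("F-11", epic_id="E-1", release="Release 2"),
}
STORY_TO_FEATURE = {"US-100": "F-10", "US-101": "F-10", "US-102": "F-11"}


def trace_up(story_id: str) -> tuple[str, str, str]:
    """Trace a user story to its feature, epic, and planned release."""
    feature = FEATURES[STORY_TO_FEATURE[story_id]]
    return feature.feature_id, feature.epic_id, feature.release


def trace_down(epic_id: str) -> list[str]:
    """List every user story that implements the given epic."""
    return sorted(
        story
        for story, feature_id in STORY_TO_FEATURE.items()
        if FEATURES[feature_id].epic_id == epic_id
    )


def untraced_stories(story_ids: list[str]) -> list[str]:
    """Return stories that do not map to any feature on the road map."""
    return [s for s in story_ids if s not in STORY_TO_FEATURE]


print(trace_up("US-102"))  # → ('F-11', 'E-1', 'Release 2')
print(trace_down("E-1"))  # → ['US-100', 'US-101', 'US-102']
```

Because features carry both an epic and a release, the same data answers "what is planned for the next release" and "which high-level requirement does this story serve."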

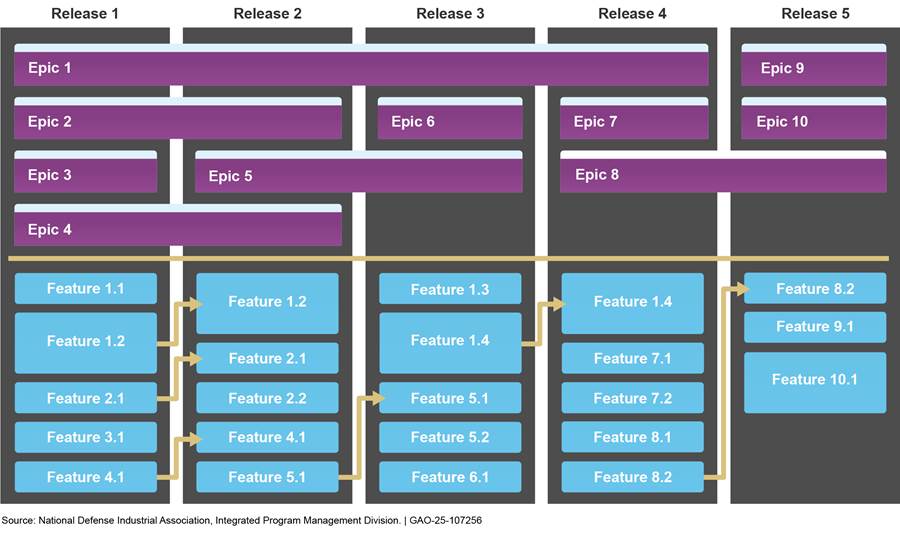

FMBT officials stated that budgetary constraints contributed to the program’s lack of an Agile road map. The program has an implementation timeline, which it refers to as a road map. This document only depicts the schedule of implementation wave deployments throughout the program’s life cycle. The timeline does not include epics and features that would show traceability between requirements. An example of an Agile road map is shown in figure 1.

Specifically, the program’s implementation timeline does not indicate when releases or other Agile planning events occur, nor does it provide any insight into when features or epics for the different implementation waves are expected to be developed. Using an agreed-upon artifact, such as a road map that tracks feature prioritization, to align the user stories delivered in an iteration with the goals of the program and organization is one way to demonstrate that the highest-value features are being delivered. Without tools such as a road map to disseminate information frequently, decision-makers may not have access to performance information and may not be able to act in a timely manner to make improvements or take corrective actions. For example, without the ability to trace a user story back to high-level requirements, a program cannot demonstrate that it is meeting the commitments made to various oversight bodies or that its work is contributing to program goals.

FMBT Requirements Development Process Does Not Adequately Balance Customer Needs

FMBT’s requirements development process does not prioritize requirements based on the value their development would provide to a customer, or “value of work.”[38] Specifically, our analysis found that while FMBT began assigning value of work with its most recent implementation wave, there is no evidence to suggest that the program uses the value of work to prioritize requirements.

According to GAO’s Agile Assessment Guide, one method of measuring the value of work is to consider how frequently a feature will be used, so that features of immediate value are developed first.[39] The guide further states that having a consistent process in place to measure the value of work ensures that requirements are developed based on relative value. Without clear prioritization, for example, developers could work on features that are not “must haves” for the customer, resulting in the delivery of features that may not be used and contributing to schedule and cost overruns.
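As an illustration of frequency-based valuation, the following Python sketch ranks hypothetical backlog features by expected use and expresses each feature's value relative to the most-used one. Using frequency of use as the sole value measure, the `expected_uses_per_month` field, and the sample figures are all assumptions made for illustration; a program could weigh other factors as well.

```python
from dataclasses import dataclass


@dataclass
class BacklogFeature:
    name: str
    # Hypothetical estimate of how often users will exercise the feature.
    expected_uses_per_month: int


def prioritize(features: list[BacklogFeature]) -> list[tuple[str, float]]:
    """Order features from highest to lowest relative value.

    Frequency of use stands in for value, and each feature's value is
    expressed relative to the most frequently used feature (which scores 1.0).
    """
    if not features:
        return []
    # Guard against division by zero when no feature has any expected use.
    top = max(f.expected_uses_per_month for f in features) or 1
    ranked = sorted(features, key=lambda f: f.expected_uses_per_month, reverse=True)
    return [(f.name, f.expected_uses_per_month / top) for f in ranked]


# Hypothetical backlog with placeholder names and usage estimates.
backlog = [
    BacklogFeature("Feature A", 40),
    BacklogFeature("Feature B", 1_000),
    BacklogFeature("Feature C", 500),
]
for name, value in prioritize(backlog):
    print(f"{name}: {value:.2f}")
```

Expressing value relative to the top feature keeps the ranking comparable across waves even when absolute usage estimates differ.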

According to FMBT officials, the program is currently working toward a consistent process for measuring the value of work so that features are developed based on relative value. In the meantime, because the program is not yet using an Agile road map, it is unable to track features to ensure that it is prioritizing the highest-value requirements. If FMBT’s requirements development process does not account for the relative value of work, it may develop functionality that is not immediately necessary to meet customer needs. Additionally, if the program does not complete the highest-value requirements first, it risks leaving customers without necessary functionality.

VA IT Acquisition Policy Does Not Include Key Elements of Effective IV&V, but FMBT Has Mostly Addressed IV&V Findings

VA performed independent reviews on FMBT and generally incorporated the key elements of effective IV&V in these efforts.[40] Specifically, we determined that the IV&V team’s use of independent reviews for FMBT met nine key sub-elements and partially met one. However, VA’s IT acquisition policy did not require independent reviews or incorporate any of the 10 key sub-elements of effective IV&V from leading industry practices, as identified by GAO. Additionally, VA substantially addressed most IV&V-identified issues for FMBT.

VA’s Use of IV&V for FMBT Generally Incorporated Key Elements of Effective IV&V, but IT Acquisition Policy Did Not

Along with professional technical organizations, we have long reported on the benefits and use of effective IV&V as a leading practice for high-risk IT programs. Based on relevant leading industry practices referenced in a prior GAO report, effective IV&V has five key elements comprising 10 sub-elements.[41] The five key elements are (1) establish decision criteria and process, (2) establish independence, (3) define program scope, (4) define program resources, and (5) establish management and oversight.

The IV&V team performed reviews on FMBT and generally incorporated the key elements in these efforts. Specifically, we determined that the team’s use of IV&V for FMBT met nine key sub-elements and partially met one (see table 4). We discuss the partially met sub-element in more detail following the table; more information on all 10 key sub-elements is included in appendix V.

| Key elements and sub-elements of effective IV&V | GAO assessment |
| --- | --- |
| (1) Establish decision criteria and process. A risk-based, decision-making process is defined to determine whether or the extent to which programs are to be subject to IV&V, to include: | |
| 1. establishing risk-based criteria for determining which programs, or aspects of programs, to subject to IV&V | ◑ |
| 2. establishing a process for using IV&V to improve the management of the IT acquisition/development program | ● |
| (2) Establish independence. The degree of technical, managerial, and financial independence required of the personnel or agents performing IV&V is defined, including: | |
| 3. technical, managerial, and financial independence requirements for the IV&V agent | ● |
| 4. a mechanism for reporting the results of IV&V to program oversight officials, as well as program management | ● |
| (3) Define program scope. The scope of IV&V activities is defined, including: | |
| 5. a definition of the program activities subject to IV&V | ● |
| 6. validation and verification compliance criteria for each program activity subject to IV&V | ● |
| (4) Define program resources. The resources needed for IV&V are specified, including: | |
| 7. the facilities, personnel, funding, tools, and techniques and methods | ● |
| (5) Establish management and oversight. The management and oversight to be performed are specified, including: | |
| 8. the process for responding to issues raised by the IV&V effort | ● |
| 9. the roles and responsibilities of all parties involved in the program | ● |
| 10. how the effectiveness of the IV&V effort will be evaluated | ● |

FMBT = Financial Management Business Transformation program

IV&V = independent verification and validation

VA = Department of Veterans Affairs

● = Met: VA provided evidence that satisfies the entire criterion

◑ = Partially met: VA provided evidence that satisfies about half of the criterion

○ = Not met: VA provided no evidence that satisfies any of the criterion

Source: GAO analysis of VA FMBT IV&V documentation. | GAO‑25‑107256

VA partially met the key sub-element related to establishing a risk-based process to determine which programs or aspects of the programs to subject to IV&V. This is because VA’s overall decision to perform independent reviews on FMBT was not based on a formal, risk-based process with documented criteria. However, the IV&V team did establish a risk-based process to determine which aspects of the program to subject to IV&V. Specifically, the team used a guide to identify areas of risk to scope its activities within each wave. This guide supported the team’s risk assessment, test preparation, and test execution processes for each FMBT wave.

We reviewed VA’s department-wide IT acquisition policies, procedures, and guidance. We found that these documents do not require independent reviews or incorporate any of the five key elements or 10 sub-elements of effective IV&V. VA’s policy did not include information regarding the IV&V process, procedures, or guidelines, and officials from various VA offices did not provide us with evidence of any related IT acquisition policies, procedures, or other guidance.

In response to our inquiry about why VA did not have an IT acquisition policy related to IV&V, VA acquisition officials stated that the department has not identified a specific need for such policy. OIT IV&V team officials stated that the department based its decision to use IV&V for FMBT primarily on the program’s designation as a major modernization effort. According to the team, the engagement and execution of IV&V has varied for VA programs and projects. To address this, team officials stated that they are developing a framework to determine which VA programs to subject to IV&V, aiming for implementation in 2026. According to team officials, they plan for this framework to use a standard set of criteria for making this determination, although it is not clear whether it will incorporate any of the other key elements or sub-elements.

While VA has incorporated most elements of effective IV&V for FMBT, the absence of formal department-wide policies and procedures could hinder these efforts. Having a documented policy that contains the five elements can further ensure that the key elements will be addressed. Without department-wide IT acquisition policies, procedures, and guidance that require and incorporate elements of effective IV&V, VA risks not consistently implementing independent reviews and not meeting its goals for other VA IT programs. This includes the risk of delivering a system with significant defects.

VA Has Substantially Addressed Most Issues Identified Through IV&V Efforts

FMBT has responded to the IV&V team’s recommendations and has taken steps to improve system quality and performance. For example, the team found that an iFAMS interface was missing specific requirements and acceptance criteria and recommended that these criteria be thoroughly outlined and documented. FMBT agreed with this recommendation and took corrective action, and the team verified that the issue was successfully resolved. Additionally, the team identified 26 critical and high-severity defects, which, as determined by the team, the program successfully addressed.[42] Overall, FMBT has substantially addressed most of the defects and the findings and recommendations identified through its IV&V efforts.

The IV&V team reports both (1) defects and (2) findings and recommendations to FMBT management and tracks their resolution internally. Defects are issues logged against explicitly stated FMBT requirements that were not met. The status of defects is tracked separately from findings and recommendations, which identify, document, and communicate potential areas of improvement. The team reports on defects and on findings and recommendations through IV&V weekly status reports, biweekly leadership reports, monthly progress reports, and summary reports submitted at the conclusion of implementation wave testing events. See appendix V for more detailed information on the team’s processes for defects and for findings and recommendations, and on the results of the program’s IV&V efforts.

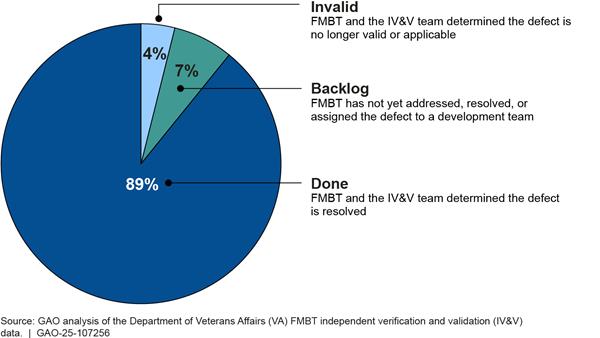

According to the IV&V team’s defect documentation, as of November 2024, the team reported that FMBT had resolved 89 percent of the defects that the IV&V team identified from 2020 to 2024 (see fig. 2). For instance, FMBT resolved a separation-of-duties issue, identified by the team, related to travel voucher creation.

Figure 2: Status of VA Financial Management Business Transformation Program (FMBT) IV&V-Identified Defects, Nov. 2020 to Nov. 2024

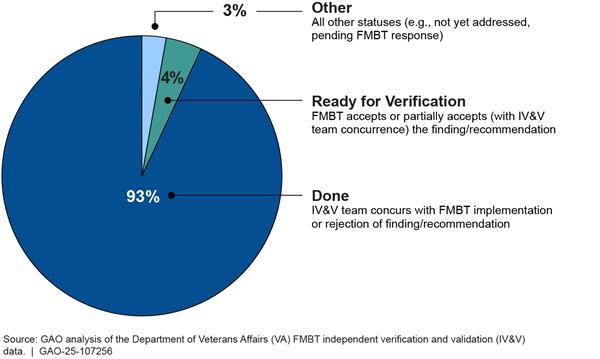

Further, according to the IV&V team’s findings and recommendations documentation, as of November 2024, FMBT had resolved 93 percent of the findings and recommendations that the IV&V team identified from 2021 to 2024 (see fig. 3). This is a significant improvement over the 27 percent of IV&V recommendations that the team previously reported had been implemented as of April 3, 2020.[43]

Figure 3: Status of VA Financial Management Business Transformation Program (FMBT) IV&V-Identified Findings and Recommendations, Apr. 2021 to Nov. 2024

Conclusions

FMBT continues to fall short of fully or substantially meeting the characteristics of a reliable cost estimate and schedule and their associated best practices, resulting in an unreliable cost estimate and schedule. Following cost estimating and scheduling best practices helps ensure that VA management has the information necessary for fully informed and sound decision-making and helps minimize the risk of cost overruns and schedule delays. Therefore, we reiterate the need for VA to implement our two prior recommendations related to ensuring that FMBT’s cost estimate and schedule are consistent with GAO-identified best practices.

FMBT’s requirements development and management activities substantially or fully met five Agile best practices and partially met the remaining three, which focused on ensuring the completeness, feasibility, and verifiability of requirements; maintaining requirements traceability; and balancing customer needs and constraints. If FMBT does not ensure complete and feasible requirements with clear definitions of done and ready, the program could be working on requirements that are not high priority. Without a road map to trace requirements across the program’s implementation life cycle, FMBT cannot establish that the work is contributing to its goals and providing value. By not balancing customer needs, the program could be developing functionality that is not immediately necessary.

VA’s use of independent reviews for FMBT generally incorporated the five key elements of effective IV&V; however, the department did not fully implement the sub-element on determining which programs to subject to independent reviews. VA does not have department-wide IT acquisition policy, procedures, or other guidance that require independent review or incorporate the key elements of effective IV&V. Without department-wide policy, procedures, and guidance that incorporate these key elements, VA increases the risk of not consistently implementing independent reviews and not meeting its goals for other VA IT programs.

Recommendations for Executive Action

We are making the following four recommendations to VA:

The Secretary of Veterans Affairs should ensure that the FMBT Deputy Assistant Secretary consistently documents and assesses the completeness of all requirements against acceptance criteria and definitions of done and ready, as required by the FMBT Scaled Agile Framework. (Recommendation 1)

The Secretary of Veterans Affairs should ensure that the FMBT Deputy Assistant Secretary facilitates requirements traceability by developing and implementing an Agile road map. This road map should align with best practices in Agile development to monitor the value of work completed and whether it meets stakeholder needs. (Recommendation 2)

The Secretary of Veterans Affairs should ensure that the FMBT Deputy Assistant Secretary balances customer needs and constraints by consistently assigning value of work during requirements development and prioritizing work based on relative value. (Recommendation 3)

The Secretary of Veterans Affairs should ensure that the Deputy Chief Information Officer of OIT incorporates and fully implements key elements of effective IV&V into OIT’s planned IV&V framework and acquisition policy, procedures, and guidance, including a formal, risk-based process for determining which VA IT programs to subject to independent reviews. (Recommendation 4)

Agency Comments

We provided a draft of this report to VA for review and comment. In its written comments, reproduced in appendix VI, VA concurred with our four recommendations and described actions it has taken and will take to address the issues we identified with FMBT. Those actions, if implemented as described, should address our recommendations.

We are sending copies of this report to the appropriate congressional committees, the Secretary of Veterans Affairs, and other interested parties. In addition, the report is available at no charge on the GAO website at https://www.gao.gov.

If you or your staff have any questions about this report, please contact Paula M. Rascona at (202) 512-9816 or rasconap@gao.gov, Brian Bothwell at (202) 512-6888 or bothwellb@gao.gov, or Vijay A. D’Souza at (202) 512-7650 or dsouzav@gao.gov. Contact points for our Offices of Congressional Relations and Public Affairs may be found on the last page of this report. GAO staff who made key contributions to this report are listed in appendix VII.

Paula M. Rascona

Director

Financial Management and Assurance

Brian P. Bothwell

Director

Science, Technology Assessment, and Analytics

Vijay A. D’Souza

Director

Information Technology and Cybersecurity

This report examines the extent to which (1) the Department of Veterans Affairs (VA) Financial Management Business Transformation program’s (FMBT) cost estimate and schedule followed best practices; (2) the program’s requirements development and management efforts followed Agile best practices; and (3) the design and implementation of VA’s independent verification and validation (IV&V) efforts for the program met key elements of effective IV&V, and FMBT addressed the issues those efforts identified.

To examine VA’s cost estimating practices, we reviewed documentation supporting FMBT’s October 2023 life cycle cost estimate, the most recent estimate available. (The four characteristics and 18 best practices for a reliable cost estimate are presented in table 6 in app. III.) The risk assessment component of internal control was significant to our review of the estimate, along with the underlying principles that management identify, analyze, and respond to (1) risks related to achieving the defined objectives and (2) significant changes that could affect the internal control system. To assess the reliability of the October 2023 cost estimate, we evaluated documentation supporting the estimate, such as the cost estimating models, the program’s October 2023 cost estimate report, and briefings provided to VA management. We assessed the cost estimate, including its methodologies, assumptions, and results, against best practices for developing a comprehensive, accurate, and credible cost estimate identified in GAO’s Cost Estimating and Assessment Guide.[44] We evaluated those characteristics of a reliable cost estimate that our previous report found to be less than substantially or fully met. We did not reassess the well-documented characteristic, or the best practices of a reliable cost estimate that our follow-up on the prior report’s recommendations found to be substantially met or met.[45]

To examine VA’s scheduling practices, we reviewed documentation on FMBT’s April 2024 integrated master schedule, the most recent schedule available. (The four characteristics and 10 best practices for a reliable schedule are presented in table 7 in app. III.) To assess the reliability of the April 2024 FMBT schedule, we evaluated documentation supporting the schedule, such as the integrated project schedules and program baseline. We assessed the schedule documentation against best practices for developing a comprehensive, well-constructed, and credible schedule, as identified in GAO’s Schedule Assessment Guide.[46] We evaluated those characteristics of a reliable schedule that our previous report found to be less than substantially or fully met. We did not reassess the controlled characteristic of a reliable schedule because our prior report found it to be substantially met.

For both the program cost estimate and schedule, we rated best practices under each characteristic as follows:

· Met: VA provided complete evidence that satisfies the entire criterion.

· Substantially met: VA provided evidence that satisfies a large portion of the criterion.

· Partially met: VA provided evidence that satisfies about one-half of the criterion.

· Minimally met: VA provided evidence that satisfies a small portion of the criterion.

· Not met: VA provided no evidence that satisfies any of the criterion.

We assigned each best practice a score based on a five-point scale: not met = 1, minimally met = 2, partially met = 3, substantially met = 4, and met = 5. Then, we calculated the average of each characteristic’s best practice score to determine the overall assessment rating for each characteristic as follows: not met = 1.0 to 1.4, minimally met = 1.5 to 2.4, partially met = 2.5 to 3.4, substantially met = 3.5 to 4.4, and met = 4.5 to 5.0. We compared the results of the cost and schedule analyses to the results from our past work to determine whether the department’s cost estimate and schedule have improved in meeting GAO best practices.
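The scoring arithmetic described above can be expressed in a few lines. The following Python sketch averages the five-point scores of a characteristic's best practices and maps the average to a rating band. One assumption is made that the methodology does not state: the bands are applied as lower bounds, so an average falling between two listed bands (for example, 3.45) is assigned to the lower band. The ratings in the example are hypothetical.

```python
# Five-point scale from the methodology above.
SCORES = {
    "not met": 1,
    "minimally met": 2,
    "partially met": 3,
    "substantially met": 4,
    "met": 5,
}

# Lower bound of each rating band, checked from highest to lowest.
# Assumption: averages between listed bands fall to the lower band.
BANDS = [
    (4.5, "met"),
    (3.5, "substantially met"),
    (2.5, "partially met"),
    (1.5, "minimally met"),
    (1.0, "not met"),
]


def characteristic_rating(practice_ratings: list[str]) -> tuple[float, str]:
    """Average the best-practice scores and map the average to a rating band."""
    if not practice_ratings:
        raise ValueError("a characteristic needs at least one rated best practice")
    average = sum(SCORES[r] for r in practice_ratings) / len(practice_ratings)
    for lower_bound, rating in BANDS:
        if average >= lower_bound:
            return average, rating
    # Unreachable: every score is at least 1, so the average is at least 1.0.
    raise AssertionError("average below 1.0")


# Hypothetical ratings for one characteristic's four best practices.
print(characteristic_rating(["met", "substantially met", "partially met", "partially met"]))
# → (3.75, 'substantially met')
```

Averaging means a single met best practice cannot lift a characteristic whose other practices are weak; in the example, one met and one substantially met practice still yield only a substantially met rating.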

Finally, we provided VA with draft versions of our detailed analyses of FMBT’s cost estimate and schedule so that department officials could verify the information on which we based our findings.

To determine the extent to which FMBT’s efforts for requirements development and management followed GAO best practices, we evaluated the program’s implementation of Agile principles against chapter 5 of GAO’s Agile Assessment Guide, which describes eight best practices for requirements development and management in Agile.[47] We reviewed FMBT documents, including program requirements backlogs, user story lists, requirements traceability matrices, and the FMBT Scaled Agile Framework document to conduct our initial analysis.[48] Then, we met with program officials and leadership to obtain their perspectives on requirements management in an Agile environment and any additional information relevant to our scoring. We then provided the draft analysis to FMBT officials for any additional comment or clarification. We incorporated new information as appropriate.

To assess the design and implementation of VA’s IV&V efforts, we first reviewed the five key elements and 10 sub-elements of effective IV&V identified in a prior GAO report.[49] To ensure that these key elements remained relevant and appropriate as evaluation criteria, we reviewed the latest versions of the relevant IV&V leading industry practices referenced in the prior report. Specifically, we reviewed (1) Institute of Electrical and Electronics Engineers, IEEE Standard for System, Software, and Hardware Verification and Validation;[50] (2) International Organization for Standardization, International Electrotechnical Commission, and Institute of Electrical and Electronics Engineers, Systems and Software Engineering—System Life Cycle Processes;[51] (3) International Organization for Standardization, International Electrotechnical Commission, and Institute of Electrical and Electronics Engineers, Systems and Software Engineering—Software Life Cycle Processes;[52] and (4) Software Engineering Institute, CMMI® for Development.[53] Based on this review, we further clarified the five key elements and 10 sub-elements.

Next, we interviewed VA officials and obtained and reviewed VA IT acquisition policies, procedures, and guidance and documentation related to FMBT’s IV&V efforts. We assessed this documentation against the five key elements and 10 sub-elements of effective IV&V.

To determine the extent to which VA addressed FMBT issues identified through its IV&V efforts, we reviewed documentation on the status, as of November 2024, of the defects and the findings and recommendations that the IV&V team identified. Specifically, we summarized this information from FMBT’s IV&V tracking spreadsheets for defects and for findings and recommendations as of November 2024.

The control activities component of internal control was significant to our evaluation of FMBT’s IV&V efforts, along with the underlying principles that management design control activities to achieve objectives and respond to risks and implement control activities through policies. We assessed the reliability of the IV&V team’s data on identified defects and on findings and recommendations by reviewing related documentation, interviewing VA officials, and performing manual data testing to determine the extent to which the related data fields and key elements were complete and whether there were any incomplete or missing data, or obvious errors. We determined that the team’s documentation of identified defects and of findings and recommendations was reliable for the purposes of our engagement.
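The manual data testing described above can be illustrated with an automated equivalent. The following Python sketch, assuming a hypothetical CSV export of a tracking spreadsheet, reports blank required fields, unexpected status values, and duplicate IDs. The column names, allowed statuses, and sample rows are assumptions for illustration; they are not the actual layout or contents of the FMBT IV&V tracking spreadsheets, and the sketch is not GAO's procedure.

```python
import csv
import io

# Hypothetical column names and status values; the actual tracking
# spreadsheets may use different ones.
REQUIRED_FIELDS = ["id", "severity", "status", "date_identified"]
ALLOWED_STATUSES = {"Open", "Resolved"}


def check_tracking_rows(csv_text: str) -> list[str]:
    """Report blank required fields, unexpected status values, and duplicate IDs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    absent = [c for c in REQUIRED_FIELDS if c not in (reader.fieldnames or [])]
    if absent:
        return [f"missing column(s): {', '.join(absent)}"]

    problems = []
    seen_ids = set()
    # Row numbers count data rows only, starting at 1 after the header.
    for row_no, row in enumerate(reader, start=1):
        # Short rows yield None for trailing fields, so treat None as blank.
        values = {c: (row.get(c) or "").strip() for c in REQUIRED_FIELDS}
        for col, value in values.items():
            if not value:
                problems.append(f"row {row_no}: blank {col}")
        if values["status"] and values["status"] not in ALLOWED_STATUSES:
            problems.append(f"row {row_no}: unexpected status {values['status']!r}")
        if values["id"] and values["id"] in seen_ids:
            problems.append(f"row {row_no}: duplicate id {values['id']}")
        seen_ids.add(values["id"])
    return problems


# Hypothetical sample: row 2 lacks a severity; row 3 repeats an ID and has an odd status.
sample = (
    "id,severity,status,date_identified\n"
    "D-1,High,Resolved,2023-01-05\n"
    "D-2,,Open,2023-02-10\n"
    "D-1,Critical,Closed?,2023-03-01\n"
)
for problem in check_tracking_rows(sample):
    print(problem)
```

Checking for absent columns first keeps the row-level messages meaningful; a spreadsheet missing a required column fails once rather than on every row.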

We conducted this performance audit from January 2024 to February 2025 in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

Table 5 defines key terms associated with Agile development that are used in this report.

|

Term |

Definition |

|

Acceptance criteria |

Criteria by which a work item (usually a user story) is judged to be successful or not. Acceptance criteria are developed to identify when the user story has been completed and meets the preset standards for quality and production readiness. |

|

Agile |

An umbrella term for a variety of best practices in software development. Agile software development supports the practice of shorter software delivery. Specifically, Agile calls for the delivery of software requirements in small and manageable predetermined increments based on an “inspect and adapt” approach where the requirements change frequently and software is released in increments. More a philosophy than a methodology, Agile emphasizes early and continuous software delivery, fast feedback cycles, rhythmic delivery cadence, the use of collaborative teams, and measuring progress in terms of working software. There are many specific methodologies that fall under this category, including Scrum, eXtreme Programming, and Kanban. |

|

Backlog |

A list of features, user stories, and tasks to be addressed by the team or program, ordered from highest to lowest priority. Newly discovered requirements or defects are added to the backlog. |

|

Backlog refinement |

The process for keeping the backlog updated by adding detail and revisiting the order and estimates assigned to work that teams agree to be necessary. This allows details to emerge as knowledge increases through feedback and learning cycles. |

|

Cadence |

The rhythm and predictability that a team enjoys by delivering in consistent time boxes. |

|

Capacity |

The quantity of resources available to perform useful work. |

|

Customer |

Synonymous with business sponsor because the customer requires the product or service. The customer may or may not be a user. The customer is an integral part of the development and has specific responsibilities depending on the Agile methods used. The customer wants continuous improvement of products and services. |

|

Definition of done |

A predefined set of criteria that must be met before a work item is considered complete. The definition of done serves as a checklist, identifying all activities/artifacts besides working code that must be completed for a feature to be ready for deployment or release, including testing, documentation, training material development, and certifications. |

|

Definition of ready |

A predefined set of criteria specifying the level of detail needed before teams can begin development on a user story. Since detailed requirements evolve throughout the lifespan of the program, a definition of ready helps to ensure that participants work on only the most current and highly ranked requirements and that those requirements always reflect any updates to plans, activities, and work products. |

|

Epic |

A large user story that can span one or more releases and is progressively refined into features and then into smaller user stories that are at the appropriate level for daily work tasks and captured in the backlog. Epics are used as placeholders to keep track of and prioritize larger ideas. In the Financial Management Business Transformation program (FMBT), an epic generally takes more than one program increment but less than 1 year to complete. |

|

Feature |

A specific amount of work that can be developed within one or two reporting periods. It can be further segmented into user stories. The functionality is described with enough detail that it can remain stable throughout its development and integration into working software. In FMBT, a feature takes more than one sprint but less than one program increment to complete. |

|

Iteration |

A predefined, time-boxed and recurring period of time in which working software is created. Instead of relying on extensive planning and design, an iteration relies on rework informed by customer feedback. In FMBT, the recommended iteration duration is 4 weeks. |

|

Product |

A tangible item produced to create specific value to satisfy a want or requirement. |

|

Product owner |

The person who is accountable for ensuring business value is delivered by creating customer-centric items (typically user stories), ordering them, and maintaining them in the backlog. The product owner defines acceptance criteria for user stories. In Scrum, the product owner is the sole person/entity responsible for managing the backlog. The product owner’s duties typically include clearly expressing the backlog items, prioritizing the backlog items to reflect goals and missions, keeping the backlog visible to all, optimizing the value of development work, ensuring that the developers fully understand the backlog items, and deciding when a feature is “done.” A product owner should be available to the team within a reasonable time for both decision-making and empowerment. |

|

Program increment |

A time box in which working software is created across multiple iterations/sprints. In FMBT, a program increment lasts for four iterations/sprints (16 weeks). |

|

Regression testing |

A type of software testing that verifies that software that was previously developed and tested still performs correctly after it was changed or interfaced with other software. These changes may include software enhancements, patches, configuration changes, and so forth. During regression testing, new software bugs or regressions may be discovered. |

|

Release/implementation wave |

A planning segment of requirements (typically captured as features or user stories in the backlog) that implements needed capabilities. FMBT describes segments of its implementation process as “waves.” |

|

Requirement |

A condition or capability needed by a customer to solve a problem or achieve an objective. |

|

Requirements traceability matrix |

A tool for demonstrating that low-level requirements are traceable to high-level requirements. A requirements traceability matrix ensures that all defined functional requirements are tested and are traceable to features, user stories, and process flows. |

|

Road map |

A high-level plan that outlines a set of releases and the associated features. The road map is intended to be continuously revised as the plan evolves. |

|

Scaled Agile framework |

A governance model used to align and coordinate product delivery across modest-to-large numbers of Agile software development teams. The framework provides guidance on roles, inputs, and processes for teams, programs, large solutions, and portfolios. It is also intended to be scalable and flexible enough to define roles, artifacts, and processes for Agile software development across all levels of an organization. |

|

Sprint |

See iteration. In FMBT, the recommended sprint duration is 4 weeks. |

|

Stakeholder |

Anyone who has an interest in the program, specifically parties who may be affected by a decision made by or about the program, or who could influence the implementation of the program’s decisions. Stakeholder engagement is a key part of corporate social responsibility and of achieving the program’s vision. A group or individual with a relationship to a program change, a program need, or the solution can be considered a stakeholder. |

|

Time box |

A previously agreed-upon period of time during which a person or a team works steadily toward completing a product. |

|

User story |

The smallest level of detail in an Agile program. A requirement definition written in everyday or business language, a user story is a communication tool written by or for customers to guide developers. It can also be written by developers to express nonfunctional requirements, such as security, performance, or quality. Full system requirements consist of a body of user stories. User stories are used in all levels of Agile planning and execution. An individual user story captures the “who,” “what,” and “why” of a requirement in a simple, concise way, and can be limited in detail by what can be handwritten on a small paper notecard (also called “story”). In FMBT, a user story is a work item that takes less than a 4-week sprint to complete. |

Source: GAO; Department of Veterans Affairs FMBT documentation. │ GAO‑25‑107256

In March 2020, we issued GAO’s Cost Estimating and Assessment Guide, where we identified 18 best practices associated with a reliable cost estimate, which are summarized into four characteristics: (1) comprehensive, (2) well-documented, (3) accurate, and (4) credible.[54] According to the guide, a cost estimate is considered reliable if the assessment ratings for each of the four characteristics are substantially or fully met. If any of the characteristics are scored as not met, minimally met, or partially met, the cost estimate cannot be considered reliable.
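The guide's reliability rule is an all-of test across the four characteristics. It is not an average. The Python sketch below encodes that rule. It assumes the five assessment levels are ordered from not met to fully met. The names `Rating`, `CHARACTERISTICS`, and `is_reliable` are hypothetical and are not part of any GAO or VA tool. The example uses the overall 2023 FMBT ratings reported in table 6. Because well-documented was not reevaluated, it is assumed to carry forward its original substantially met rating.

```python
from enum import IntEnum


class Rating(IntEnum):
    """Assessment levels, ordered so comparisons follow the rating scale (assumed)."""
    NOT_MET = 0
    MINIMALLY_MET = 1
    PARTIALLY_MET = 2
    SUBSTANTIALLY_MET = 3
    FULLY_MET = 4


# The four characteristics of a reliable cost estimate.
CHARACTERISTICS = ("comprehensive", "well-documented", "accurate", "credible")


def is_reliable(ratings: dict) -> bool:
    """Return True only if every characteristic is substantially or fully met."""
    missing = [c for c in CHARACTERISTICS if c not in ratings]
    if missing:
        raise ValueError("missing ratings for: " + ", ".join(missing))
    # A single characteristic below substantially met makes the estimate unreliable.
    return all(ratings[c] >= Rating.SUBSTANTIALLY_MET for c in CHARACTERISTICS)


# Overall 2023 FMBT ratings; well-documented is carried forward (assumption).
fmbt_2023 = {
    "comprehensive": Rating.PARTIALLY_MET,
    "well-documented": Rating.SUBSTANTIALLY_MET,
    "accurate": Rating.SUBSTANTIALLY_MET,
    "credible": Rating.MINIMALLY_MET,
}

print(is_reliable(fmbt_2023))  # False
```

The estimate is unreliable even though two characteristics meet the bar. Comprehensive (partially met) and credible (minimally met) each fall below substantially met, and either one alone would be enough to fail the test.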

In our March 2021 report, we found that the 2019 Financial Management Business Transformation program (FMBT) cost estimate was unreliable because it did not fully or substantially meet all characteristics associated with a reliable estimate.[55] In this review, we reevaluated the characteristics of a reliable cost estimate that had been rated less than substantially or fully met: (1) comprehensive, (2) accurate, and (3) credible. We found that the 2023 FMBT cost estimate was also unreliable because it did not fully or substantially meet all characteristics of a reliable estimate. Table 6 summarizes our findings for each characteristic of a reliable cost estimate and its associated best practices.

|

Overall GAO characteristic assessment |

Best practice |

GAO best practice assessment |

Summary |

|

Comprehensive Original assessment: Partially met Updated assessment: Partially met |

The cost estimate includes all life cycle costs. |

Original assessment: partially met 2024 assessment: substantially met |

The FMBT life cycle cost estimate extends far enough into the future to include sufficient cost information for the last implementation wave as well as operations and support for the program as a whole. By including all costs within the life cycle cost estimate, such as interface costs with future systems, this best practice can improve to be fully met. |

|

The cost estimate is based on a technical baseline description that completely defines the program, reflects the current schedule, and is technically reasonable. |

Original assessment: partially met 2024 assessment: partially met |

Appropriate experts developed a technical baseline, which was updated as requirements became better defined. To fully meet this best practice, the technical baseline documentation should include approving authority signatures, be consistent across documents, and discuss or identify risks. For example, there is a discrepancy in the number of interfaces between the cost estimate and the technical baseline. |

|

|

The cost estimate is based on a work breakdown structure (WBS) that is product-oriented, traceable to the statement of work, and at an appropriate level of detail to ensure that cost elements are neither omitted nor double-counted.a |

Original assessment: partially met 2024 assessment: partially met |

The life cycle cost estimate has a WBS in the form of a cost element structure that contains some common elements and three levels of indenture that break larger products down into progressive levels of detail. This best practice can improve to be fully met if the cost element structure is revised to use wave names instead of generic terms, match the schedule WBS, assign each child element's costs to a single parent element, include all common elements, provide insight into high-cost elements, include a dictionary that details child elements, and be updated as the estimate is refined. |

|

|

The cost estimate documents all cost-influencing ground rules and assumptions. |

Original assessment: partially met 2024 assessment: partially met |

The life cycle cost estimate contains ground rules and assumptions. By identifying risks associated with assumptions, constraints, or dependencies, using cost-influencing assumptions as inputs to the sensitivity and uncertainty analyses, and ensuring that the estimate aligns with all assumptions, this best practice can improve to be fully met. |

|

|

Well-documented Original assessment: Substantially met Updated assessment: N/A |

The cost estimate shows the source data used, the reliability of the data, and the cost estimating methodology used to derive each element’s cost. |

Original assessment: substantially met 2024 assessment: N/A |

The well-documented characteristic was originally scored as substantially met overall. Thus, the 2023 cost estimate was not reevaluated for these best practices as part of this review. |

|

The cost estimate describes how the estimate was developed so that a cost analyst unfamiliar with the program could understand what was done and replicate it. |

Original assessment: substantially met 2024 assessment: N/A |

||

|

The cost estimate discusses the technical baseline description and the data in the technical baseline are consistent with the cost estimate. |

Original assessment: partially met 2024 assessment: N/A |

||

|

The cost estimate provides evidence that management reviewed and accepted it. |

Original assessment: partially met 2024 assessment: N/A |

||

|

Accurate Original assessment: Partially met Updated assessment: Substantially met |

The cost estimate is based on a model developed by estimating each WBS element using the best methodology from the data collected. |

Original assessment: partially met 2024 assessment: partially met |

Documentation describes the methodologies used. By ensuring that the methodology used to estimate interface costs relies on applicable data and that wave costs are informed by requirements, among other things, this best practice can improve to be fully met. |

|

The cost estimate is adjusted properly for inflation. |

Original assessment: minimally met 2024 assessment: N/A |

This best practice was assessed as substantially met in 2022 during audit follow-up efforts for our original assessment. Thus, the 2023 cost estimate was not reevaluated for this best practice as part of this review. |

|

|

The cost estimate contains few, if any, minor mistakes. |

Original assessment: partially met 2024 assessment: substantially met |

Some mistakes we previously identified were corrected, showing improvement in the updated estimate. By correcting the remaining mistakes and ensuring that the cost estimate has consistent inputs and accurate normalization and escalation, among other things, this best practice can improve to be fully met. |

|

|

The cost estimate is regularly updated to ensure that it reflects program changes and actual costs. |

Original assessment: substantially met 2024 assessment: N/A |

This best practice was originally scored as substantially met. Thus, the 2023 cost estimate was not reevaluated for this best practice as part of this review. |

|

|

The cost estimate documents, explains, and reviews variances between planned and actual costs. |

Original assessment: partially met 2024 assessment: partially met |

In both our 2024 and original analyses, we found that actual costs are captured in the cost model along with differences between planned and actual costs. This best practice can improve to be fully met by defining a variance threshold so that all elements whose cost variances exceed it are reviewed, and by including lessons learned to inform future estimates. |

|

|

The cost estimate is based on a historical record of cost estimating and actual experiences from other comparable programs. |

Original assessment: partially met 2024 assessment: partially met |

In both our 2024 and original analyses, we found that data sources such as awarded contracts were used to inform the cost estimate. VA stated that there were no comparable programs or data available in the department, despite the program collecting actual FMBT costs from 2018 forward. By addressing the reliability of the data sources used to estimate costs for the program, as well as knowledge about those data sources, this best practice can improve to be fully met. |

|

|

Credible Original assessment: Minimally met Updated assessment: Minimally met |

The cost estimate includes a sensitivity analysis that identifies a range of possible costs based on varying major assumptions, parameters, and data inputs. |

Original assessment: minimally met 2024 assessment: minimally met |

The 2023 life cycle cost estimate report documents a sensitivity analysis. By improving the cost estimate sensitivity analysis to vary input variables and underlying assumptions for the top three cost drivers examined, among other things, this best practice can improve to be fully met. |

|

|

The cost estimate includes a risk and uncertainty analysis that quantifies the imperfectly understood risks and identifies the effects of changing key cost driver assumptions and factors. |

Original assessment: minimally met 2024 assessment: minimally met |

We found that the 2023 life cycle cost estimate contains a risk analysis; however, issues remain. This best practice can improve to be fully met by including a risk and uncertainty analysis within the cost estimate that quantifies risks and uncertainties of individual cost element structure elements, assumptions, or data used; considers correlation; allocates the risk-adjusted estimate to cost element structure elements; and identifies contingency for achieving the desired confidence level. |

|

|

The cost estimate employs cross-checks—or alternate methodologies—on major cost elements to validate results. |

Original assessment: not met 2024 assessment: not met |

VA has not performed cross-checks on the major cost elements. |

|

|

The cost estimate is compared to an independent cost estimate conducted by a group outside the acquiring organization to determine whether other estimating methods produce similar results. |

Original assessment: not met 2024 assessment: partially met |