ARTIFICIAL INTELLIGENCE

Generative AI Use and Management at Federal Agencies

Report to Congressional Requesters

United States Government Accountability Office

For more information, contact Candice N. Wright at WrightC@gao.gov and Kevin Walsh at WalshK@gao.gov.

What GAO Found

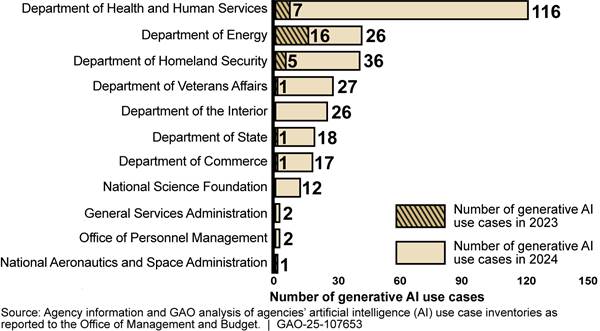

Across the 11 selected agencies GAO reviewed with artificial intelligence (AI) inventories, the total number of reported AI use cases nearly doubled from 571 in 2023 to 1,110 in 2024. At the same time, generative AI use cases increased about nine-fold, from 32 to 282. See the figure for the numbers of generative AI use cases agencies submitted to the Office of Management and Budget (OMB).

Generative AI offers potential benefits. In the mission-support area, the technology could improve written communications, information access efficiency, and program status tracking. Program-specific examples include:

· The Department of Veterans Affairs started an effort to automate various medical imaging processes to enhance veterans’ diagnostic services.

· To support containment of the poliovirus, the Department of Health and Human Services initiated an effort to extract information from publications and identify outbreaks in areas previously thought to be polio-free.

Agency officials told GAO that they face several challenges to using generative AI, such as: complying with existing federal policies and guidance, having sufficient technical resources and budget, and maintaining up-to-date appropriate use policies. For example, officials at 10 of 12 selected agencies said existing federal policy, such as data privacy policy, could present obstacles to adoption. Furthermore, officials at four agencies told GAO that the technology’s rapid evolution can complicate establishment of generative AI policies and practices.

Agencies are beginning to take steps to address challenges by, among other things, (1) leveraging available AI frameworks and guidance to inform their own policies and (2) engaging in collaborative efforts with other agencies. While these efforts were under way, executive branch AI guidance was significantly revised in early 2025. Accordingly, in conjunction with efforts to address challenges, agencies are incorporating these revisions into their management of generative AI.

Why GAO Did This Study

Recent growth in AI capabilities has spurred a corresponding rise in public interest. Developments in generative AI, which can create text, images, audio, video, and other content when prompted by a user, have revolutionized how the technology can be used in many industries. However, generative AI can also spread misinformation and present national security and environmental risks.

GAO was asked to describe federal agencies’ efforts to pursue generative AI. This report is the fourth in a body of work on generative AI. GAO’s objectives included describing selected agencies’ ongoing and planned uses of generative AI and resulting potential benefits as well as describing agencies’ challenges in using and managing generative AI and efforts to address these challenges.

GAO selected 12 agencies that publicly reported having generative AI use cases in 2023 or 2024. GAO reviewed AI use case inventories submitted by 11 agencies (the Department of Defense is exempt from the requirement). GAO also analyzed challenges reported by the 12 agencies and categorized those most frequently mentioned. Additionally, GAO interviewed officials from OMB and the Office of Science and Technology Policy about their government-wide policies and guidance on generative AI.

Abbreviations

AI artificial intelligence

CAIO Chief Artificial Intelligence Officer

CIO Chief Information Officer

DHS Department of Homeland Security

DOD Department of Defense

DOE Department of Energy

DOI Department of the Interior

EO executive order

GSA General Services Administration

HHS Department of Health and Human Services

NASA National Aeronautics and Space Administration

NIST National Institute of Standards and Technology

NSF National Science Foundation

OMB Office of Management and Budget

OPM Office of Personnel Management

OSTP Office of Science and Technology Policy

RMF risk management framework

VA Department of Veterans Affairs

This is a work of the U.S. government and is not subject to copyright protection in the United States. The published product may be reproduced and distributed in its entirety without further permission from GAO. However, because this work may contain copyrighted images or other material, permission from the copyright holder may be necessary if you wish to reproduce this material separately.

July 29, 2025

The Honorable Gary C. Peters

Ranking Member

Committee on Homeland Security and Governmental Affairs

United States Senate

The Honorable Edward J. Markey

United States Senate

The rise of artificial intelligence (AI) has created growing excitement and much debate about its potential to revolutionize entire industries, particularly with recent developments in generative AI. These developments have led to uses that can create text, images, audio, video, and other content when prompted by a user. Although recent estimates are highly variable, generative AI could add trillions of dollars to the global economy over the next decade. In the near term, generative AI may increase productivity and transform daily tasks in many industries. However, it may also help spread misinformation and present national security and environmental risks. As federal agencies deploy generative AI to help improve operations and service delivery, the Office of Management and Budget (OMB) has directed agencies to accelerate the federal use of AI technologies by prioritizing innovation, governance, and public trust.[1]

This report is the fourth in a body of work on generative AI.[2] You asked us to review federal agencies’ efforts to pursue generative AI. This report describes: (1) selected agencies’ use or planned use of generative AI, potential benefits of implementing the technology, and collaborative efforts to advance the technology; (2) challenges facing selected agencies’ current and future use and management of generative AI; and (3) selected agencies’ use of frameworks and guidance to inform policies and practices and address challenges. To address these objectives, we selected 12 federal agencies that publicly reported having generative AI use cases in 2023 or 2024.[3] Those 12 agencies are the Departments of Commerce, Defense (DOD), Energy (DOE), Health and Human Services (HHS), Homeland Security (DHS), the Interior (DOI), State, and Veterans Affairs (VA); the General Services Administration (GSA); the National Aeronautics and Space Administration (NASA); the National Science Foundation (NSF); and the Office of Personnel Management (OPM).

For the first objective, we reviewed 11 of 12 selected agencies’ AI use case inventories as submitted to OMB. We did not include DOD in this part of our review because it is exempt from certain statutory and public AI use case inventory reporting requirements.[4] Using OMB’s 2023 and 2024 consolidated AI use case inventories, we confirmed with the agencies which of those use cases employ generative AI.

We assessed the reliability of agencies’ AI inventories by examining the data for duplicate or missing entries, among other steps. We determined that the data were sufficiently reliable for providing a general overview of the number and type of AI and generative AI use cases reported by agencies.

For the second and third objectives, across all 12 selected agencies, we collected and reviewed agency officials’ views on the challenges they are facing and the policies and practices of agencies using generative AI. We conducted a literature search to supplement and confirm agency information on the challenges agencies face with generative AI use and management. Additionally, we reviewed documentation and interviewed officials from OMB and the Office of Science and Technology Policy (OSTP) about their government-wide policies and guidance on generative AI. Appendix I contains additional information on our objectives, scope, and methodology.

We conducted this performance audit from June 2024 to July 2025 in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

Background

Generative AI Technology Overview

Generative AI systems create outputs using algorithms, which are often trained on text and images obtained from the internet. Technological advancements in the underlying systems and architectures since 2017, combined with the open availability of AI tools to the public starting in late 2022, have led to widespread use. The technology is continuously evolving, with rapidly emerging capabilities that could revolutionize entire industries. As we previously reported, generative AI could offer agencies benefits in summarizing information, enabling automation, and improving productivity.[5]

However, despite continued growth in capabilities, generative AI systems are not cognitive, lack human judgment, and may pose numerous risks. In July 2024, the National Institute of Standards and Technology (NIST) published a document defining risks that are novel to or exacerbated by generative AI.[6] For example, NIST stated that generative AI can cause data privacy risks due to unauthorized use, disclosure, or de-anonymization of biometric, health, or other personally identifiable information or sensitive data.

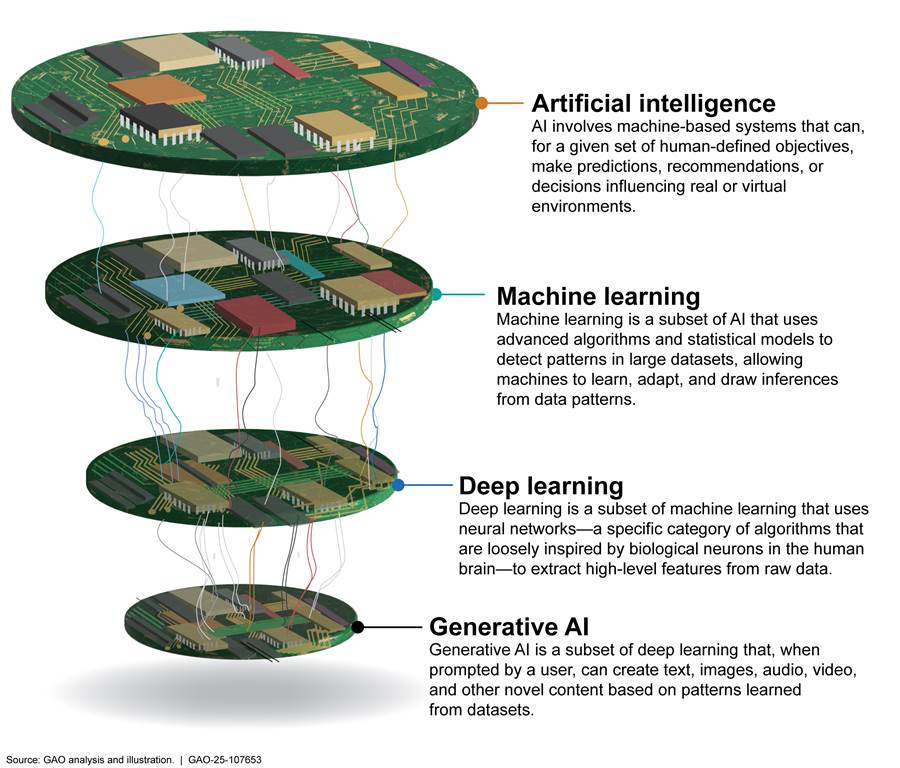

Users can solicit outputs from a generative AI system by using inputs called “prompts”—questions or descriptions entered by a user to generate and refine the results. Many of the available generative AI systems allow users to prompt the system in natural, or ordinary, language, such as a text query typed into a chat box. The ability to create, or generate, novel content separates generative AI from other types of AI.[7] Figure 1 shows generative AI’s relationship to other fields of study in AI.

Figure 1: Generative Artificial Intelligence (AI) in Relation to Other Types of AI

Evolution of Executive Orders and Federal Guidance for AI and Generative AI

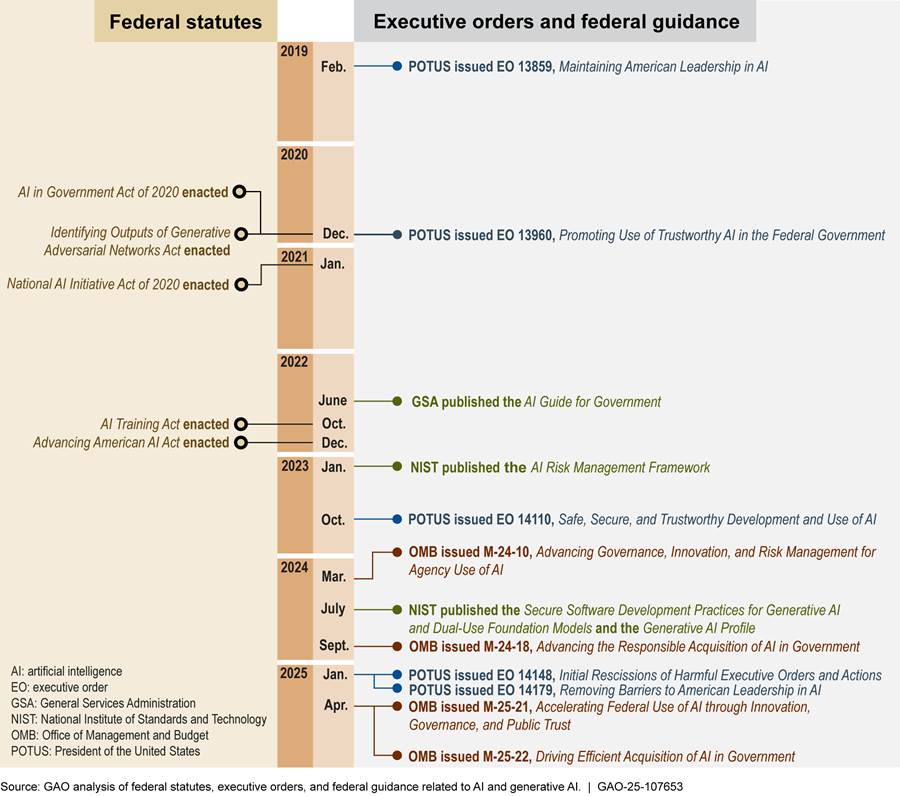

Federal agencies' efforts to implement AI are guided by federal law, executive actions, and federal guidance. Since 2019, various laws have been enacted, and executive orders (EO) and guidance have been issued to assist federal agencies in implementing AI. For example:

· In February 2019, the President issued EO 13859, establishing the American AI Initiative, which promoted AI research and development investment and coordination, among other things.[8]

· In December 2020, the President issued EO 13960, promoting the use of trustworthy AI, which focused on operational AI and established a common set of principles for the design, development, acquisition, and use of AI in the federal government.[9]

· In October 2023, the President issued EO 14110, which aimed to advance a coordinated, federal government-wide approach to the development and safe and responsible use of AI.[10]

· In March 2024, OMB issued M-24-10, which aimed to advance agencies’ AI governance, innovation, and risk management.[11] In addition, in September 2024, OMB issued M-24-18 which aimed to advance the government’s responsible acquisition of AI.[12]

· In January 2025, EO 14148 was issued and rescinded EO 14110.[13] Later in January 2025, the President issued EO 14179, which updated U.S. policy on AI and directed the development and submission of an AI action plan by July 22, 2025.[14]

· In April 2025, as directed by EO 14179, OMB issued M-25-21, which rescinded and replaced M-24-10.[15] This memorandum requires agencies to develop a policy for acceptable use of generative AI, among other AI requirements. In addition, OMB issued M-25-22, which rescinded and replaced M-24-18.[16] This memorandum provides guidance to agencies to improve their ability to acquire AI responsibly.

In addition to executive orders and OMB policy memorandums, selected agencies have published government-wide guidance for AI, and for generative AI specifically.

· GSA AI Guide for Government. This guide is intended to help government decision-makers by offering clarity and guidance on defining AI, understanding its capabilities and limitations, and explaining how agencies could apply it to their mission areas.[17] For example, the guide identifies key AI terminology and steps agencies could take to develop their AI workforce.

· NIST Secure Software Development Practices for Generative AI and Dual-Use Foundation Models. This document expands on NIST’s Secure Software Development Framework by incorporating practices for generative AI and other advanced general-purpose models.[18] It documents potential generative AI development risk factors and strategies to address them.

Figure 2 provides a timeline of key federal efforts to advance AI and generative AI since 2019.

Figure 2: Timeline of Key Federal Efforts to Advance AI and Generative AI

AI Use Case Inventory Requirements

In December 2020, EO 13960 established the foundational requirement for AI use case inventories.[19] The order directed federal agencies to prepare and maintain inventories of their unclassified and nonsensitive current and planned uses of AI. The inventory requirement supports broader goals of increasing transparency, improving interagency coordination, and promoting the responsible and lawful use of AI. In December 2022, the Advancing American AI Act was enacted, which, among other things, codified various requirements for agencies’ AI use case inventories and directed agencies to:

· prepare and maintain an inventory of the AI use cases of the agency, including current and planned uses;

· share agency inventories with other agencies; and

· make agency inventories available to the public.[20]

In October 2021, the Federal Chief Information Officers (CIO) Council released 2021 Guidance for Creating Agency Inventories of Artificial Intelligence Use Cases. This guidance provided agencies with a structured approach to identifying and reporting their AI use cases in compliance with EO 13960.[21] In 2023, the Federal CIO Council updated this guidance by releasing Guidance for Creating Agency Inventories of AI Use Cases Per EO 13960. It gave agencies the criteria, format, and mechanisms to create and make public their annual AI use case inventories.[22]

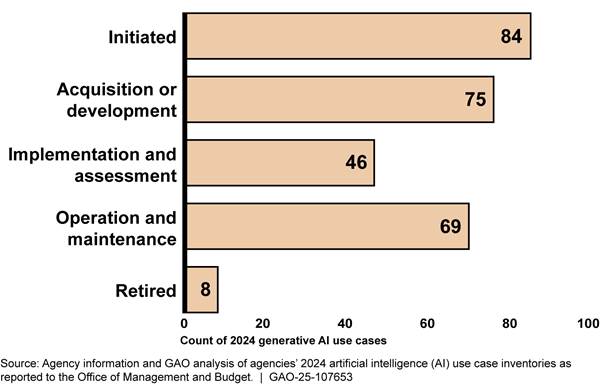

Most recently, in August 2024, the Federal CIO Council released Guidance for 2024 Agency Artificial Intelligence Reporting per EO 14110 and subsequent supplemental information. This guidance and information offered detailed instructions for agencies on the criteria, format, and mechanisms for reporting their 2024 AI use cases.[23] The supplemental information also defined the following stages of development to guide agencies’ identification of each use case’s developmental status:

· Initiated. The need for an AI use case has been expressed and its intended purpose and high-level requirements are documented.

· Acquisition or development. An AI use case has been identified for development or is currently under development with the necessary IT tools and data infrastructure available for use.

· Implementation and assessment. The AI system associated with the use case is currently undergoing functionality and security testing.

· Operation and maintenance. The AI use case has been integrated into agency operations and is being monitored for performance.

· Retired. The AI use case has been retired or is in the process of being retired.

This 2024 guidance also established requirements for agencies to provide the topic area for each use case in their inventories. The Federal CIO Council provided examples for each of the 11 use case topic areas. Table 1 shows these topic areas and a summarized description based on the examples provided for each area.

Table 1: AI Use Case Inventory Topic Areas, 2024

| Topic area | AI use case description |
| --- | --- |
| Diplomacy & trade | Supporting international relations, humanitarian assistance, and global trade logistics. |
| Education & workforce | Enhancing educational processes, workforce training, employee accommodations, and administrative efficiency. |
| Emergency management | Predicting, managing, and responding to emergencies and disasters. |
| Energy & environment | Promoting energy innovation, environmental management, and the safety of energy facilities. |
| Government services | Improving access, efficiency, and effectiveness in delivering government benefits, services, and communications. |
| Health & medical | Aiding health care delivery, medical research, patient monitoring, data management, diagnostics, and drug safety. |
| Law & justice | Supporting law enforcement, investigations, border security, immigration services, and related activities. |
| Mission-enabling (internal agency support) | Supporting internal agency operations such as finance, human resources, facilities management, cybersecurity, procurement, administrative tasks, and information technology services. |
| Science & space | Supporting research and exploration in computer, earth, physical, life, and space sciences. |
| Transportation | Enhancing vehicle operations and infrastructure maintenance. |
| Other | Other uses that do not fit into the categories listed above, requiring an alternative categorization. |

Source: GAO analysis of the Federal Chief Information Officers Council documentation. | GAO‑25‑107653

In April 2025, OMB issued M-25-21 (which replaced M-24-10) and directed agencies to continue to inventory their AI use cases at least annually, submit their inventories to OMB, and post public versions on their websites. It also encourages agencies to update the public versions of their inventories on an ongoing basis to reflect their current use of AI.[24] Additionally, M-25-21 requires agencies to:

· Identify high-impact AI use cases. Agencies are required to determine whether an AI use case qualifies as “high-impact” because of its potential effects on privacy or access to certain benefits and services, among other things.[25] Chief AI Officers (CAIO) are responsible for centrally tracking high-impact use cases and related determinations.

· Document risk management actions. Agencies are required to complete an AI Impact Assessment before deploying any high-impact AI use case and implement the minimum risk management practices outlined in the memorandum. CAIOs may waive specific requirements based on a written, system-specific, and context-specific risk assessment, and must track waivers centrally.

· Publicly disclose and notify OMB of waivers and determinations. Agencies are required to publicly release a summary describing each individual determination and waiver related to high-impact AI use cases, consistent with applicable law and government-wide policy. CAIOs are to report the scope, justification, and supporting evidence for each waiver to OMB within 30 days of granting or revoking it.
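The 30-day reporting window in the last requirement can be illustrated with a short date calculation. This is only a minimal sketch: it assumes the window is counted in calendar days, which the text above does not specify, and the function name and example date are hypothetical.

```python
from datetime import date, timedelta

# Assumption: the 30-day window is counted in calendar days from the
# date a waiver is granted or revoked. The memorandum text summarized
# above does not say whether calendar or business days apply.
REPORTING_WINDOW_DAYS = 30


def omb_report_due(action_date: date) -> date:
    """Return the latest date to report a waiver action to OMB (hypothetical helper)."""
    return action_date + timedelta(days=REPORTING_WINDOW_DAYS)


# Hypothetical example: a waiver granted on May 1, 2025.
due = omb_report_due(date(2025, 5, 1))
print(due.isoformat())
```

A tracking system built along these lines would also need to record the scope, justification, and supporting evidence for each waiver, since those are the items CAIOs are to report.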

Selected Agencies Reported Generative AI Growth, Potential Benefits, and Collaborative Efforts

Generative AI Use Is Escalating Rapidly

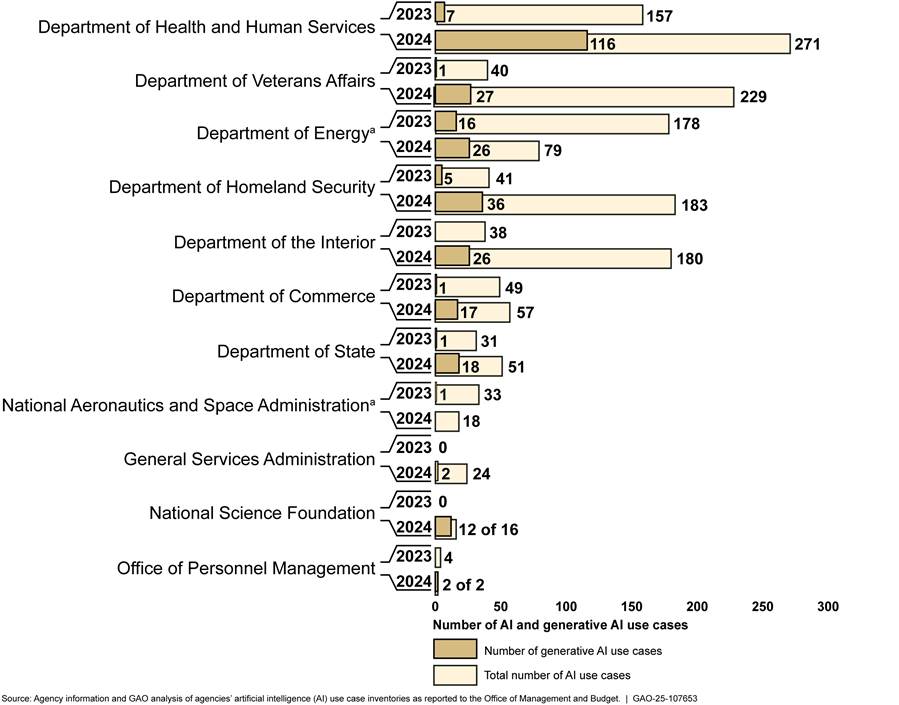

For the 11 selected agencies we reviewed with AI inventories, the number of reported AI use cases nearly doubled from 571 in 2023 to 1,110 in 2024. Generative AI use cases increased nearly nine-fold from 32 in 2023 to 282 in 2024. Our analysis of the agency inventories showed that generative AI use expanded in all but one of the agencies we reviewed. In 2023, seven of the 11 agencies we reviewed (all agencies except DOI, GSA, NSF, and OPM) identified use cases that employed generative AI technologies.[26] In 2024, 10 of 11 agencies (all agencies except NASA) reported generative AI use cases. Figure 3 shows the numbers of AI use cases submitted to OMB during 2023 and 2024 and the numbers of those that agencies identified as using generative AI.[27]

Figure 3: Selected Agencies’ Reported AI and Generative AI Use Cases During 2023 and 2024, by Total Number of AI Use Cases

a. According to officials from the Department of Energy and the National Aeronautics and Space Administration, a clarification in the definition of research and development within the Federal Chief Information Officers Council’s 2024 reporting guidance resulted in a year-to-year drop in their total number of AI use cases.
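The growth figures reported for the 11 selected agencies can be reproduced from the inventory totals. The sketch below uses only the four counts stated in the text; the variable names are illustrative, and the share calculation relies on the generative AI use cases being a subset of the total AI use cases, as the inventories describe.

```python
# Totals reported for the 11 selected agencies with AI inventories.
ai_2023, ai_2024 = 571, 1110      # all AI use cases
gen_2023, gen_2024 = 32, 282      # use cases identified as generative AI

# Year-over-year growth multiples.
ai_growth = ai_2024 / ai_2023
gen_growth = gen_2024 / gen_2023

# Generative AI as a share of all reported AI use cases in each year.
gen_share_2023 = gen_2023 / ai_2023
gen_share_2024 = gen_2024 / ai_2024

print(f"All AI use cases grew {ai_growth:.2f}x")
print(f"Generative AI use cases grew {gen_growth:.2f}x")
print(f"Generative share of AI use cases: {gen_share_2023:.1%} -> {gen_share_2024:.1%}")
```

The multiples come out just under 2 and just under 9, consistent with the "nearly doubled" and "nearly nine-fold" descriptions.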

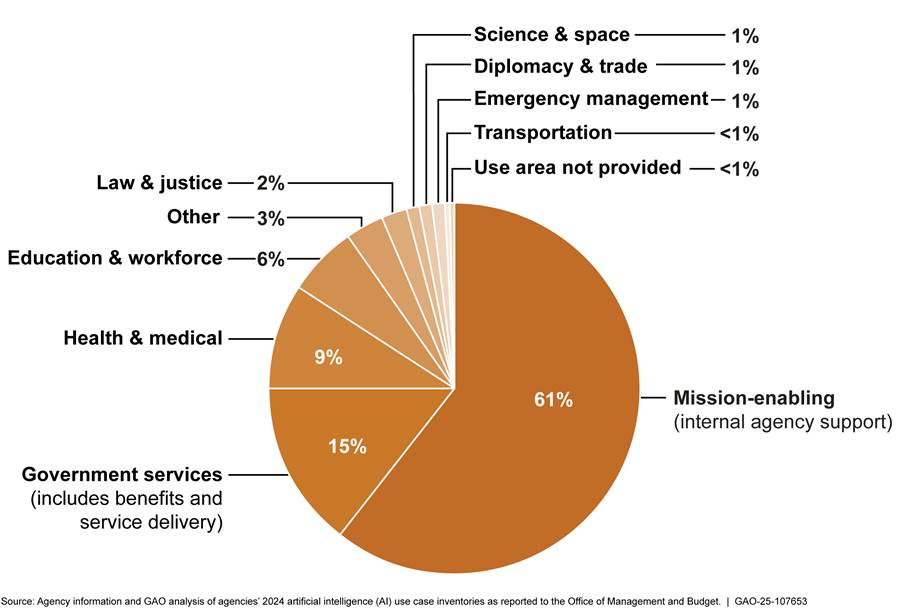

Our analysis of reported 2024 AI use case inventories revealed that selected agencies are using or are planning to use generative AI technologies that provide a range of expected benefits. A little more than half of these use cases (61 percent) are included in the topic area referred to as mission-enabling functions. This topic area covers mission-support activities such as improving the quality of written communications, enhancing efficiency of information access, summarizing reporting, and tracking program status. The next two topic areas with the highest number of generative AI use cases are government services (15 percent) and health and medicine (9 percent). These three topic areas account for 84 percent of the 282 generative AI use cases reported in 2024 by our selected agencies. We discuss examples in three topic areas in the following section. Figure 4 shows the percentage of generative AI use cases in each of the topic areas.

Figure 4: Selected Agencies’ Generative AI Use Cases Across Different Topic Areas, 2024

Note: In 2024, selected agencies did not report generative AI use cases in the “Energy & environment” topic area.

In addition, selected agencies reported in 2024 that their 282 generative AI use cases ranged across different development stages, with the greatest number of use cases in the initiated phase. Specifically, 84 use cases, or 30 percent, were in the initiated phase of development, where a need has been expressed and an intended purpose and requirements have been documented. Another 75 of these use cases, or 27 percent, were in the acquisition or development phase. The remaining 123 use cases were in an implementation and assessment phase, were in an operation and maintenance phase, or had been retired from use. Figure 5 shows the counts of generative AI use cases across different development stages.

Figure 5: Selected Agencies’ Generative AI Use Cases Across Different Development Stages, 2024
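The stage percentages can be checked against the reported counts. This is a minimal sketch that uses only the numbers stated in the text. Because the text gives a single combined count for the last three stages, they are grouped under one illustrative label here.

```python
TOTAL_GEN_AI_USE_CASES = 282

# Counts stated individually in the text.
initiated = 84
acquisition_or_development = 75

# The remaining three stages are reported only as a combined remainder.
later_stages = TOTAL_GEN_AI_USE_CASES - initiated - acquisition_or_development

stage_counts = {
    "Initiated": initiated,
    "Acquisition or development": acquisition_or_development,
    "Implementation, operation, or retired (combined)": later_stages,
}

for stage, count in stage_counts.items():
    share = count / TOTAL_GEN_AI_USE_CASES
    print(f"{stage}: {count} use cases ({share:.0%})")
```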

Selected Agencies Anticipate Benefits from Generative AI Use Cases

Selected agencies reported potential benefits within their 2024 AI use case inventories. Details on how federal agencies may benefit from the use of generative AI across the three most widely reported topic areas are described below:

· Mission-support functions. Agencies are primarily using or planning to use generative AI to support internal agency operations, such as streamlining processes and improving communication. For instance, agencies have used generative AI to enhance the quality of written communications, facilitating clearer and more effective information access. Additionally, generative-AI-driven tools have been implemented for information searching, enabling personnel to retrieve relevant data more efficiently. Furthermore, report summarization is another area where agencies reported that generative AI can contribute to operational efficiency by condensing extensive documents into concise summaries, aiding in quicker comprehension and decision-making. Specific examples of reported mission-support generative AI use cases include:

· DHS’s Customs and Border Protection’s source code development tool is intended to allow users to develop software faster and more efficiently using a generative AI coding assistant.

· DOE’s AskOEDI is intended to allow users to get answers to questions about specific Open Energy Data Initiative datasets, including inquiries about the equipment, assumptions, and methodologies used in the origination of a dataset, along with more abstract questions, such as the applicability of data to specific research fields.

· Government services. The integration of generative AI into government services can enhance citizen engagement and streamline service delivery. For example, chatbots and virtual assistants powered by generative AI can provide timely responses to public inquiries, improving accessibility and user experience. Moreover, generative AI can analyze public feedback to identify areas for service improvement, ensuring that government offerings align with citizen needs. Specific examples include:

· DHS’s U.S. Citizenship and Immigration Services is developing a system to support document translation services. The effort involves integrating AI and generative AI models to perform language translation tasks across a variety of document types, generating translated documents and language-specific text for the agency. This system is expected to improve the agency’s ability to provide accurate and timely translations.

· HHS’s National Institutes of Health is pursuing a system focused on automating the tagging of still images. The system is being designed to identify image files and generate metadata or descriptive tags attached to those files. The agency hopes to improve the categorization and retrieval of large volumes of image-based data across the National Library of Medicine’s existing metadata management systems, improving the efficiency and utility of image file management for research and administrative purposes.

· Health and medicine. In the health and medical sector, agencies have adopted generative AI to advance research and improve public health outcomes. As we have previously reported, AI technologies can assist in analyzing complex medical data, leading to more accurate diagnostics and personalized treatment plans.[28] For example, AI models can summarize patient electronic health records, medications, and chronic condition information to aid in clinical decision-making. Specific examples of reported generative AI use cases for health and medicine include:

· VA is developing a generative AI use case to automate various medical imaging processes. This use case may enhance VA’s ability to analyze medical images, integrate existing and new data workflows, and create summary diagnostic reports.

· HHS developed a generative AI use case for polio containment that extracts information from available sources to identify the poliovirus in geographical locations previously thought to be polio-free, supporting containment of the virus.

Selected Agencies Are Leading and Supporting Collaborative Efforts to Advance Generative AI

In addition to the agencies’ current and planned use of generative AI, multiple agencies reported collaborative efforts to advance generative AI technologies, including resource sharing and joint research endeavors. These efforts could benefit selected agencies by enhancing the government's capacity to develop and implement generative AI solutions effectively. For example:

· Development of a government-wide AI resource concept. According to GSA officials, the Federal CIO Council's Innovation Committee is leading an initiative involving GSA, DOE, NSF, and Commerce’s National Oceanic and Atmospheric Administration to create a government-wide approach for identifying and sharing existing AI resources. NSF officials have emphasized that the government's overall ability to implement AI solutions, such as generative AI systems, is strengthened when the community shares information on products and capabilities, treating the development of such capabilities as a federal enterprise.

· Interagency collaboration on AI applications for wildfire management. DOI is collaborating with the U.S. Department of Agriculture's Forest Service to explore generative AI use cases for wildfire management, particularly concerning wildfires that may impact government facilities. According to researchers, generative AI can be used to apply predictive modeling to a wildfire. This would enable forecasting the fire’s likely path, intensity, and growth rate, thereby allowing firefighters and evacuation teams to improve response to wildfires.[29]

· GSA's Federal AI Hackathon for enhancing digital services. GSA, in partnership with other federal entities, hosted the Federal AI Hackathon on July 31, 2024, in Washington, D.C., Atlanta, and New York City. The event focused on optimizing government websites and digital services by using generative AI to make them more efficient, user-friendly, and responsive to users' needs. Participants engaged in competitions using generative AI tools to write code, propose standards, and create features that increase the reliability of AI-provided responses.

In addition, OMB M-25-21 states that by July 2025, OMB will convene and chair an interagency council to maximize agency efficiencies by coordinating the development and use of AI in their programs and operations, such as promoting shared templates, technical resources, and exemplary uses of AI.[30]

Selected Agencies Reported Several Generative AI Challenges

The 12 selected agencies reported that they face several challenges in using and managing generative AI.

Complying with existing federal policies and guidance. Selected agencies are required to adhere to existing and recently issued federal policy and guidance when using generative AI. However, officials from 10 of the 12 selected agencies (Commerce, DHS, DOD, DOE, DOI, GSA, NASA, NSF, State, and VA) shared that existing federal AI policy may not account for or could present obstacles to the adoption of generative AI. For example, in the related technology areas of cybersecurity, data privacy, and IT acquisitions, there is a substantial amount of applicable federal law, policy, and guidance that agencies are to follow. Adhering to these guidance documents could prove difficult given the risks associated with generative AI. VA officials also noted that existing privacy policy can prohibit information sharing with other agencies, which can prevent effective collaboration on generative AI risks and advancements. In addition, officials from four selected agencies (GSA, HHS, NASA, and NSF) told us that the technology’s rapid evolution can complicate the agencies’ establishment of flexible generative AI policies and practices.

Having sufficient technical resources and budget. Generative AI can require infrastructure with significant computational and technical resources. Eight selected agencies (Commerce, DHS, DOD, NSF, GSA, NASA, OPM, and VA) reported challenges in obtaining or accessing the needed technical resources. For example, DOD and NASA officials told us that they could perform additional advanced research using generative AI but are limited by their access to advanced computing infrastructure required to perform those tasks.[31] In addition, seven selected agencies (Commerce, DOD, DOI, DHS, GSA, NSF, and VA) reported challenges related to having the funding needed to establish these resources and support desired generative AI initiatives. OPM officials cited the cost of hiring new generative AI experts, while Commerce officials cited balancing investments for generative AI with other IT modernization efforts as contributing factors.

Acquiring generative AI tools. Over half of the selected agencies are primarily procuring generative AI products and services in lieu of internal development. However, GSA, NASA, and VA officials reported experiencing delays in acquiring commercial generative AI products and services, including cloud-based services, because of the time needed to obtain Federal Risk and Authorization Management Program authorizations.[32] According to the literature we reviewed, these delays can be exacerbated when the provider is unfamiliar with federal procurement requirements.

Hiring and developing an AI workforce. Six selected agencies (Commerce, DOD, NASA, OPM, State, and VA) reported challenges in attracting and developing individuals with expertise in generative AI. These agencies can also be affected by competition with the private sector for similarly skilled professionals. Furthermore, these agencies reported difficulties in establishing and providing ongoing education and technical skill development for their current workforce. The agencies cited various constraints; for example, NASA officials cited the need for resources to establish training programs and maintain training content as the technology rapidly evolves.

Maintaining up-to-date appropriate use policies. As selected agencies look to adopt generative AI, six of these agencies (Commerce, DHS, DOE, GSA, NSF, and State) reported difficulties in maintaining current and appropriate use policies for generative AI. GSA officials told us that new generative AI technology developments may outpace the agency’s ability to maintain appropriate use policies for the technology. Further exacerbating this challenge, DOE officials told us they face difficulties in maintaining appropriate use policies that fully define the technology, its potential capabilities, and its limitations.

Addressing bias and ensuring reliability. Officials at five of the 12 selected agencies (DHS, DOD, DOE, NASA, and State) told us that generative AI can produce biased outputs or hallucinations—outputs that seem plausible but are ultimately false. DOD and DOE officials further stated that generative AI systems are subject to cybersecurity threats and that work is needed to mitigate cybersecurity vulnerabilities. As described in NIST’s Artificial Intelligence Risk Management Framework (AI RMF), generative AI tools employed by agencies should aim to reliably produce results that are consistent and resilient in response to adverse events.[33] Ensuring reliability involves establishing protections against AI system vulnerabilities—including cybersecurity threats—that would prevent the system from performing as designed.

Lacking transparency in system inputs and outputs. DOD and NASA officials told us that some users lack the expertise to understand how a generative AI system produces its results and stated that users can experience a false sense of certainty in the system’s outputs. In addition, there is often no documentation about the algorithms used to generate results. For example, DOD officials told us that it remains unclear how generative AI systems arrive at certain outputs, and this lack of transparency can reduce user confidence in system outputs. As described in NIST’s AI RMF, generative AI tools employed by agencies should prioritize transparency, not only in their training data but also in their data sources and decision-making processes.[34]

Securing classified and sensitive data. DOD officials told us that generative AI models could aggregate various unclassified information contained in the model’s training data and unintentionally output classified information. Agencies are required to ensure that personal, controlled unclassified information, and classified data used in the training and deployment of generative AI models are kept secure and compliant with federal requirements. However, officials at five selected agencies (Commerce, DOD, DOE, NASA, and VA) told us that strict data security requirements may prevent them from performing generative AI research in certain agency mission areas.

Amid Changing Requirements, Selected Agencies Are Establishing Generative AI Policies and Practices

Selected Agencies Use Frameworks and Guidance to Inform Generative AI Policies and Practices

To inform their generative AI policies and begin to address challenges, selected agencies reported using a suite of frameworks and other relevant guidance to assist in their use and management of generative AI. Frameworks are meant to be voluntary, non-sector specific approaches to industry standards. Frameworks such as NIST’s AI RMF may increase the trustworthiness of generative AI systems and foster their responsible design, development, deployment, and use. Additionally, agencies such as the Congressional Research Service and NIST have published primers and guidance to assist agencies in understanding and operationalizing generative AI technologies.

For example, selected agencies reported using the following AI frameworks and other guidance to guide their generative AI policies and practices:

· NIST AI RMF and Generative AI Profile. NIST’s AI RMF highlights that AI risk management is a key component of responsible development and use of AI systems.[35] This framework is a resource to organizations designing, developing, deploying, or using AI systems to help manage the many risks of AI and promote trustworthy and responsible development and use of AI systems. It describes how organizations can frame the risks related to AI and outlines the characteristics of trustworthy AI systems.

· GAO AI Accountability Framework. This framework serves as a resource for federal agencies that are considering and implementing AI systems, including generative AI systems.[36] The framework identifies four principles (i.e., governance, data, performance, and monitoring) and describes key practices for each principle to consider when implementing AI systems. The framework helps organizations that build, purchase, and deploy AI understand how AI systems will be evaluated. OPM and DOE reported using this resource to guide their generative AI policies and practices.

· Congressional Research Service Generative Artificial Intelligence and Data Privacy: A Primer. This primer provides a summary of generative AI technology and considerations for implementing the technology.[37] Specifically, it describes what generative AI is, identifies major generative AI developers and services, describes how generative AI models are made, and describes data and privacy considerations when using generative AI. DOE reported using this primer to guide its generative AI policies and practices.

As previously described, agencies have used additional guidance, beyond the resources listed above, to inform their use and implementation of generative AI. For example, agencies reported using OMB M-24-10, EO 14110, the Advancing American AI Act, and the AI in Government Act.

Selected Agencies Are Establishing Policies and Practices to Help Address Generative AI Challenges

As the AI policy landscape evolves, selected agencies are developing and updating their own guidance intended to govern their use and management of generative AI. These policies and practices can help address generative AI challenges previously described. For example, selected agencies have developed the following policies and practices to mitigate challenges with the use and management of generative AI:

· Appropriate use. While agencies reported challenges with maintaining appropriate use policies, 11 of the 12 selected agencies (Commerce, DOD, DOI, DOE, DHS, GSA, HHS, NASA, NSF, State, and VA) reported that they have established specific guidelines on the appropriate use of generative AI. In July 2024, DOD published a memorandum to ensure responsible and safe procurement, development, and deployment of generative AI at the agency.[38] In addition, in October 2023, DHS published a policy that outlined requirements for approved commercial generative AI use by DHS personnel and how to safely use the technology in appropriate applications.[39] Additionally, all 12 agencies reported training staff on the protection, dissemination, and disposition of federal information while using generative AI. For example, DHS provides basic user awareness training for commercial generative AI tools. In addition, DOD officials noted that effective and responsible adoption of generative AI does not always require generative AI-unique policy or guidance.

· Risk management. DOE established a generative AI reference guide that outlines generative-AI-specific risk management. Specifically, the guide highlights additional risk considerations when using generative AI, such as hallucinations, misinterpretations, deepfakes,[40] and intellectual property infringements. In addition, DOD officials told us that DOD established reporting guidance for all AI activities that include implementing risk management practices. Further, DOD, DOI, and NSF reported that their generative AI risk management policies and practices do not differ from their agency’s standard risk management for AI systems.

· Data security. Selected agencies have taken action to safeguard classified and sensitive data and protect intellectual property while using generative AI. Specifically, all 12 selected agencies that allow the use of generative AI reported that the agency limits or restricts that use in some capacity. For example, GSA and DHS limit commercial generative AI use to publicly available information only, in order to prevent sensitive data from being collected through generative AI tools. These sensitive data include personally identifiable information, controlled unclassified information, and classified information. In addition, VA prohibits employees’ use of web-based, publicly available generative AI services with sensitive VA data.

Officials at all 12 selected agencies told us they are working toward implementing the new AI requirements in OMB’s April 2025 memorandum, M-25-21. Doing so will provide opportunities to develop and publicly release AI strategies for identifying and removing barriers and addressing challenges previously cited. These strategies are to include, among other things, plans to address infrastructure and workforce needs, processes to facilitate AI investment or procurement, and plans to ensure access to quality data for AI and data traceability. In addition, the memorandum (1) encourages agencies to promote the trust of AI systems and (2) directs agencies to develop a generative AI policy that establishes safeguards and oversight mechanisms.

Agency Comments

We provided a draft of this report to Commerce, DOD, DOE, HHS, DHS, DOI, GSA, NASA, NSF, OMB, OPM, OSTP, State, and VA for their review and comment. Three agencies (HHS, OPM, and VA) provided technical comments, which we incorporated as appropriate. Ten agencies (Commerce, DHS, DOD, DOE, GSA, DOI, NASA, NSF, OSTP, and State) did not have comments on the report. OMB did not respond to our request for comments.

We are sending copies of this report to the appropriate congressional committees; the Secretaries of the Departments of Commerce, Defense, Energy, Health and Human Services, Homeland Security, the Interior, State, and Veterans Affairs; the Administrators of the General Services Administration and National Aeronautics and Space Administration; the Directors of the National Science Foundation, the Office of Management and Budget, the Office of Personnel Management, and the Office of Science and Technology Policy; and other interested parties. In addition, the report is available at no charge on the GAO website at https://www.gao.gov.

If you or your staff have any questions about this report, please contact Candice Wright at WrightC@gao.gov and Kevin Walsh at WalshK@gao.gov. Contact points for our Offices of Congressional Relations and Public Affairs may be found on the last page of this report. GAO staff who made key contributions to this report are listed in appendix II.

Candice N. Wright

Director, Science, Technology Assessment, and Analytics

Kevin Walsh

Director

Information Technology and Cybersecurity

This report, the fourth in a body of work on generative artificial intelligence (AI), describes: (1) selected agencies’ use or planned use of generative AI, potential benefits of implementing the technology, and collaborative efforts to advance the technology; (2) challenges facing selected agencies’ current and future use and management of generative AI; and (3) selected agencies’ use of frameworks and guidance to inform policies and practices and address challenges.

To address these objectives, we selected 12 federal agencies that publicly reported having generative AI use cases. Specifically, these selected agencies publicly disclosed generative AI use cases in their 2023 AI use case inventories or publicly disclosed and actively worked on additional generative AI use cases in 2024. Those 12 agencies are the Departments of Commerce, Defense (DOD), Energy, Health and Human Services, Homeland Security, the Interior, State, and Veterans Affairs (VA); the General Services Administration; the National Aeronautics and Space Administration; the National Science Foundation; and the Office of Personnel Management. We also engaged with the Office of Management and Budget (OMB) and the Office of Science and Technology Policy (OSTP) in their roles as federal policymakers for generative AI.

To address our first objective, we reviewed 11 of the 12 selected agencies’ 2023 and 2024 AI use case inventories as submitted to OMB. We did not include DOD in this part of our review because it is exempt from certain statutory and public AI use case inventory reporting requirements.[41] Using OMB’s 2023 consolidated AI use case inventory, we analyzed agencies’ AI use case information, including agencies’ total number of AI use cases and number of generative AI use cases. Similarly for 2024, we analyzed the above information as well as agencies’ use case topic areas, stages of development, and potential benefits.[42]

To identify use cases that employ generative AI, we analyzed agencies’ AI use case information and validated our analysis with selected agencies. Using OMB’s 2023 and 2024 consolidated AI use case inventories, we reviewed agencies’ data, such as use case names and summaries, to identify all use cases that possibly employ generative AI. We asked agencies to verify our analysis and incorporated corrections as necessary. However, VA did not provide its review within the required time frame. In the absence of VA’s review, we relied on our own analysis of the inventory data to identify generative AI use cases for VA.
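A first-pass screen of use case names and summaries could be sketched as follows. This is a minimal illustration only: the report does not describe the criteria applied, so the screening terms, the field names `name` and `summary`, and the sample records are all hypothetical. Any such screen would only produce candidates for the agency verification step described above.

```python
import re

# Hypothetical screening terms; the report does not list the criteria applied.
TERMS = ("generative", "large language model", "llm", "chatbot", "text generation")
PATTERN = re.compile(
    "|".join(r"\b" + re.escape(term) + r"\b" for term in TERMS),
    re.IGNORECASE,
)


def flag_candidates(use_cases):
    """Return names of use cases whose name or summary mentions a screening term."""
    return [
        uc["name"]
        for uc in use_cases
        if PATTERN.search(uc["name"] + " " + uc["summary"])
    ]


# Hypothetical records standing in for inventory rows.
use_cases = [
    {"name": "Document drafting assistant", "summary": "Uses an LLM to draft letters."},
    {"name": "Fraud scoring", "summary": "Gradient-boosted model ranks claims."},
    {"name": "Generative image tool", "summary": "Creates training graphics."},
]

candidates = flag_candidates(use_cases)
print(candidates)
```

A keyword screen of this kind can both miss and over-include use cases, which is why a validation step with the reporting agencies matters.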

To assess the reliability of agencies’ AI inventories, we reviewed a recent GAO report and documentation supporting the development of AI inventories and gathered information from selected agencies. We previously recommended that 15 federal agencies should update their AI use case inventories to include required information and take steps to ensure the data align with guidance.[43] Using federal guidance requiring and supporting the development of AI inventories, we assessed agencies’ inventory data for duplicate entries, errors, or missing data.[44] From our review, we determined that agencies’ AI inventory data had issues with quality, including duplicate entries and empty data fields. While we recognize the inventory data are not completely accurate, we found that the data are sufficiently reliable for providing a general overview of the total number of AI use cases and number of generative AI use cases reported by federal agencies in 2023 and 2024, as well as providing some details about those that use generative AI.
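Checks for duplicate entries and empty data fields of the kind described above could be sketched as follows. This is an illustration under stated assumptions, not the procedure used for this report: the column names (`agency`, `use_case_name`, `summary`, `stage`) and the sample rows are hypothetical, and the actual consolidated inventory schema may differ.

```python
import csv
import io
from collections import Counter

# Hypothetical column names; the actual inventory schema may differ.
KEY_FIELDS = ("agency", "use_case_name")
REQUIRED_FIELDS = ("agency", "use_case_name", "summary", "stage")


def check_inventory(rows):
    """Return (duplicate_keys, incomplete_rows) for a list of dict rows."""
    def key(row):
        # Normalize case and whitespace so near-identical entries collide.
        return tuple((row.get(f) or "").strip().lower() for f in KEY_FIELDS)

    counts = Counter(key(row) for row in rows)
    duplicates = sorted(k for k, n in counts.items() if n > 1)

    incomplete = []
    for index, row in enumerate(rows):
        missing = [f for f in REQUIRED_FIELDS if not (row.get(f) or "").strip()]
        if missing:
            incomplete.append((index, missing))
    return duplicates, incomplete


# Hypothetical sample data standing in for an inventory export.
SAMPLE = """agency,use_case_name,summary,stage
AgencyA,Chat Assistant,Drafts replies,Pilot
AgencyA,chat assistant,Drafts replies,Pilot
AgencyB,Doc Summarizer,,Planned
"""

rows = list(csv.DictReader(io.StringIO(SAMPLE)))
duplicates, incomplete = check_inventory(rows)
print(duplicates)
print(incomplete)
```

Here a duplicate is defined as two rows sharing the same agency and use case name after normalization; a stricter or looser definition would change the counts.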

To address our second objective, across all 12 selected agencies, we interviewed agency officials and collected and reviewed information on challenges facing agencies’ current and future use and management of generative AI. We also conducted a literature search to supplement information on the challenges agencies face with generative AI use and management. We analyzed the challenges collected from all agencies and categorized those most frequently mentioned. Additionally, we reviewed documentation and interviewed or reviewed written responses from OMB and OSTP officials about their government-wide policies and guidance on generative AI.

To address our third objective, across all 12 selected agencies, we interviewed agency officials and collected and reviewed information on agencies’ management of the current and future use of generative AI. We collected and reviewed recent federal statutes, executive orders, and federal guidance to identify agency requirements regarding the implementation of generative AI. We also reviewed frameworks used by agencies to guide their development of generative AI policies and practices. Furthermore, we analyzed evidence provided by each agency and determined how agencies’ policies and practices may help mitigate challenges described in objective 2. Additionally, we reviewed documentation and interviewed officials from OMB and OSTP about their government-wide policies and guidance on generative AI.

We conducted this performance audit from June 2024 to July 2025 in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

GAO Contacts

Candice N. Wright, WrightC@gao.gov

Kevin Walsh, WalshK@gao.gov

Staff Acknowledgments

In addition to the contacts named above, Darnita Akers (Assistant Director), Jessica Steele (Assistant Director), Wes Wilhelm (Analyst-in-Charge), Alan Daigle, Mark Kuykendall, Curtis R. Martin, Matthew Metz, Hamsini Sivalenka, Andrew Stavisky, Ashley Stewart, and Nathan Tranquilli made key contributions to this report.

Artificial Intelligence: Generative AI’s Environmental and Human Effects. GAO‑25‑107172. Washington, D.C.: April 22, 2025.

Artificial Intelligence: DHS Needs to Improve Risk Assessment Guidance for Critical Infrastructure Sectors. GAO‑25‑107435. Washington, D.C.: December 18, 2024.

Healthcare Cybersecurity: HHS Continues to Have Challenges as Lead Agency. GAO‑25‑107755. Washington, D.C.: November 13, 2024.

Artificial Intelligence: Generative AI Training, Development, and Deployment Considerations. GAO‑25‑107651. Washington, D.C.: October 22, 2024.

Artificial Intelligence: Agencies Are Implementing Management and Personnel Requirements. GAO‑24‑107332. Washington, D.C.: September 9, 2024.

Science & Tech Spotlight: Generative AI in Health Care. GAO‑24‑107634. Washington, D.C.: September 9, 2024.

Artificial Intelligence: Generative AI Technologies and Their Commercial Applications. GAO‑24‑106946. Washington, D.C.: June 20, 2024.

Science & Tech Spotlight: Combating Deepfakes. GAO‑24‑107292. Washington, D.C.: March 11, 2024.

Artificial Intelligence: Actions Needed to Improve DOD’s Workforce Management. GAO‑24‑105645. Washington, D.C.: December 14, 2023.

Artificial Intelligence: Agencies Have Begun Implementation but Need to Complete Key Requirements. GAO‑24‑105980. Washington, D.C.: December 12, 2023.

Artificial Intelligence in Natural Hazard Modeling: Severe Storms, Hurricanes, Floods, and Wildfires. GAO‑24‑106213. Washington, D.C.: December 14, 2023.

Science & Tech Spotlight: Generative AI. GAO‑23‑106782. Washington, D.C.: June 13, 2023.

Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning Technologies for Medical Diagnostics. GAO‑22‑104629. Washington, D.C.: September 29, 2022.

Artificial Intelligence: DOD Should Improve Strategies, Inventory Process, and Collaboration Guidance. GAO‑22‑105834. Washington, D.C.: March 30, 2022.

Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities. GAO‑21‑519SP. Washington, D.C.: June 30, 2021.

Science & Tech Spotlight: Deepfakes. GAO‑20‑379SP. Washington, D.C.: February 20, 2020.

The Government Accountability Office, the audit, evaluation, and investigative arm of Congress, exists to support Congress in meeting its constitutional responsibilities and to help improve the performance and accountability of the federal government for the American people. GAO examines the use of public funds; evaluates federal programs and policies; and provides analyses, recommendations, and other assistance to help Congress make informed oversight, policy, and funding decisions. GAO’s commitment to good government is reflected in its core values of accountability, integrity, and reliability.

Obtaining Copies of GAO Reports and Testimony

The fastest and easiest way to obtain copies of GAO documents at no cost is through our website. Each weekday afternoon, GAO posts on its website newly released reports, testimony, and correspondence. You can also subscribe to GAO’s email updates to receive notification of newly posted products.

Order by Phone

The price of each GAO publication reflects GAO’s actual cost of production and distribution and depends on the number of pages in the publication and whether the publication is printed in color or black and white. Pricing and ordering information is posted on GAO’s website, https://www.gao.gov/ordering.htm.

Place orders by calling (202) 512-6000, toll free (866) 801-7077, or TDD (202) 512-2537.

Orders may be paid for using American Express, Discover Card, MasterCard, Visa, check, or money order. Call for additional information.

Connect with GAO

Connect with GAO on X, LinkedIn, Instagram, and YouTube.

Subscribe to our Email Updates. Listen to our Podcasts.

Visit GAO on the web at https://www.gao.gov.

To Report Fraud, Waste, and Abuse in Federal Programs

Contact FraudNet:

Website: https://www.gao.gov/about/what-gao-does/fraudnet

Automated answering system: (800) 424-5454

Media Relations

Sarah Kaczmarek, Managing Director, Media@gao.gov

Congressional Relations

A. Nicole Clowers, Managing Director, CongRel@gao.gov


[1]Office of Management and Budget, Accelerating Federal Use of AI through Innovation, Governance, and Public Trust, M-25-21 (Apr. 3, 2025).

[2]GAO, Artificial Intelligence: Generative AI’s Environmental and Human Effects, GAO‑25‑107172 (Washington, D.C.: Apr. 22, 2025); Artificial Intelligence: Generative AI Training, Development, and Deployment Considerations, GAO‑25‑107651 (Washington, D.C.: Oct. 22, 2024); and Artificial Intelligence: Generative AI Technologies and Their Commercial Applications, GAO‑24‑106946 (Washington, D.C.: June 20, 2024).

[3]According to the Federal Chief Information Officers Council, an AI use case refers to the specific scenario in which AI is designed, developed, procured, or used to advance the execution of agencies' missions and their delivery of programs and services, enhance decision-making, or provide the public with a particular benefit.

[4]Advancing American AI Act, Title 72, Subchapter B of the James M. Inhofe National Defense Authorization Act for Fiscal Year 2023, Pub. Law 117-263, 136 Stat. 2395, 3668-3676 (2022) codified at 40 U.S.C. § 11301 note.

[5]GAO, Science & Tech Spotlight: Generative AI, GAO‑23‑106782 (Washington, D.C.: June 13, 2023).

[6]National Institute of Standards and Technology, Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile, NIST AI 600-1 (July 2024).

[7]For additional information on generative AI, see GAO‑25‑107172, GAO‑25‑107651, GAO‑24‑106946, and GAO‑23‑106782.

[8]Exec. Order 13859, Maintaining American Leadership in Artificial Intelligence (Feb. 11, 2019).

[9]Exec. Order 13960, Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government (Dec. 3, 2020).

[10]Exec. Order 14110, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (Oct. 30, 2023).

[11]Office of Management and Budget, Advancing Governance, Innovation, and Risk Management for Agency Use of Artificial Intelligence, M-24-10 (Mar. 28, 2024).

[12]Office of Management and Budget, Advancing the Responsible Acquisition of Artificial Intelligence in Government, M-24-18 (Sept. 24, 2024).

[13]Exec. Order 14148, Initial Rescissions of Harmful Executive Orders and Actions (Jan. 20, 2025).

[14]Exec. Order 14179, Removing Barriers to American Leadership in Artificial Intelligence (Jan. 23, 2025).

[15]Exec. Order 14179; OMB, M-25-21.

[16]Office of Management and Budget, Driving Efficient Acquisition of Artificial Intelligence in Government, M-25-22 (Apr. 3, 2025).

[17]General Services Administration, AI Guide for Government (June 2022).

[18]National Institute of Standards and Technology (NIST), Secure Software Development Practices for Generative AI and Dual-Use Foundation Models, SP 800-218A (July 2024), providing generative AI-specific guidance in tandem with NIST, Secure Software Development Framework, SP 800-218 (February 2022).

[19]Exec. Order 13960.

[20]Advancing American AI Act, Pub. Law 117-263, 136 Stat. 2395, 3668 (2022), codified at 40 U.S.C. § 11301 note.

[21]Federal Chief Information Officers Council, 2021 Guidance for Creating Agency Inventories of Artificial Intelligence Use Cases (Oct. 2021).

[22]Federal Chief Information Officers Council, Guidance for Creating Agency Inventories of AI Use Cases Per EO 13960 (2023).

[23]Federal Chief Information Officers Council, Guidance for 2024 Agency Artificial Intelligence Reporting per EO 14110 (Aug. 2024). This guidance was issued in response to Exec. Order 14110 and that executive order was rescinded on January 20, 2025.

[24]OMB, M-25-21.

[25]This memorandum defines “high-impact” AI as “AI with an output that serves as a principal basis for decisions or actions with legal, material, binding, or significant effect on: 1. an individual or entity's civil rights, civil liberties, or privacy; or 2. an individual or entity's access to education, housing, insurance, credit, employment, and other programs; 3. an individual or entity's access to critical government resources or services; 4. human health and safety; 5. critical infrastructure or public safety; or 6. strategic assets or resources, including high-value property and information marked as sensitive or classified by the Federal Government.” It also provides a non-exhaustive list of categories where the use of AI is expected to be high-impact, which includes potential uses such as aiding the transport, safety, design, development, or use of hazardous chemicals or biological agents.

[26]As previously described, DOD is exempt from certain statutory and public AI use case reporting requirements.

[27]The number of generative AI use cases for the Department of Veterans Affairs (VA) is based on GAO’s analysis. VA did not provide its review within the required time frame. In the absence of VA’s review, we relied on our own analysis of the inventory data to identify generative AI use cases for VA.

[28]GAO, Science & Tech Spotlight: Generative AI in Health Care, GAO‑24‑107634 (Washington, D.C.: Sept. 9, 2024); Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning Technologies for Medical Diagnostics, GAO‑22‑104629 (Washington, D.C.: Sept. 29, 2022).

[29]Bryan Shaddy et al., “Generative Algorithms for Fusion of Physics-Based Wildfire Spread Models with Satellite Data for Initializing Wildfire Forecasts,” Artificial Intelligence for the Earth Systems, vol. 3, no. 3 (2024): https://doi.org/10.1175/AIES‑D‑23‑0087.1. For more information about AI in natural hazard modeling, including the uses of AI and machine learning for wildfire modeling, see our Technology Assessment, “Artificial Intelligence in Natural Hazard Modeling”, GAO‑24‑106213.

[30]OMB, M-25-21.

[31]According to Department of Energy (DOE) officials, DOE is working with other agencies to conduct generative AI research and other work by providing access to DOE’s supercomputers.

[32]We previously reported in GAO‑24‑106591 that the Office of Management and Budget established the Federal Risk and Authorization Management Program in 2011 to facilitate the adoption and use of cloud services. The program is intended to provide a standardized approach for selecting and authorizing the use of cloud services that meet federal security requirements.

[33]National Institute of Standards and Technology, Artificial Intelligence Risk Management Framework, NIST AI 100-1 (January 2023).

[34]NIST, NIST AI 100-1.

[35]NIST, NIST AI 100-1.

[36]GAO, Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities, GAO‑21‑519SP (Washington, D.C.: June 30, 2021).

[37]Congressional Research Service, Generative Artificial Intelligence and Data Privacy: A Primer (May 23, 2023).

[38]Department of Defense Chief Digital and Artificial Intelligence Officer, Guidelines and Guardrails to Inform Governance of Generative Artificial Intelligence (July 12, 2024).

[39]Department of Homeland Security, Use of Commercial Generative Artificial Intelligence (AI) Tools, Policy Statement 139-07 (October 24, 2023).

[40]A deepfake is a video, photo, or audio recording that seems real but has been manipulated with AI. The underlying technology can replace faces, manipulate facial expressions, synthesize faces, and synthesize speech. Deepfakes can depict someone appearing to say or do something that they in fact never said or did. GAO, Science & Tech Spotlight: Deepfakes, GAO‑20‑379SP (Washington, D.C.: Feb. 20, 2020).

[41]Advancing American AI Act, Title 72, Subchapter B of the James M. Inhofe National Defense Authorization Act for Fiscal Year 2023, Pub. Law 117-263, 136 Stat. 2395, 3668-3676 (2022) codified at 40 U.S.C. § 11301 note.

[42]In August 2024, the Federal Chief Information Officers (CIO) Council issued updated AI use case inventory guidance that further clarified agencies’ reporting requirements for use case topic areas and stages of development. Federal CIO Council, Guidance for 2024 Agency Artificial Intelligence Reporting per EO 14110 (Aug. 2024).

[43]Nine of the 15 agencies are in this work’s scope: the Departments of Commerce, Energy, Health and Human Services, Homeland Security, the Interior, State, and Veterans Affairs; the General Services Administration; and the National Aeronautics and Space Administration. As of July 2025, all nine recommendations to these agencies remain open; GAO, Artificial Intelligence: Agencies Have Begun Implementation but Need to Complete Key Requirements, GAO‑24‑105980 (Washington, D.C.: Dec. 12, 2023).

[44]Federal Chief Information Officers (CIO) Council, Guidance for 2024 Agency Artificial Intelligence Reporting per EO 14110 (Aug. 2024); Federal CIO Council, Guidance for Creating Agency Inventories of AI Use Cases Per EO 13960 (2023).