ARTIFICIAL INTELLIGENCE

Federal Efforts Guided by Requirements and Advisory Groups

Report to Congressional Addressees

United States Government Accountability Office


For more information, contact: Kevin Walsh at walshk@gao.gov.

What GAO Found

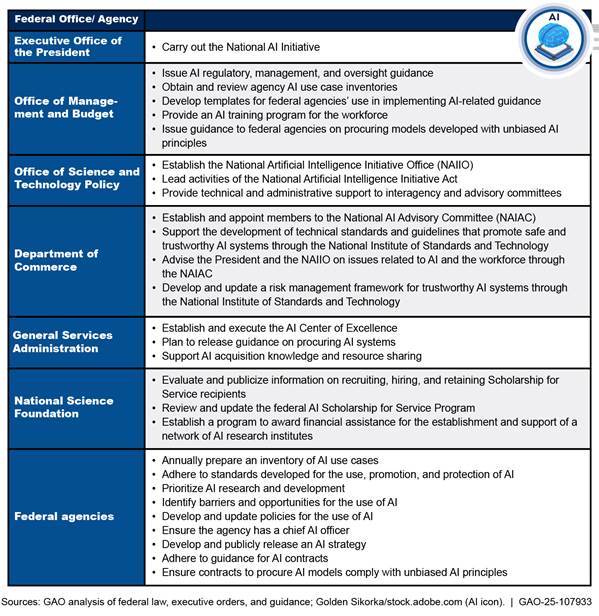

Federal laws, executive orders (EO), and related guidance include various artificial intelligence (AI)-related requirements for agencies. Federal offices and agencies are charged with adhering to AI-related requirements. GAO identified 94 AI-related requirements that were government-wide or had government-wide implications (e.g., the General Services Administration is required to create an AI Center of Excellence which affects the entire federal government). See figure for a summary of the requirements.

Ten executive branch oversight and advisory groups have a role in the implementation and oversight of AI in the federal government, including the National AI Advisory Committee and National AI Initiative Office.

Why GAO Did This Study

AI holds substantial promise for improving the operations of government agencies. This report describes (1) federal agencies’ current AI-related requirements in law, executive orders, and guidance; and (2) the roles and responsibilities of AI oversight and advisory groups.

To address the first objective, GAO identified agency requirements regarding the implementation of AI in federal laws, EOs, and guidance. GAO selected requirements that (1) were current (i.e., those with ongoing or forthcoming deliverables) and (2) were government-wide in scope or had government-wide implications.

To address the second objective, GAO reviewed laws and EOs to identify federal AI oversight and advisory groups. GAO also reviewed laws, EOs, agency documentation, and organization charters to identify and describe the roles and responsibilities of these AI oversight and advisory groups.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| EOP | Executive Office of the President |
| EO | executive order |
| GSA | General Services Administration |
| NAIAC | National Artificial Intelligence Advisory Committee |
| NSTC | National Science and Technology Council |
| OMB | Office of Management and Budget |
| OSTP | Office of Science and Technology Policy |
| R&D | research and development |

This is a work of the U.S. government and is not subject to copyright protection in the United States. The published product may be reproduced and distributed in its entirety without further permission from GAO. However, because this work may contain copyrighted images or other material, permission from the copyright holder may be necessary if you wish to reproduce this material separately.

September 9, 2025

Congressional Addressees

Artificial intelligence (AI) is rapidly changing our world and has significant potential to transform society and people’s lives.[1] According to the National Institute of Standards and Technology, AI technologies can drive economic growth and support scientific advancements that improve the conditions of our world.[2] AI also holds substantial promise for improving the operations of government agencies. However, AI technologies also pose risks that can negatively impact individuals, groups, organizations, communities, society, and the environment.

Given the rapid growth in capabilities and widespread adoption of AI, the federal government has taken action. For example, in October 2023, President Biden issued Executive Order (EO) 14110.[3] The EO included over 100 requirements for federal agencies, intended to guide the safe and responsible use of AI.

In January 2025, President Trump issued EO 14148 that rescinded EO 14110.[4] The Office of Management and Budget (OMB) subsequently released two new memoranda to guide agencies in accelerating the use and acquisition of AI.[5]

Given the importance of AI, we performed this work under the statutory authority of the Comptroller General.[6] Our objectives were to (1) describe federal agencies’ current AI-related requirements in laws, executive orders, and guidance; and (2) describe the roles and responsibilities of AI oversight and advisory groups.

To address the first objective, we reviewed recent federal laws, EOs, and guidance to identify agency requirements regarding the implementation of AI. Specifically, we reviewed five AI-related laws, six EOs, and three guidance documents that included requirements for federal agencies:

· AI in Government Act of 2020;[7]

· National Artificial Intelligence Initiative Act of 2020;[8]

· Advancing American AI Act;[9]

· AI Training Act;[10]

· Creating Helpful Incentives to Produce Semiconductors (CHIPS) Act of 2022;[11]

· Executive Order 13859, Maintaining American Leadership in Artificial Intelligence;[12]

· Executive Order 13960, Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government;[13]

· Executive Order 14179, Removing Barriers to American Leadership in Artificial Intelligence;[14]

· Executive Order 14318, Accelerating Federal Permitting of Data Center Infrastructure;[15]

· Executive Order 14319, Preventing Woke AI in the Federal Government;[16]

· Executive Order 14320, Promoting the Export of the American AI Technology Stack;[17]

· OMB’s Guidance for Regulation of Artificial Intelligence Applications, Memorandum M-21-06;[18]

· OMB’s Accelerating Federal Use of AI through Innovation, Governance, and Public Trust, Memorandum M-25-21;[19] and

· OMB’s Driving Efficient Acquisition of Artificial Intelligence in Government, Memorandum M-25-22.[20]

From these laws, EOs, and guidance, we selected requirements that were (1) current at the time of this review (i.e., those that had ongoing or forthcoming deliverables) and (2) government-wide in scope or had government-wide implications (e.g., the General Services Administration [GSA] is required to create an AI Center of Excellence, which affects the entire federal government).[21] We summarized the requirements for inclusion in the body of this report. See appendix I for a full list of the requirements identified.

To address the second objective, we reviewed laws and EOs to identify federal AI oversight and advisory groups. In addition, we reviewed laws, EOs, agency documentation, charters, and websites to identify and describe the roles and responsibilities of these AI oversight and advisory groups.

For both objectives, we interviewed relevant officials, such as the Department of Commerce’s Information Technology Laboratory Deputy Director and GSA’s Technology Transformation Services Acting Director, to obtain additional information on the current AI-related requirements and advisory and oversight groups. While officials at OMB and the Office of Science and Technology Policy (OSTP) declined to meet with us regarding this review, officials from OSTP provided written feedback on our findings for each objective.

We conducted this performance audit from November 2024 to September 2025 in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

Background

AI generally involves computing systems that “learn” how to improve their performance; it is a rapidly growing transformative technology with applications found in every aspect of modern life. AI is used in day-to-day technologies such as video games, web searching, facial recognition technology, spam filtering, and voice recognition. It has applications in business and commerce, agriculture, transportation, and medicine, among other areas.

Legislative and Executive Actions Guide AI Implementation and Responsibilities

Federal agencies’ efforts to implement AI have been guided by a variety of legislative and executive actions, as well as federal guidance. Congress has enacted legislation, and the President has issued EOs, to assist agencies in implementing AI in the federal government. For example,

· In February 2019, President Trump issued EO 13859, establishing the American AI Initiative, which promoted AI research and development (R&D) investment and coordination, among other things.[22]

· In December 2020, President Trump issued EO 13960, Promoting the Use of Trustworthy AI, which focused on operational AI and established a common set of principles for the design, development, acquisition, and use of AI in the federal government.[23]

· In December 2020, the AI in Government Act of 2020 was enacted as part of the Consolidated Appropriations Act, 2021 to ensure that the use of AI across the federal government is effective, ethical, and accountable by providing resources and guidance to federal agencies.[24]

· In August 2022, the CHIPS Act of 2022 was enacted to direct the establishment of a federal assistance program for “the fabrication, assembly, testing, advanced packaging, production, or R&D of semiconductors” and other purposes to be administered by the Department of Commerce, known as the CHIPS Incentives Program.[25]

· In October 2022, the AI Training for the Acquisition Workforce Act was enacted to ensure that the federal workforce has knowledge of the capabilities and risks associated with AI.[26]

· In December 2022, the Advancing American AI Act was enacted as part of the James M. Inhofe National Defense Authorization Act for Fiscal Year 2023 to encourage agency AI-related programs and initiatives; promote adoption of modernized business practices and advanced technologies across the federal government; and test and harness applied AI to enhance mission effectiveness, among other things.[27]

· In October 2023, President Biden issued EO 14110 which aimed to advance a coordinated, federal government-wide approach to the development and safe and responsible use of AI.[28] In January 2025, this EO was rescinded by EO 14148 (discussed below).

· In March 2024, OMB issued a memorandum that directed federal agencies to advance AI governance and innovation while managing risks from the use of AI in the federal government.[29]

· In September 2024, OMB issued a memorandum that directed federal agencies to improve their capacity for the responsible acquisition of AI.[30]

· In January 2025, President Trump issued EO 14148 which rescinded certain existing AI policies and directives, among other things.[31]

· In January 2025, President Trump issued EO 14179 which called for the development of an AI action plan and the revision of OMB memoranda M-24-10 and M-24-18.[32]

· In April 2025, as directed by EO 14179, OMB issued M-25-21 which replaced M-24-10.[33] This memorandum requires agencies to establish generative AI policies, among other AI requirements.

· In April 2025, OMB issued M-25-22 which replaced M-24-18.[34] This memorandum provides guidance to agencies to improve their ability to acquire AI responsibly.

· In July 2025, as directed by EO 14179, the White House issued America’s AI Action Plan, which includes recommended policy actions for federal agencies.[35]

· In July 2025, President Trump issued EO 14319 which ensures AI models procured by the federal government prioritize truthfulness and ideological neutrality.[36]

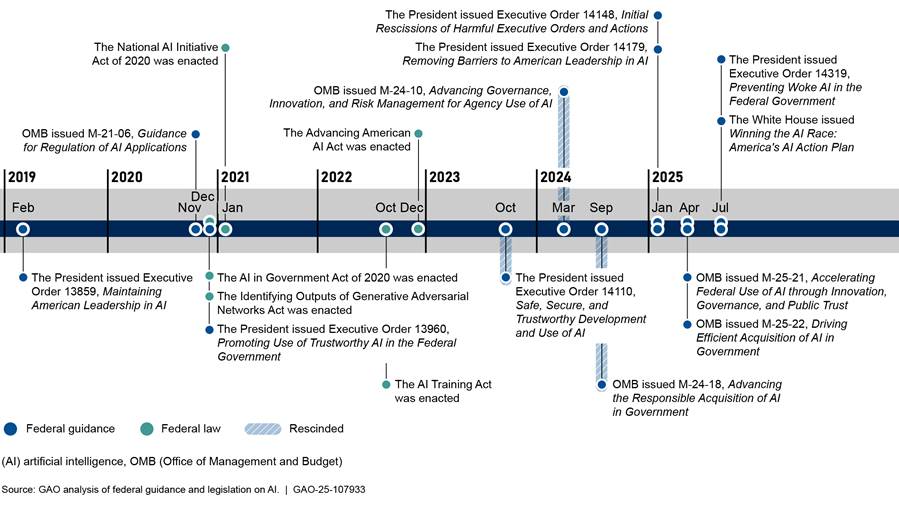

Figure 1 provides a timeline of the issuance and enactment of federal laws, EOs, and federal guidance released over the past six years that included requirements for federal agencies to advance and manage AI.

Figure 1: Timeline of Key Federal Efforts to Advance Artificial Intelligence (AI) with Agency Requirements

GAO Issued an Accountability Framework and Reported on Governance Opportunities

Regarding AI, we have issued an accountability framework, reported on agencies’ use, and made recommendations to improve oversight. For example,

· In June 2021, we published a framework to help managers ensure accountability and the responsible use of AI in government programs and processes.[37] The framework describes four principles—governance, data, performance, and monitoring—and associated key practices to consider when implementing AI systems. Each of the practices contains a set of questions and procedures for auditors and third-party assessors to consider when reviewing efforts.

· In December 2023, we reported that agency AI inventories included about 1,200 current and planned use cases.[38] In addition, we reported that although most agencies developed an inventory, there were instances of incomplete and inaccurate data. We also noted that federal agencies had taken initial steps to comply with AI requirements in executive orders and federal law; however, more work remained to fully implement those requirements. For example, OMB had not developed the required guidance for the agencies’ acquisition and use of AI technologies. In addition, we found that although many agencies utilized AI, several had not developed a plan for inventory updates and compliance with EO 13960.[39] Further, since OMB had not yet issued guidance, agencies were unable to implement a requirement to develop plans to achieve consistency with OMB’s guidance on the acquisition and use of AI.

We made 35 recommendations to 19 agencies, including OMB, to fully implement federal AI requirements. Ten agencies agreed with the recommendations, three agencies partially agreed with one or more recommendations, four agencies neither agreed nor disagreed, and two agencies did not agree with one of the recommendations. As of July 2025, three agencies (OMB, the Office of Personnel Management, and the Department of Transportation) had implemented four recommendations. For example, the Office of Personnel Management created an inventory of federal rotational programs and determined how the programs could help expand the number of employees with AI expertise. In addition, Transportation developed and submitted to OMB its plans to achieve consistency with OMB guidance. The remaining 16 agencies have not yet implemented their recommendations.

Federal Laws, EOs, and Guidance Include AI-related Requirements for Agencies

Within federal laws, EOs, and related guidance, we identified 94 AI-related government-wide requirements (or requirements with government-wide implications) for agencies, as of July 2025. Table 1 below provides a summary of the requirements. Appendix I provides additional details regarding the 94 requirements, responsible parties, and frequency of the requirements.

Table 1: Summary of Government-Wide Artificial Intelligence (AI) Requirements for Federal Agencies Identified in Federal Laws, Executive Orders, and Guidance, as of July 2025

| Federal agency | Requirement |
|---|---|
| Executive Office of the President | · Carry out the National AI Initiative to guide federal agencies’ use of AI |
| Office of Management and Budget | · Provide guidance to federal agencies on AI use case inventories, management, oversight, regulation, and related best practices<br>· Obtain and review agency-provided AI use case inventories<br>· Develop templates for federal agencies’ use in implementing AI-related guidance<br>· Provide an AI training program for the workforce<br>· Issue guidance to federal agencies on procuring models developed with unbiased AI principles by November 20, 2025 |
| Office of Science and Technology Policy | · Establish the National Artificial Intelligence Initiative Office<br>· Lead activities of the National Artificial Intelligence Initiative Act<br>· Provide technical and administrative support to interagency and advisory committees |
| Department of Commerce [a] | · Establish and appoint members to the National AI Advisory Committee<br>· Support the development of technical standards and guidelines that promote safe and trustworthy AI through the National Institute of Standards and Technology<br>· Advise the President and the National AI Initiative Office on issues related to AI and the workforce through the National AI Advisory Committee<br>· Develop and update a risk management framework for trustworthy AI systems through the National Institute of Standards and Technology |
| General Services Administration | · Establish and carry out activities of the AI Center of Excellence<br>· Develop a plan to release guidance to the acquisition workforce on procuring AI systems<br>· Support agencies in AI acquisition knowledge and resource sharing |
| National Science Foundation | · Evaluate and publicize information on recruiting, hiring, and retaining Scholarship for Service recipients in the public sector for AI<br>· Review and update the federal AI Scholarship for Service Program<br>· Establish a program to award financial assistance for the establishment and support of a network of AI research institutes |
| Federal agencies [b] | · Annually prepare an inventory of AI use cases<br>· Adhere to standards developed for the use, promotion, and protection of AI<br>· Prioritize AI research and development<br>· Identify barriers and opportunities for the use of AI<br>· Develop and update policies for the use of AI<br>· Ensure the agency has a chief AI officer<br>· Develop and publicly release an AI strategy by September 30, 2025<br>· Adhere to guidance for AI contracts by September 30, 2025, and thereafter<br>· Ensure contracts to procure AI models (i.e., large language models) comply with unbiased AI principles |

Source: GAO analysis of federal laws, executive orders, and guidance. | GAO‑25‑107933

[a] These actions are to be completed through the National Institute of Standards and Technology and the National AI Advisory Committee.

[b] The requirements detailed in laws, EOs, and guidance have differing scopes of applicable agencies. Please see Appendix I for additional details of applicability.

Executive Branch AI Oversight and Advisory Groups Have Various Roles and Responsibilities

Ten executive branch oversight and advisory groups are to have a role in the implementation and oversight of AI in the federal government. These groups were established through or pursuant to federal law, EOs, and White House and committee action. Table 2 describes how each group was established, its position within the federal government, membership, and the responsibilities it has in the implementation of AI in the federal government.

| Oversight or advisory group | Description |
|---|---|
| Office of Science and Technology Policy (OSTP) | · Established by Congress in 1976 through the National Science and Technology Policy, Organization, and Priorities Act.<br>· Located within the Executive Office of the President (EOP).<br>· Responsibilities include:<br>- advising the President on the effects of science and technology on domestic and international affairs.<br>- consulting on science and technology matters with non-federal sectors and communities, including state and local officials, foreign and international entities and organizations, professional groups, universities, and industry.<br>- coordinating federal research and development (R&D) programs and policies to ensure that R&D efforts are properly leveraged and focused on research in areas that will advance national priorities. |
| National Science and Technology Council | · Established in November 1993 by Executive Order 12881, Establishment of the National Science and Technology Council (NSTC).<br>· Members include the President, the director of OSTP, cabinet secretaries and agency heads with significant science and technology responsibilities, and heads of other White House offices.<br>· Responsibilities include:<br>- advising the President on science and technology and carrying out multiple functions under a number of chartered committees and subcommittees.<br>- coordinating the science and technology policymaking process, including the development and implementation of federal policies and programs. |
| Subcommittee on Machine Learning and Artificial Intelligence | · Established in May 2016 by the NSTC Committee on Technology.<br>· Reports to the NSTC Committee on Technology.<br>· Consists of officials from components of the EOP and NSTC.<br>· Serves as the operations and implementation arm of the Select Committee on AI.<br>· Responsibilities include:<br>- coordinating the use of, and fostering the sharing of, knowledge and best practices about AI by the federal government.<br>- advising the Committee on Technology, the NSTC, and other coordination bodies of the EOP on policies, procedures, and plans for federal efforts on AI and machine learning.<br>- coordinating the application of AI technologies in the operation of the federal government.<br>- ensuring that AI is incorporated into federal efforts to increase the diversity of the workforce.<br>- annually reporting to the Assistant to the President for Science and Technology, and other responsible officials on the status of unclassified AI applications across the federal government. |
| Select Committee on Artificial Intelligence | · Established in May 2018 by the White House.<br>· Co-chaired by the Director of OSTP and, on an annually rotating basis, a representative from the Department of Commerce, the National Science Foundation, or the Department of Energy.<br>· Responsibilities include:<br>- advising the White House on interagency AI R&D priorities and improving the coordination of federal AI efforts.<br>- facilitating the coordination and communication of AI R&D among federal departments and agencies.<br>- identifying, defining, and advising the NSTC on priority areas of AI research, and recommending options for administration R&D priorities.<br>- encouraging agency AI-related programs and initiatives for R&D, demonstration, and education and workforce training.<br>- identifying opportunities to collaborate with academia, industry, civil society, and international allies to advance AI activities. |
| Committee on Science and Technology Enterprise | · Established in July 2018 by the NSTC.<br>· Co-chaired by OSTP, Department of Energy, National Institute of Standards and Technology, and National Science Foundation and includes members from the Office of Management and Budget (OMB), and the Departments of Agriculture, Commerce, Defense, Health and Human Services, and Homeland Security.<br>· Responsibilities include:<br>- advising and assisting the NSTC to increase the overall effectiveness and productivity of federal R&D efforts in the areas of science and technology aimed at accomplishing multiple national goals.<br>- facilitating planning, coordination, and communication among federal departments and agencies involved in supporting the science and technology enterprise and related efforts.<br>- helping to identify, define, and advise the NSTC on federal priorities and plans for crosscutting science and technology issues. |
| Networking and Information Technology Research and Development AI R&D Interagency Working Group | · Established in July 2018 to coordinate federal R&D investment in AI.<br>· Located within the Networking and Information Technology Research and Development Artificial Intelligence Research and Development program under OSTP.<br>· Members include senior representatives of the 23 federal member agencies and departments that invest in IT-related R&D programs, as well as representatives of OSTP and OMB.<br>· Responsibilities include:<br>- coordinating federal AI R&D across 32 participating agencies.<br>- coordinating AI activities to advance the mission of the National Artificial Intelligence Research and Development Strategic Plan.<br>- understanding and addressing the ethical, legal, and societal implications of AI.<br>- ensuring the safety and security of AI systems.<br>- better understanding and growing the national AI R&D workforce.<br>- establishing a principled and coordinated approach to international collaboration in AI research. |
| General Services Administration (GSA) AI Center of Excellence | · Established in the fall of 2019 by the AI in Government Act of 2020.<br>· Located within the GSA Technology Transformation Services.<br>· Staffed by the GSA Administrator and may share other staff of the GSA, including those from other agency centers of excellence, and detailees from other agencies; fellows from nonprofit organizations, think tanks, institutions of higher education, and industry.<br>· Responsibilities include:<br>- regularly convening individuals from agencies, industry, federal laboratories and others to discuss recent developments in AI.<br>- advising the GSA Administrator, the Director of OMB, and agencies on the acquisition and use of AI.<br>- consulting with agencies that operate programs, create standards and guidelines, fund internal projects, or coordinate between public and private sector relating to AI.<br>- advising the Directors of OMB and OSTP on developing policy related to AI. |
| National Artificial Intelligence Initiative Office | · Established in January 2021, in accordance with the National Artificial Intelligence Initiative Act of 2020.<br>· Located within OSTP.<br>· Staffed by a director and staff detailed from the federal departments and agencies.<br>· Responsibilities include:<br>- serving as the point of contact on federal AI activities for federal departments and agencies, and such other persons as the Initiative Office considers appropriate to exchange technical and programmatic information.<br>- promoting access to the technologies, innovations, best practices, and expertise derived from Initiative activities to agency missions and systems across the federal government. |
| National Artificial Intelligence Advisory Committee | · Established in April 2022 by the National Artificial Intelligence Initiative Act of 2020.<br>· Consists of leaders with a broad and interdisciplinary range of AI-relevant expertise from across academia, non-profits, civil society, and the private sector.<br>· Responsibilities include:<br>- advising the President and the National Artificial Intelligence Initiative Office on matters related to the Initiative, to include recommendations related to: the current state of the United States competitiveness and leadership in AI; the management, coordination, and activities of the National Artificial Intelligence Initiative; issues related to AI workforce; opportunities for international cooperation with strategic allies on AI research; and accountability and legal rights, including matters relating to oversight of AI systems. |
| President’s Council of Advisors on Science and Technology | · Re-established in January 2025.<br>· Co-chaired by the Assistant to the President for Science and Technology and the Special Advisor for AI & Crypto.<br>· Members include individuals and representatives from sectors outside the federal government with diverse perspectives and expertise in science, technology, education, and innovation.<br>· Responsibilities include advising the President on matters involving science, technology, education, and innovation policy. |

Source: GAO analysis of federal AI oversight and advisory groups. | GAO‑25‑107933

As described in Table 2 above, the executive branch oversight and advisory groups are to establish guidance, oversight, and support for the implementation of AI in the federal government. Specifically,

· The EOP oversees multiple AI oversight groups. For example, the National AI Advisory Committee, National Science and Technology Council (NSTC) Select Committee on AI, and the NSTC Subcommittee on Machine Learning and AI report to the EOP.[40]

· Located within the EOP, OSTP and OMB also have roles and responsibilities regarding AI. For example, OSTP oversees additional oversight and advisory groups such as the National AI Initiative Office and NSTC. In addition, NSTC oversees the Select Committee on AI and the Committee on Science and Technology Enterprise.

· Several groups provide support and assistance to other groups. For example, the National AI Initiative Office provides technical and administrative support to the NSTC’s Select Committee on AI.

Agency Comments

We provided a draft of this report to OMB, OSTP, the Department of Commerce, GSA, and National Science Foundation for their review and comment. Three agencies (OSTP, Commerce, and National Science Foundation) provided technical comments, which we incorporated as appropriate. One agency (GSA) did not have comments on the report. OMB did not respond to our request for comments.

We are sending copies of this report to the appropriate congressional committees; the Directors of the Office of Management and Budget, Office of Science and Technology Policy, and National Science Foundation; the Administrator of the General Services Administration; the Secretary of Commerce; and other interested parties. In addition, the report is available at no charge on the GAO website at https://www.gao.gov.

If you or your staff have any questions about this report, please contact Kevin Walsh at WalshK@gao.gov. Contact points for our Offices of Congressional Relations and Public Affairs may be found on the last page of this report. GAO staff who made key contributions to this report are listed in appendix II.

Kevin Walsh

Director, Information Technology and Cybersecurity

The Honorable James Comer

Chairman

The Honorable Robert Garcia

Ranking Member

Committee on Oversight and Government Reform

House of Representatives

The Honorable Brian Babin

Chairman

The Honorable Zoe Lofgren

Ranking Member

Committee on Science, Space, and Technology

House of Representatives

The Honorable Jay Obernolte

Chairman

Subcommittee on Research and Technology

Committee on Science, Space, and Technology

House of Representatives

We identified 94 AI-related government-wide requirements (or requirements with government-wide implications) for federal agencies in federal laws, executive orders (EO), and guidance, as of July 2025. This appendix provides those requirements by responsible party. Each table lists a category that describes the requirement at a high level, the requirement and source, the responsible party(ies), and due date, as applicable.

Executive Office of the President

The Executive Office of the President (EOP) comprises offices and councils that support and advise the President by coordinating key policies and implementing oversight for federal AI requirements. We identified one current government-wide AI-related requirement for EOP in federal laws, EOs, and guidance.

Table 3 provides EOP’s AI-related requirements identified in federal laws, EOs, and guidance, as of July 2025.

Table 3: Executive Office of the President’s Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

| Requirement category | Requirement and source | Responsible | Due date |
|---|---|---|---|
| Leadership duties | Carry out activities that include the following: (1) Sustained and consistent support for AI research and development (R&D) through grants, cooperative agreements, testbeds, and access to data and computing resources. (2) Support for K-12 education and postsecondary educational programs, including workforce training and career and technical education programs, and informal education programs to prepare the American workforce and the general public to be able to create, use, and interact with AI systems. (3) Support for interdisciplinary research, education, and workforce training programs for students and researchers that promote learning in the methods and systems used in AI; and to foster interdisciplinary perspectives and collaborations among subject matter experts in relevant fields, including computer science, mathematics, statistics, engineering, social sciences, health, psychology, behavioral science, ethics, security, legal scholarship, and other disciplines that will be necessary to advance AI R&D responsibly. (4) Interagency planning and coordination of federal AI research, development, demonstration, standards engagement, and other activities under the Initiative, as appropriate. (5) Outreach to diverse stakeholders, including citizen groups, industry, and civil rights and disability rights organizations, to ensure public input is taken into account in the activities of the Initiative. (6) Leveraging existing federal investments to advance objectives of the Initiative. (7) Support for a network of interdisciplinary AI research institutes. (8) Support opportunities for international cooperation with strategic allies, as appropriate, on the R&D, assessment, and resources for trustworthy AI systems. (National Artificial Intelligence Initiative Act of 2020 § 5101: National Artificial Intelligence Initiative Office) | The President, through the Initiative Office, Interagency Committee, and agency heads as the President considers appropriate | No due date. This requirement sunsets in January 2031. |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

Office of Management and Budget

The Office of Management and Budget (OMB) is responsible for issuing guidance that informs federal agencies’ policies and plans for the development, acquisition, management, and use of AI. We identified 15 current government-wide AI-related requirements for OMB in federal laws, EOs, and guidance.

Table 4 shows OMB’s AI-related requirements identified in federal laws, EOs, and guidance, as of July 2025.

Table 4: Office of Management and Budget’s (OMB) Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

|

Requirement category |

Requirement and source |

Responsible party(ies) |

Due date |

|

Guidance |

Issue updates to the memorandum that (a) informs agencies’ policy development related to the acquisition and use of technologies enabled by AI, (b) recommends approaches to remove barriers for AI use and (c) identifies best practices for addressing discriminatory impact on the basis of any classification protected under federal nondiscrimination laws (AI in Government Act of 2020 § 4: Guidance for Agency Use of Artificial Intelligence) |

Director of OMB |

Not later than April 3, 2027, and every 2 years until April 3, 2037 |

|

AI training program |

Develop and implement or otherwise provide an AI training program for the covered workforce that includes information relating to the science underlying AI, including how AI works; introductory concepts relating to the technological features of AI systems; the ways in which AI can benefit the federal government; the risks posed by AI, including discrimination and risks to privacy; the ways to mitigate risks, including efforts to create and identify AI that is reliable, safe, and trustworthy; and future trends in AI, including trends for homeland and national security and innovation. (Artificial Intelligence Training Act § 2: Artificial Intelligence Training Programs) |

Director of OMB, in coordination with the General Services Administration (GSA) Administrator and any other person deemed relevant by the OMB Director |

Not later than October 17, 2023, and at least annually thereafter |

|

AI training program |

Update the AI training program to (a) incorporate new information relating to AI and (b) ensure that the AI training program continues to satisfy the requirements under the paragraph that describes the covered workforce including executive agency employees that are responsible for (i) program management, (ii) the planning, research, development, engineering, testing, and evaluation of systems, including quality control and assurance (iii) procurement and contracting, (iv) logistics, (v) cost estimating, and other personnel designated by the head of the executive agency to participate in the AI training program. (Artificial Intelligence Training Act § 2: Artificial Intelligence Training Programs) |

Director of OMB, in coordination with the GSA Administrator and any other person deemed relevant by the OMB Director |

At least once every 2 years |

|

AI training program |

Ensure the existence of a means by which to (a) understand and measure the participation of the covered workforce and (b) receive and consider feedback from participants in the AI training program to improve the AI training program. (Artificial Intelligence Training Act § 2: Artificial Intelligence Training Programs) |

Director of OMB, in coordination with the GSA Administrator and any other person deemed relevant by the OMB Director |

Not later than October 17, 2023, and at least annually thereafter |

|

Guidance |

Develop and provide an AI strategy template for use by agencies to identify and remove barriers to their responsible use of AI and to achieve enterprise-wide improvements in the maturity of their applications. (OMB M-25-21 § 2: Driving AI Innovation) |

OMB |

No due date |

|

Guidance |

Develop and provide a compliance plan template for agencies to achieve consistency with the memorandum on accelerating federal use of AI through innovation, governance, and public trust. (OMB M-25-21 § 3: Improving AI Governance) |

OMB |

No due date |

|

Interagency coordination |

Other than the use of AI in national security systems, convene and chair an interagency council (i.e., Chief AI Officer Council) to coordinate the development and use of AI in agencies’ programs and operations and to advance the implementation of the AI principles established by EO 13960. (OMB M-25-21 § 3: Improving AI Governance) |

OMB |

Before July 2, 2025. This requirement sunsets after 5 years. |

|

Guidance |

Issue detailed instructions for agencies to develop summaries describing each individual determination and waiver, as well as its justification. (OMB M-25-21 § 4: Fostering Public Trust in Federal Use of AI) |

OMB |

No due date |

|

Guidance/AI management and use |

Establish and continuously update a means to ensure that contracts for the acquisition of an AI system or service (i) align with the guidance issued under the AI in Government Act of 2020; (ii) address the protection of privacy and government data and other information; (iii) address the ownership and security of data and other information created, used, processed, stored, maintained, disseminated, disclosed, or disposed of by a contractor or subcontractor on behalf of the federal government; and (iv) include considerations for securing the training data, algorithms, and other components of any AI system against misuse, unauthorized alteration, degradation, or rendering inoperable. (Advancing American Artificial Intelligence Act (2022) § 7224: Principles and Policies for Use of Artificial Intelligence in Government) |

Director of OMB |

Not later than December 23, 2024, and at least every 2 years thereafter |

|

Congressional update |

Brief the appropriate congressional committees on a means to ensure that contracts for the acquisition of an AI system or service align with the guidance issued under the AI in Government Act of 2020 and address the protection of privacy and government data and other information. (Advancing American Artificial Intelligence Act (2022) § 7224: Principles and Policies for Use of Artificial Intelligence in Government) |

Director of OMB |

Not later than March 23, 2023, and quarterly thereafter until the Director first implements the means to ensure that contracts for the acquisition of an AI system or service (1) align with the guidance issued under the AI in Government Act of 2020, and (2) address the protection of privacy and government data and other information. Annually thereafter until December 23, 2027. |

|

AI management and use |

Require the head of each agency to: (1) prepare and maintain an inventory of the AI use cases of the agency, including current and planned uses; (2) share agency inventories with other agencies, to the extent practicable and consistent with applicable law and policy, including those concerning protection of privacy and of sensitive law enforcement, national security, and other protected information; and (3) make agency inventories available to the public, in a manner determined by the Director, and to the extent practicable and in accordance with applicable law and policy, including those concerning the protection of privacy and of sensitive law enforcement, national security, and other protected information. (Advancing American Artificial Intelligence Act (2022) § 7225: Agency Inventories and Artificial Intelligence Use Cases) |

Director of OMB, in consultation with the Chief Information Officers Council, the Chief Data Officers Council, and other interagency bodies as determined to be appropriate by the Director |

Not later than February 23, 2023, and continuously thereafter for 5 years |

|

AI management and use |

Establish an AI capability within each of the four use case pilots under this subsection that (A) solves data access and usability issues with automated technology and eliminates or minimizes the need for manual data cleansing and harmonization efforts; (B) continuously and automatically ingests data and updates domain models in near real-time to help identify new patterns and predict trends, to the extent possible, to help agency personnel to make better decisions and take faster actions; (C) organizes data for meaningful data visualization and analysis so the government has predictive transparency for situational awareness to improve use case outcomes; (D) is rapidly configurable to support multiple applications and automatically adapts to dynamic conditions and evolving use case requirements, to the extent possible; (E) enables knowledge transfer and collaboration across agencies; and (F) preserves intellectual property rights to the data and output for benefit of the federal government and agencies and protects sensitive personally identifiable information. (Advancing American Artificial Intelligence Act (2022) § 7226: Rapid Pilot, Deployment and Scale of Applied Artificial Intelligence Capabilities to Demonstrate Modernization Activities Related to Use Cases) |

Director of OMB, in coordination with the heads of relevant agencies and other officials as the Director determines to be appropriate |

Not later than December 23, 2025 |

|

Congressional update |

Brief the appropriate congressional committees on the activities carried out under this section (discussed above, regarding the four use case pilots) and results of those activities. (Advancing American Artificial Intelligence Act (2022) § 7226: Rapid Pilot, Deployment and Scale of Applied Artificial Intelligence Capabilities to Demonstrate Modernization Activities Related to Use Cases) |

Director of OMB |

Not earlier than September 19, 2023, but not later than December 23, 2023, and annually thereafter until December 23, 2027 |

|

Guidance |

Develop additional “playbooks” specific to various types of AI (e.g., AI-based biometrics, specialized computing infrastructure, and generative AI) designed to highlight the considerations and nuances inherent in these specialized areas. (M-25-22 § 4: AI Acquisition Lifecycle Guidance) |

OMB |

No due date |

|

Guidance |

Issue guidance to agencies that: (i) accounts for technical limitations in complying with this order; (ii) permits vendors to comply with the requirement in the second unbiased AI principle to be transparent about ideological judgments through disclosure of the large language model’s system prompt, specifications, evaluations, or other relevant documentation, and avoid requiring disclosure of specific model weights or other sensitive technical data where practicable; (iii) avoids over-prescription and affords latitude for vendors to comply with the unbiased AI principles and take different approaches to innovation; (iv) specifies factors for agency heads to consider in determining whether to apply the unbiased AI principles to large language models developed by the agencies and to AI models other than large language models; and (v) makes exceptions as appropriate for the use of large language models in national security systems. (EO 14319 § 4: Preventing Woke AI in the Federal Government) |

Director of OMB, in consultation with the Administrator for Federal Procurement Policy, the Administrator of General Services, and the Director of the Office of Science and Technology Policy |

No later than November 20, 2025 |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

Office of Science and Technology Policy

The Office of Science and Technology Policy (OSTP) leads interagency science and technology policy coordination efforts and assists OMB with an annual review and analysis of federal research and development, including AI, among other things. We identified nine current government-wide AI-related requirements for OSTP in federal laws, EOs, and guidance.

Table 5 shows OSTP’s AI-related requirements identified in federal laws, EOs, and guidance, as of July 2025.

Table 5: Office of Science and Technology Policy’s (OSTP) Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

|

Requirement category |

Requirement and source |

Responsible party(ies) |

Due date |

|

Establish leadership |

Establish or designate, and appoint a director of, an office to be known as the “National Artificial Intelligence Initiative Office” to carry out the responsibilities described in subsection (b), including (1) providing technical and administrative support; (2) serving as the point of contact on federal AI activities; (3) conducting public outreach; and (4) promoting access to technologies across the federal government (discussed below) with respect to the Initiative. The Initiative Office shall have sufficient staff to carry out such responsibilities, including staff detailed from the federal departments and agencies described in section 5103(c) (i.e., the interagency committee discussed below), as appropriate. (National Artificial Intelligence Initiative Act of 2020 § 5102: National Artificial Intelligence Initiative Office) |

Director of OSTP |

No due date |

|

Leadership duties |

(1) Provide technical and administrative support to the Interagency Committee and the Advisory Committee; (2) serve as the point of contact on federal AI activities for federal departments and agencies, industry, academia, nonprofit organizations, professional societies, state governments, and such other persons as the Initiative Office considers appropriate to exchange technical and programmatic information; (3) conduct regular public outreach to diverse stakeholders, including civil rights and disability rights organizations; and (4) promote access to the technologies, innovations, best practices, and expertise derived from Initiative activities to agency missions and systems across the federal government. (National Artificial Intelligence Initiative Act of 2020 § 5102: National Artificial Intelligence Initiative Office) |

Director of the National AI Initiative Office |

No due date |

|

Budget |

Develop and annually update an estimate of the funds necessary to carry out the activities of the Initiative Coordination Office and submit such estimate with an agreed summary of contributions from each agency to Congress as part of the President’s annual budget request to Congress. (National Artificial Intelligence Initiative Act of 2020 § 5102: National Artificial Intelligence Initiative Office) |

Director of OSTP, in coordination with each participating federal department and agency |

Annually |

|

Interagency coordination |

Establish or designate an Interagency Committee to coordinate federal programs and activities in support of the Initiative. The Interagency Committee shall be co-chaired by the Director of OSTP and, on an annual rotating basis, a representative from the Department of Commerce, the National Science Foundation, or the Department of Energy, as selected by the Director of OSTP. The Committee shall include representatives from federal agencies as considered appropriate by determination and agreement of the Director of OSTP and the head of the affected agency. The Committee shall provide for interagency coordination of federal AI research, development, and demonstration activities and education and workforce training activities and programs of federal departments and agencies undertaken pursuant to the Initiative. (National Artificial Intelligence Initiative Act of 2020 § 5103: Coordination by Interagency Committee) |

Director of OSTP, acting through the National Science and Technology Council |

No due date |

|

Interagency coordination |

Develop a strategic plan for AI that establishes goals, priorities, and metrics for guiding and evaluating how the agencies carrying out the Initiative will (A) determine and prioritize areas of AI research, development, and demonstration requiring federal government leadership and investment; (B) support long-term funding for interdisciplinary AI research, development, demonstration, and education; (C) support research and other activities on ethical, legal, environmental, safety, security, bias, and other appropriate societal issues related to AI; (D) provide or facilitate the availability of curated, standardized, secure, representative, aggregate, and privacy-protected data sets for AI research and development; (E) provide or facilitate the necessary computing, networking, and data facilities for AI research and development; (F) support and coordinate federal education and workforce training activities related to AI; and (G) support and coordinate the network of AI research institutes described in section 5201(b)(7)(B). (National Artificial Intelligence Initiative Act of 2020 § 5103: Coordination by Interagency Committee) |

Interagency Committee |

Not later than 2 years after enactment (January 1, 2023) and be updated not less than every 3 years |

|

Interagency coordination |

Propose an annually coordinated interagency budget, as part of the President’s annual budget request to Congress, for the Initiative to the Office of Management and Budget that is intended to ensure that the balance of funding across the Initiative is sufficient to meet the goals and priorities established for the Initiative. (National Artificial Intelligence Initiative Act of 2020 § 5103: Coordination by Interagency Committee) |

Interagency Committee |

Annually |

|

Interagency coordination |

Take into consideration the recommendations of the National AI Advisory Committee, existing reports on related topics, and the views of academic, state, industry, and other appropriate groups. (National Artificial Intelligence Initiative Act of 2020 § 5103: Coordination by Interagency Committee) |

Interagency Committee |

No due date |

|

Interagency coordination |

Prepare and submit a report that includes a summarized budget in support of the Initiative for such fiscal year and the preceding fiscal year, including a disaggregation of spending and a description of any National AI Research Institutes established for the Department of Commerce, the Department of Defense, the Department of Energy, the Department of Agriculture, the Department of Health and Human Services, and the National Science Foundation to the following committees: the Committee on Science, Space, and Technology, the Committee on Energy and Commerce, the Committee on Transportation and Infrastructure, the Committee on Armed Services, the House Permanent Select Committee on Intelligence, the Committee on the Judiciary, and the Committee on Appropriations of the House of Representatives and the Committee on Commerce, Science, and Transportation, the Committee on Health, Education, Labor, and Pensions, the Committee on Energy and Natural Resources, the Committee on Homeland Security and Governmental Affairs, the Committee on Armed Services, the Senate Select Committee on Intelligence, the Committee on the Judiciary, and the Committee on Appropriations of the Senate. (National Artificial Intelligence Initiative Act of 2020 § 5103: Coordination by Interagency Committee) |

Interagency Committee |

For each fiscal year beginning with fiscal year 2022. To be submitted not later than 90 days after submission of the President’s annual budget request. |

|

Advisory committee |

Provide technical expertise to the National Council for the American Worker on matters regarding AI and the American workforce, as appropriate.a (EO 13859 § 7: AI and the American Workforce) |

Select Committee |

No due date |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

a According to OSTP, the National Council for the American Worker no longer exists. However, the requirement is included in this table as the associated executive order remains in effect.

Department of Commerce and the National AI Advisory Committee

The Department of Commerce, through the National Institute of Standards and Technology, is charged with leading federal agencies in their efforts to develop AI technical standards, among other things. The National AI Initiative Act of 2020 required the Secretary of Commerce to establish the National AI Advisory Committee (NAIAC). The NAIAC advises the President and the National AI Initiative Office on various matters related to AI. We identified 10 current government-wide AI-related requirements for Commerce and NAIAC in federal laws.

Table 6 shows Commerce and NAIAC’s AI-related requirements identified in federal laws, EOs, and guidance, as of July 2025.

Table 6: Department of Commerce and the National Artificial Intelligence Advisory Committee’s (NAIAC) Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

|

Requirement category |

Requirement and source |

Responsible party(ies) |

Due date |

|

Leadership duties |

Continue to support the development of AI and data science, and carry out the activities of the National Artificial Intelligence Initiative Act of 2020, including through (1) expanding the Institute’s capabilities, including scientific staff and research infrastructure; (2) supporting measurement research and development (R&D) for advanced computer chips and hardware designed for AI systems; (3) supporting the development of technical standards and guidelines that promote safe and trustworthy AI systems, such as enhancing the accuracy, explainability, privacy, reliability, robustness, safety, security, and mitigation of harmful bias in AI systems; (4) creating a framework for managing risks associated with AI systems; and (5) developing and publishing cybersecurity tools, encryption methods, and best practices for AI and data science. (CHIPS Act of 2022 § 10232: Artificial Intelligence) |

Director of the National Institute of Standards and Technology |

No due date |

|

Advisory committee |

Establish an advisory committee to be known as the “National Artificial Intelligence Advisory Committee.” (National Artificial Intelligence Initiative Act of 2020 § 5104: National Artificial Intelligence Advisory Committee) |

Secretary of Commerce, in consultation with the Director of the Office of Science and Technology Policy, the Secretary of Defense, the Secretary of Energy, the Secretary of State, the Attorney General, and the Director of National Intelligence |

No due date |

|

Advisory committee |

Appoint members to the Advisory Committee who are representing broad and interdisciplinary expertise and perspectives, including from academic institutions, companies across diverse sectors, nonprofit and civil society entities. These entities should include civil rights and disability rights organizations, and federal laboratories, who are representing geographic diversity, and who are qualified to provide advice and information on science and technology research, development, ethics, standards, education, technology transfer, commercial application, security, and economic competitiveness related to AI. In addition, while selecting the members of the Advisory Committee, seek and consider recommendations from Congress, industry, nonprofit organizations, the scientific community (including the National Academies of Sciences, Engineering, and Medicine, scientific professional societies, and academic institutions), the defense and law enforcement communities, and other appropriate organizations. (National Artificial Intelligence Initiative Act of 2020 § 5104: National Artificial Intelligence Advisory Committee) |

Secretary of Commerce |

No due date |

|

Advisory committee |

Advise the President and the Initiative Office on matters related to the Initiative, including recommendations related to (1) the current state of United States competitiveness and leadership in AI, including the scope and scale of United States investments in AI R&D in the international context; (2) the progress made in implementing the Initiative, including a review of the degree to which the Initiative has achieved the goals according to the metrics established by the Interagency Committee; (3) the state of the science around AI, including progress toward artificial general intelligence; (4) issues related to AI and the United States workforce, including matters relating to the potential for using AI for workforce training, the possible consequences of technological displacement, and supporting workforce training opportunities for occupations that lead to economic self-sufficiency for individuals with barriers to employment and historically underrepresented populations, including minorities, Indians, low-income populations, and persons with disabilities; (5) how to leverage the resources of the Initiative to streamline and enhance operations in various areas of government operations, including health care, cybersecurity, infrastructure, and disaster recovery; (6) the need to update the Initiative; (7) the balance of activities and funding across the Initiative; (8) whether the strategic plan developed or updated by the Interagency Committee is helping to maintain United States leadership in AI; (9) the management, coordination, and activities of the Initiative; (10) whether ethical, legal, safety, security, and other appropriate societal issues are adequately addressed by the Initiative; (11) opportunities for international cooperation with strategic allies on AI research activities, standards development, and the compatibility of international regulations; (12) accountability and legal rights, including matters relating to oversight of AI systems using regulatory and nonregulatory approaches, the responsibility for any violations of existing laws by an AI system, and ways to balance advancing innovation while protecting individual rights; and (13) how AI can enhance opportunities for diverse geographic regions of the United States, including urban, tribal, and rural communities. (National Artificial Intelligence Initiative Act of 2020 § 5104: National Artificial Intelligence Advisory Committee) |

NAIAC |

No due date |

|

Advisory committee |

Establish a subcommittee on matters relating to the development of AI relating to law enforcement matters. The subcommittee shall provide advice to the President on matters relating to the development of AI relating to law enforcement on the following: (A) Bias, including whether the use of facial recognition by government authorities, including law enforcement agencies, is taking into account ethical considerations and addressing whether such use should be subject to additional oversight, controls, and limitations. (B) Security of data, including law enforcement’s access to data and the security parameters for that data. (C) Adoptability, including methods to allow the United States government and industry to take advantage of AI systems for security or law enforcement purposes while at the same time ensuring the potential abuse of such technologies is sufficiently mitigated. (D) Legal standards, including those designed to ensure the use of AI systems are consistent with the privacy rights, civil rights and civil liberties, and disability rights issues raised by the use of these technologies. (National Artificial Intelligence Initiative Act of 2020 § 5104: National Artificial Intelligence Advisory Committee) |

Chairperson of NAIAC |

No due date |

|

Congressional update |

Submit to the President, the Committee on Science, Space, and Technology, the Committee on Energy and Commerce, the House Permanent Select Committee on Intelligence, the Committee on the Judiciary, and the Committee on Armed Services of the House of Representatives, and the Committee on Commerce, Science, and Transportation, the Senate Select Committee on Intelligence, the Committee on Homeland Security and Governmental Affairs, the Committee on the Judiciary, and the Committee on Armed Services of the Senate, a report on the Advisory Committee’s findings and recommendations. (National Artificial Intelligence Initiative Act of 2020 § 5104: National Artificial Intelligence Advisory Committee) |

NAIAC |

Not later than January 1, 2022, and at least once every 3 years thereafter |

|

Mission activities |

Carry out the following activities: (1) advance collaborative frameworks, standards, guidelines, and associated methods and techniques for artificial intelligence; (2) support the development of a risk-mitigation framework for deploying artificial intelligence systems; (3) support the development of technical standards and guidelines that promote trustworthy artificial intelligence systems; and (4) support the development of technical standards and guidelines by which to test for bias in artificial intelligence training data and applications. (National Artificial Intelligence Initiative Act of 2020 § 5301: National Institute of Standards and Technology Activities) |

National Institute of Standards and Technology |

No due date |

|

Risk management framework |

Develop, and periodically update, a voluntary risk management framework for trustworthy artificial intelligence systems. The framework shall (1) identify and provide standards, guidelines, best practices, methodologies, procedures and processes for (A) developing trustworthy artificial intelligence systems; (B) assessing the trustworthiness of artificial intelligence systems; and (C) mitigating risks from artificial intelligence systems; (2) establish common definitions and characterizations for aspects of trustworthiness, including explainability, transparency, safety, privacy, security, robustness, fairness, bias, ethics, validation, verification, interpretability, and other properties related to artificial intelligence systems that are common across all sectors; (3) provide case studies of framework implementation; (4) align with international standards, as appropriate; (5) incorporate voluntary consensus standards and industry best practices; and (6) not prescribe or otherwise require the use of specific information or communications technology products or services. (National Artificial Intelligence Initiative Act of 2020 § 5301: National Institute of Standards and Technology Activities) |

Director of the National Institute of Standards and Technology, in collaboration with other public and private sector organizations, including the National Science Foundation and the Department of Energy |

Not later than 2 years after enactment (January 1, 2023) and periodically update |

|

Standards |

Participate in the development of standards and specifications for artificial intelligence to ensure (A) that standards promote artificial intelligence systems that are trustworthy; and (B) that standards relating to artificial intelligence reflect the state of technology and are fit-for-purpose and developed in transparent and consensus-based processes that are open to all stakeholders. (National Artificial Intelligence Initiative Act of 2020 § 5301: National Institute of Standards and Technology Activities) |

National Institute of Standards and Technology |

No due date |

|

Stakeholder outreach |

Carry out the following activities: (1) solicit input from university researchers, private sector experts, relevant federal agencies, federal laboratories, state, tribal, and local governments, civil society groups, and other relevant stakeholders; (2) solicit input from experts in relevant fields of social science, technology ethics, and law; and (3) provide opportunity for public comment on guidelines and best practices developed as part of the Initiative, as appropriate. (National Artificial Intelligence Initiative Act of 2020 § 5302: Stakeholder Outreach) |

Director of the National Institute of Standards and Technology |

No due date |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

General Services Administration

The General Services Administration (GSA) is responsible for helping agencies develop AI solutions and improve the public experience with the federal government. We identified three current government-wide AI-related requirements for GSA in federal laws, EOs, and guidance.

Table 7 shows GSA’s AI-related requirements identified in federal laws, EOs, and federal guidance, as of July 2025.

Table 7: General Services Administration’s (GSA) Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

| Requirement category | Requirement and source | Responsible party(ies) | Due date |
| --- | --- | --- | --- |
| Establish leadership | Create a program known as the “AI Center of Excellence,” which shall (1) facilitate the adoption of AI technologies in the federal government; (2) improve cohesion and competency in the adoption and use of AI within the federal government; and (3) complete steps 1 and 2 for the purposes of benefitting the public and enhancing the productivity and efficiency of federal government operations. (AI in Government Act of 2020 § 3: AI Center of Excellence) | GSA | No due date. This requirement sunsets in December 2025. |
| Guidance | Develop a plan to release publicly available guide(s) to assist the acquisition workforce with the procurement of AI systems to address potential acquisition authorities, approaches, and vehicles, as well as their potential benefits and drawbacks, and any other resources that agencies can immediately leverage for AI procurement. (M-25-22 § 3: Agency-Level Requirements) | GSA, in collaboration with the Office of Management and Budget (OMB) and relevant interagency councils | July 12, 2025 |
| Knowledge sharing | Develop a web-based repository, available only to executive branch agencies, to facilitate the sharing of information, knowledge, and resources about AI acquisition. Agencies should contribute tools, resources, and data-sharing best practices developed for improved AI acquisition, which may include language for standard contract clauses and negotiated costs for common AI systems and other relevant artifacts. (M-25-22 § 3: Agency-Level Requirements) | GSA, in coordination with OMB | October 20, 2025 |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

National Science Foundation

The National Science Foundation is responsible for funding foundational AI research and supporting related education and workforce development programs, among other things. We identified five current government-wide AI-related requirements for the National Science Foundation in federal laws, EOs, and guidance.

Table 8 shows the National Science Foundation’s AI-related requirements identified in federal laws, EOs, and guidance, as of July 2025.

Table 8: National Science Foundation’s (NSF) Artificial Intelligence (AI)-related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

| Requirement category | Requirement and source | Responsible agency(ies) | Due date |
| --- | --- | --- | --- |
| Public reporting | Evaluate and make public, in a manner that protects the personally identifiable information of scholarship recipients, information on the success of recruiting individuals for scholarships under this section and on hiring and retaining those individuals in the public sector AI workforce, including information on (i) placement rates; (ii) where students are placed, including job titles and descriptions; (iii) salary ranges for students not released from obligations under this section; (iv) how long after graduation students are placed; (v) how long students stay in the positions they enter upon graduation; (vi) how many students are released from obligations; and (vii) what, if any, remedial training is required. (CHIPS Act of 2022 § 10313: Graduate STEM Education) | NSF Director in coordination with Director of the Office of Personnel Management | Annually |
| Congressional update | Submit to the Committee on Homeland Security and Governmental Affairs of the Senate, the Committee on Commerce, Science, and Transportation of the Senate, the Committee on Science, Space, and Technology of the House of Representatives, and the Committee on Oversight and Reform of the House of Representatives a report, including the results of the evaluation listed above and any recent statistics regarding the size, composition, and educational requirements of the Federal AI workforce. (CHIPS Act of 2022 § 10313: Graduate STEM Education) | NSF Director in coordination with Director of the Office of Personnel Management | At least every 3 years |
| Scholarship for service | Review and update the federal AI Scholarship-for-Service Program to reflect advances in technology. (CHIPS Act of 2022 § 10313: Graduate STEM Education) | NSF Director in coordination with Director of the Office of Personnel Management | At least once every 2 years |
| Advisory committee | Identify a list of (1) not more than five United States societal, national, and geostrategic challenges that may be addressed by technology to guide activities under this subtitle; and (2) not more than ten key technology focus areas to guide activities under this subtitle. (CHIPS Act of 2022 § 10387: Challenges and Focus Areas) | NSF Director in consultation with the Assistant Director, the National Science Board, and the National Science Foundation Technology interagency working group | Annually |
| AI research institutes | Subject to the availability of funds, establish a program to award financial assistance for the planning, establishment, and support of a network of AI research institutes. (National Artificial Intelligence Initiative Act of 2020 § 5201: National AI Research Institutes) | NSF Director | No due date |

Source: GAO analysis of federal AI laws, executive orders, and guidance. | GAO‑25‑107933

Note: In some cases, these entries have been condensed or summarized for space or clarity. For the actual language of the requirement, see the source listed in each row.

Federal Agencies

Federal agencies are responsible for implementing various AI-related requirements outlined in federal laws, EOs, and guidance to ensure the responsible use of AI. We identified 51 current government-wide AI-related requirements for federal agencies in federal laws, EOs, and guidance.

Table 9 shows federal agencies’ AI-related requirements from federal laws, EOs, and federal guidance, as of July 2025.

Table 9: Federal Agencies’ Artificial Intelligence (AI)-Related Requirements from Federal Laws, Executive Orders (EO), and Guidance, as of July 2025

|

Requirement category |

Requirement and source |

Responsible party(ies) |

Due date |

|

AI management and use |

Prepare an inventory of its non-classified and non-sensitive use cases of AI, as defined in the National Defense Authorization Act for Fiscal Year 2019, including current and planned uses, consistent with the agency’s mission, with the exception of defense and national security systems. (EO 13960 §5: Agency Inventory of AI Use Cases) |

Federal agencies, as defined in 44 U.S.C. § 3502, excluding independent regulatory agencies |

Annually |

|

AI management and use |

Identify, review, and assess existing AI deployed and operating in support of agency missions for any inconsistencies with this order, as part of their respective inventories of AI use cases. (EO 13960 §5: Agency Inventory of AI Use Cases) |

Federal agencies, as defined in 44 U.S.C. § 3502, excluding independent regulatory agencies |

Annually |

|

Guidance |

Continue to use voluntary consensus standards developed with industry participation, where available, when such use would not be inconsistent with applicable law or otherwise impracticable. Such standards shall also be taken into consideration by the Office of Management and Budget (OMB) when revising or developing AI guidance. (EO 13960 §4: Implementation of Principles) |

Federal agencies, as defined in 44 U.S.C. § 3502, excluding independent regulatory agencies |

No due date |

|

AI research and development |

Pursue six strategic objectives in furtherance of both promoting and protecting American advancements in AI: (a) Promote sustained investment in AI research and development (R&D) in collaboration with industry, academia, international partners and allies, and other non-federal entities to generate technological breakthroughs in AI and related technologies and to rapidly transition those breakthroughs into capabilities that contribute to our economic and national security. (b) Enhance access to high-quality and fully traceable federal data, models, and computing resources to increase the value of such resources for AI R&D, while maintaining safety, security, privacy, and confidentiality protections consistent with applicable laws and policies. (c) Reduce barriers to the use of AI technologies to promote their innovative application while protecting American technology, economic and national security, civil liberties, privacy, and values. (d) Ensure that technical standards minimize vulnerability to attacks from malicious actors and reflect Federal priorities for innovation, public trust, and public confidence in systems that use AI technologies; and develop international standards to promote and protect those priorities. (e) Train the next generation of American AI researchers and users through apprenticeships; skills programs; and education in science, technology, engineering, and mathematics (STEM), with an emphasis on computer science, to ensure that American workers, including federal workers, can take full advantage of the opportunities of AI. (f) Develop and implement an action plan, in accordance with the National Security Presidential Memorandum of February 11, 2019 (Protecting the United States Advantage in Artificial Intelligence and Related Critical Technologies) to protect the advantage of the United States in AI and technology critical to United States economic and national security interests against strategic competitors and foreign adversaries. (EO 13859 §2: Objectives) |

Agencies that conduct foundational AI R&D, develop and deploy applications of AI technologies, provide educational grants, and regulate and provide guidance for applications of AI technologies, as determined by the co-chairs of the National Science and Technology Council (NSTC) Select Committee (implementing agencies). |

No due date |

|

AI research and development |

Consider AI as an agency R&D priority, as appropriate to their respective agencies’ missions, consistent with applicable law and in accordance with the OMB and the Office of Science and Technology Policy (OSTP) R&D priorities memoranda. (EO 13859 §4: Federal Investment in AI Research and Development) |

Heads of implementing agencies, as determined by the co-chairs of the NSTC Select Committee, that also perform or fund R&D. |

No due date |

|

Budget |

Budget an amount for AI R&D that is appropriate for prioritizing AI R&D. (EO 13859 §4: Federal Investment in AI Research and Development) |

Heads of implementing agencies, as determined by the co-chairs of the NSTC Select Committee. |

No due date |

|

AI management and use |

Communicate plans for achieving the prioritization of AI R&D to the OMB Director and the OSTP Director each fiscal year through the Networking and Information Technology Research and Development (NITRD) Program. (EO 13859 §4: Federal Investment in AI Research and Development) |

Heads of implementing agencies, as determined by the co-chairs of the NSTC Select Committee. |