VETERANS AFFAIRS

Key AI Practices Could Help Address Challenges

Statement of Carol Harris, Director, Information Technology and Cybersecurity

Before the Subcommittee on Technology Modernization, Committee on Veterans' Affairs, House of Representatives

For Release on Delivery Expected at 3:00 p.m. ET

United States Government Accountability Office


For more information, contact: Carol Harris at harriscc@gao.gov.

What GAO Found

Generative artificial intelligence (AI) systems create outputs using algorithms, which are often trained on text and images obtained from the internet. Technological advancements in the underlying systems and architecture, combined with the open availability of AI tools to the public, have led to widespread use.

The Department of Veterans Affairs (VA) increased its number of AI use cases between 2023 and 2024. VA has also identified challenges in using AI—such as difficulty complying with federal policies and guidance, having sufficient technical resources and budget, acquiring generative AI tools, hiring and developing an AI workforce, and securing sensitive data.

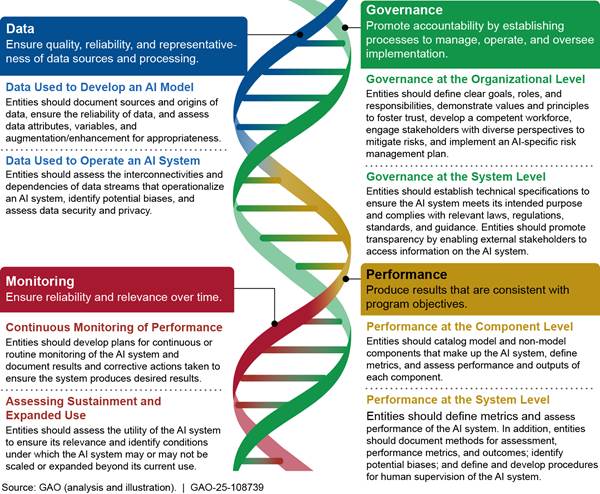

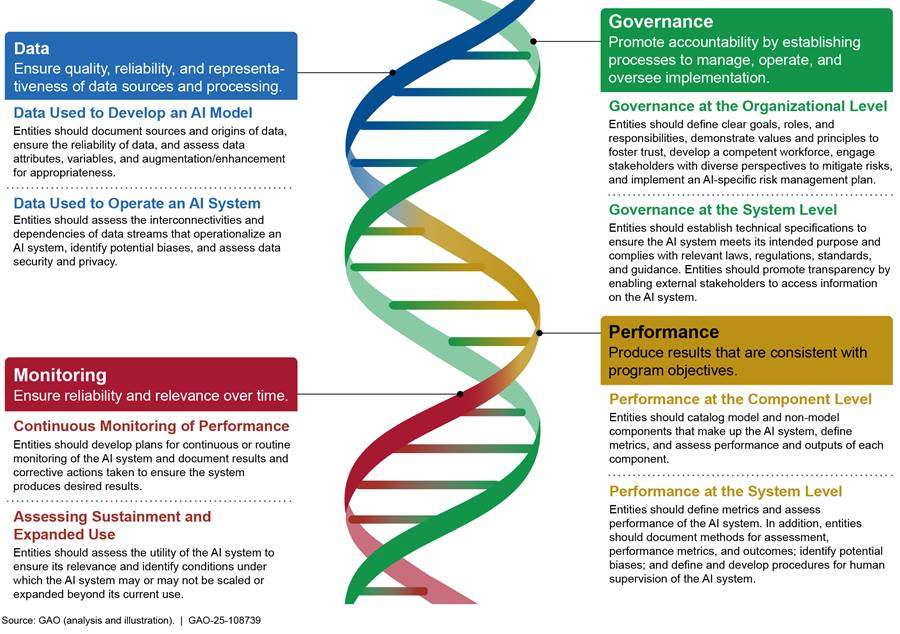

GAO has identified a framework of key practices to help ensure accountability and responsible AI use by federal agencies—including VA—in the design, development, deployment, and continuous monitoring of AI systems. VA and other agencies can use this framework as they consider, select, and implement AI systems (see figure).

Figure: GAO’s Artificial Intelligence (AI) Accountability Framework

VA’s use of the AI accountability framework along with a solid foundation of IT management and AI use cases could enable the department to better position itself to support ongoing and future work involving the technology.

Why GAO Did This Study

Developments in generative AI—which can create text, images, audio, video, and other content when prompted by a user—have revolutionized how the technology can be used in many industries, including healthcare, and at federal agencies including VA.

AI is a transformative technology for government operations, but it also poses unique challenges because the source of information used by AI systems may not always be clear or accurate. These challenges may be difficult for federal agencies including VA to overcome.

In prior reports, GAO found that VA has experienced longstanding challenges in managing its IT projects and programs. This raises questions about the efficiency and effectiveness of its operations and its ability to deliver intended outcomes needed to help advance the department’s mission.

GAO’s statement describes (1) VA’s AI use and challenges, and (2) principles and key practices for federal agencies that are considering implementing AI.

GAO summarized a prior report that described VA’s use of AI. GAO also summarized key practices for federal agencies and other entities that are considering implementing AI systems.

What GAO Recommends

The prior GAO reports described in this statement include 26 recommendations to VA concerning management of its IT resources that have not yet been implemented, and one recommendation to update its AI inventory that has not been implemented.

Chairman Barrett, Ranking Member Budzinski, and Members of the Subcommittee:

Thank you for the opportunity to discuss the use of artificial intelligence (AI) at the Department of Veterans Affairs (VA). Developments in generative AI—which can create text, images, audio, video, and other content when prompted by a user—have revolutionized how the technology can be used in many industries, including the healthcare industry, and at federal agencies including VA. AI can increase risk for agencies, however, and poses unique oversight challenges because the source of information used by AI is not always clear or accurate. Given the fast pace at which AI is evolving, the government must be proactive in understanding its complexities, risks, and societal consequences.

VA has experienced longstanding challenges in managing its IT projects and programs, raising questions about the efficiency and effectiveness of its operations and its ability to deliver intended capabilities. In 2015, we added Managing Risks and Improving VA Health Care to our High-Risk List because of system-wide challenges, including with major modernization initiatives.[1] We also added VA Acquisition Management to our High-Risk List in 2019 due to, among other things, challenges with managing its acquisition workforce and inadequate strategies and policies.[2] Both remain high-risk areas.

For this testimony statement, I will describe (1) VA’s AI use and challenges, and (2) principles and key practices for federal agencies—including VA—that are considering and implementing AI systems.

In developing this testimony, we summarized a prior GAO report that described VA’s use of AI. We also summarized key practices developed by GAO for federal agencies and other entities that are considering and implementing AI systems. Detailed information on the objectives, scope, and methodology of the summarized work can be found in each issued report.

We conducted the work on which this statement is based in accordance with generally accepted government auditing standards. Those standards require that we plan and perform the audit to obtain sufficient, appropriate evidence to provide a reasonable basis for our findings and conclusions based on our audit objectives. We believe that the evidence obtained provides a reasonable basis for our findings and conclusions based on our audit objectives.

Background

AI involves computing systems that “learn” how to improve their performance; it is a rapidly growing technology with applications found in every aspect of modern life. AI is used in day-to-day technologies such as video games, web searching, facial recognition technology, spam filtering, and voice recognition.

AI is a transformative technology with applications ranging from medical diagnostics and precision agriculture to advanced manufacturing and autonomous transportation, to national security and defense.[3] It also holds substantial promise for improving the operations of government agencies.

AI capabilities are evolving, and neither the scientific community nor industry agrees on a common definition for these technologies. Even within the government, definitions vary. For example, the National Artificial Intelligence Initiative Act of 2020 defines AI as a machine-based system that can, for a given set of human-defined objectives, make predictions, recommendations or decisions influencing real or virtual environments.[4] According to National Institute of Standards and Technology (NIST) guidance, AI is “an engineered machine-based system that can, for a given set of objectives, generate outputs such as predictions, recommendations, or decisions influencing real or virtual environments. AI systems are designed to operate with varying levels of autonomy.”[5]

Generative AI is a subset of AI and other types of machine learning that can create novel content based on prompts from a user and patterns learned from datasets. Generative AI systems create outputs using algorithms, which are often trained on text and images obtained from the internet. Technological advancements in the underlying systems and architectures since 2017, combined with the open availability of AI tools to the public starting in late 2022, have led to widespread use. The technology is continuously evolving, with rapidly emerging capabilities that could revolutionize entire industries. As we previously reported, generative AI could offer agencies benefits in summarizing information, enabling automation, and improving productivity.[6]

However, despite continued growth in capabilities, generative AI systems are not cognitive, lack human judgment, and may pose numerous risks. In July 2024, NIST published a document defining risks that are novel to or exacerbated by generative AI.[7] For example, NIST stated that generative AI can cause data privacy risks due to unauthorized use, disclosure, or de-anonymization of biometric, health, or other personally identifiable information or sensitive data.

Federal Guidance for AI and Generative AI

Federal agencies’ efforts to implement AI are guided by federal law, executive actions, and federal guidance. Since 2019, various laws have been enacted, and executive orders (EO) and guidance have been issued to assist federal agencies in implementing AI. For example:

· In February 2019, the President issued EO 13859, establishing the American AI Initiative, which promoted AI research and development investment and coordination, among other things.[8]

· In December 2020, the President issued EO 13960, promoting the use of trustworthy AI, which focused on operational AI and established a common set of principles for the design, development, acquisition, and use of AI in the federal government.[9] It also established the foundational requirements for AI use case inventories.[10]

· In December 2022, the Advancing American AI Act, among other things, codified various requirements for agencies’ AI use case inventories.[11] Later, the U.S. Chief Information Officers (CIO) Council updated related guidance on how to create and make public annual AI use case inventories.[12]

· In January 2025, the President issued EO 14148 and EO 14179, which updated U.S. policy on AI and directed the development and submission of an AI action plan by July 22, 2025.[13]

· In April 2025, as directed by EO 14179, the Office of Management and Budget (OMB) issued M-25-21.[14] This memorandum requires agencies, among other things, to develop a policy for acceptable use of generative AI. In addition, OMB issued M-25-22.[15] This memorandum provides guidance to help agencies acquire AI responsibly.

In addition to executive orders and OMB policy memorandums, agencies have published government-wide guidance for AI, and for generative AI specifically.

· General Services Administration AI Guide for Government. This guide is intended to help government decision-makers by offering clarity and guidance on defining AI and understanding its capabilities and limitations, and by explaining how agencies could apply it to their mission areas.[16] For example, the guide identifies key AI terminology and steps agencies could take to develop their AI workforce.

· NIST Secure Software Development Practices for Generative AI and Dual-Use Foundation Models. This document expands on NIST’s Secure Software Development Framework by incorporating practices for generative AI and other advanced general-purpose models.[17] It documents potential generative AI development risk factors and strategies to address them.

VA Has Historically Faced Challenges in Managing IT Resources

As we reported in December 2023, agencies must:

· prepare an AI use case inventory,

· plan for AI inventory updates,

· make the AI use case inventory publicly available, and

· designate an official responsible for AI.[18]

We further reported that VA had completed each of these four requirements. Specifically, VA had prepared and made public an AI use case inventory, developed a plan for inventory updates, and designated a responsible AI official. However, we noted that VA’s inventory of AI use cases omitted some of the required data elements that OMB requested and reported others incorrectly. We recommended that VA update its AI use case inventory to include, at a minimum, all the required information and take steps to ensure that the data in the inventory align with the provided instructions. Doing so is critical for the agency to have awareness of its AI capabilities and to make important decisions. Without an accurate inventory, the department’s implementation, oversight, and management of AI could be based on faulty data. As of September 2025, the agency has not implemented the recommendation.
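As a minimal sketch of the kind of completeness check this recommendation implies, the Python below flags inventory records that omit a data element or carry an invalid value. The field names, the allowed stage values, and the sample records are hypothetical placeholders chosen for illustration; they are not OMB’s actual required data elements or entries from VA’s inventory.

```python
# Hypothetical required data elements; the actual list comes from the
# inventory instructions and is not reproduced here.
REQUIRED_FIELDS = ("use_case_name", "office", "stage", "summary")

# Hypothetical allowed values for one element, used to illustrate
# detection of an incorrectly reported element.
ALLOWED_STAGES = {"planned", "in development", "in production", "retired"}


def check_record(record):
    """Return a list of problems found in one inventory record."""
    problems = []
    for name in REQUIRED_FIELDS:
        value = record.get(name)
        if value is None or str(value).strip() == "":
            problems.append(f"missing: {name}")
    stage = record.get("stage")
    # Only validate the stage when one was actually supplied.
    if stage and str(stage).strip().lower() not in ALLOWED_STAGES:
        problems.append(f"invalid stage: {stage}")
    return problems


def check_inventory(records):
    """Map the index of each problematic record to its list of problems."""
    report = {}
    for index, record in enumerate(records):
        problems = check_record(record)
        if problems:
            report[index] = problems
    return report


# Illustrative records only.
records = [
    {"use_case_name": "Example A", "office": "Office 1",
     "stage": "planned", "summary": "Illustrative entry"},
    {"use_case_name": "Example B", "office": "",
     "stage": "pilot", "summary": "Illustrative entry"},
]

print(check_inventory(records))
```

A check of this kind reports which records need attention and why. It does not establish whether the reported values are accurate, which would still require review against the provided instructions.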

We have also issued multiple reports discussing VA challenges in modernizing IT systems and improving IT resource management. These include challenges, for example, with modernizing its health information and financial management systems, tracking software licenses, managing its cybersecurity workforce, and cloud computing.[19] We continue to monitor 26 prior recommendations that VA has not yet fully implemented related to the challenges we previously identified in managing its IT resources.

In addition, we recently testified about VA’s fiscal year 2026 budget request, which reflects a range of planned reforms that will impact department priorities, staffing, and investments in IT.[20] VA requested about $7.3 billion to fund its IT systems in fiscal year 2026, about a 4 percent decrease from enacted budget levels in fiscal year 2025. VA’s budget request states that a reduction of 931 full-time equivalents is consistent with maturing technology delivery models and a shift toward automation and digital services. In addition, VA’s request reflects a range of planned reforms: investing over $3.5 billion to hasten implementation of its electronic health record modernization; reducing IT expenditures by about $500 million by retiring outdated legacy systems and pausing procurements to reassess IT initiatives; and streamlining administrative practices leading to about $40 million in savings. Our testimony also noted GAO’s prior work on leading practices that federal agencies—including VA—can consider when undertaking agency reform efforts, including efforts to streamline and improve the efficiency and effectiveness of IT operations.[21]

VA Has Reported Increased AI Use Cases, Challenges in Using and Managing AI, and Establishment of AI Policies and Practices

In July 2025, we reported that federal agencies—including VA—had increased their number of AI use cases between 2023 and 2024. We also reported that they had identified challenges in using and managing AI, and had established AI policies and practices.

VA Has Increased Use Cases for AI

In July 2025, we reported that selected agencies had generally increased the number of AI use cases between 2023 and 2024.[22] VA reported 40 AI use cases in 2023 and 229 AI use cases in 2024.[23] For example, VA is developing a generative AI use case to automate various medical imaging processes.

This use case may enhance VA’s ability to analyze medical images, integrate existing and new data workflows, and create summary diagnostic reports. In the health and medical sector, agencies, including VA, have adopted generative AI to advance research and improve public health outcomes. As we have previously reported, AI technologies can assist in analyzing complex medical data, leading to more accurate diagnostics and personalized treatment plans.[24] For example, AI models can summarize patient electronic health records, medications, and chronic condition information to aid in clinical decision-making.
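The scale of the increase can be worked out from the counts reported in this statement, including the generative AI counts given in the accompanying note on VA’s use cases. The short Python sketch below does only that arithmetic; the variable and function names are illustrative, and the derived ratios are rounded.

```python
# Use case counts reported in this statement: total AI use cases and the
# subset associated specifically with generative AI.
use_cases = {
    2023: {"total": 40, "generative": 1},
    2024: {"total": 229, "generative": 27},
}


def growth_factor(old, new):
    """How many times larger the new count is than the old count."""
    return new / old


def share(part, whole):
    """Fraction of the whole represented by the part."""
    return part / whole


total_growth = growth_factor(use_cases[2023]["total"], use_cases[2024]["total"])
share_2023 = share(use_cases[2023]["generative"], use_cases[2023]["total"])
share_2024 = share(use_cases[2024]["generative"], use_cases[2024]["total"])

print(f"Total use cases grew about {total_growth:.1f}x")    # about 5.7x
print(f"Generative share in 2023: {share_2023:.1%}")        # 2.5%
print(f"Generative share in 2024: {share_2024:.1%}")        # 11.8%
```

By these counts, generative AI accounted for a small but growing portion of VA’s reported use cases.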

VA Has Experienced Challenges in Using and Managing Generative AI

In July 2025, we found that federal agencies including VA have reported that they face several challenges in using and managing generative AI. For instance:

Complying with existing federal policies and guidance. Agencies—including VA—are required to adhere to federal policy and guidance when using generative AI. However, VA officials shared that existing federal AI policy may not account for or could present obstacles to the adoption of generative AI including in the areas of cybersecurity, data privacy, and IT acquisitions. VA officials also noted that existing privacy policy can prohibit information sharing with other agencies, which can prevent effective collaboration on generative AI risks and advancements.

Having sufficient technical resources and budget. Generative AI can require infrastructure with significant computational and technical resources. Agencies—including VA—reported challenges in obtaining or accessing the needed technical resources. In addition, agencies—including VA—reported challenges related to having the funding needed to establish these resources and support desired generative AI initiatives.

Acquiring generative AI tools. VA officials reported experiencing delays in acquiring commercial generative AI products and services, including cloud-based services, because of the time needed to obtain Federal Risk and Authorization Management Program authorizations.[25] According to the literature we reviewed, these delays can be exacerbated when the provider is unfamiliar with federal procurement requirements.

Hiring and developing an AI workforce. Agencies—including VA—reported challenges in attracting and developing individuals with expertise in generative AI. These agencies can also be affected by competition with the private sector for similarly skilled professionals. Furthermore, these agencies reported difficulties in establishing and providing ongoing education and technical skill development for their current workforce.

Securing sensitive data. Agencies are required to ensure that sensitive data used in the training and deployment of generative AI models are kept secure and compliant with federal requirements. However, officials at agencies—including VA—told us that strict data security requirements may prevent them from performing generative AI research in certain agency mission areas.

VA Is Establishing Policies and Practices to Help Address Generative AI Challenges

Officials at VA told us they are working toward implementing the new AI requirements in OMB’s April 2025 memorandum, M-25-21.[26] Doing so will provide opportunities to develop and publicly release AI strategies for identifying and removing barriers and addressing challenges previously cited. These strategies are to include, among other things, plans to address infrastructure and workforce needs, processes to facilitate AI investment or procurement, and plans to ensure access to quality data for AI and data traceability. In addition, the memorandum (1) encourages agencies to promote the trust of AI systems and (2) directs agencies to develop a generative AI policy that establishes safeguards and oversight mechanisms.

As the AI policy landscape evolves, agencies—including VA—are developing and updating their own guidance intended to govern their use and management of generative AI. These policies and practices can help address generative AI challenges previously described. For example, VA and other agencies have developed the following policies and practices to mitigate challenges with the use and management of generative AI:

· Appropriate use. While many of the selected agencies reported challenges with maintaining appropriate use, VA reported that it has established specific guidelines on the appropriate use of AI and provided training to staff on the protection, dissemination, and disposition of federal information while using generative AI.

· Data security. VA has taken action to safeguard sensitive data. Specifically, it prohibits employee use of web-based, publicly available generative AI services with sensitive data.

GAO Has Developed a Framework for Ensuring AI Accountability at Federal Agencies

GAO has identified a framework of key practices to help ensure accountability and responsible AI use by federal agencies in the design, development, deployment, and continuous monitoring of AI systems.[27] VA and other agencies can use this framework as they consider, select, and implement AI systems. Figure 1 presents the framework, which is organized around four complementary principles that address governance, data, performance, and monitoring.

Figure 1: GAO’s Artificial Intelligence (AI) Accountability Framework

Governance

To help entities promote accountability and responsible use of AI systems, GAO identified nine key practices for establishing governance structures and processes to manage, operate, and oversee the implementation of these systems. The governance principle is grouped in two categories:

· Governance at the organizational level, which helps entities ensure oversight and accountability and manage risks of AI systems. Managers should establish and maintain an environment throughout the entity that sets a positive attitude toward internal controls.

· Governance at the system level, which helps entities ensure AI systems meet performance requirements.

Examples of key practices within the categories include:[28]

· Clear goals: Define clear goals and objectives for the AI system to ensure intended outcomes are achieved.

· Workforce: Recruit, develop, and retain personnel with multidisciplinary skills and experiences in design, development, deployment, assessment, and monitoring of AI systems.

· Specifications: Establish and document technical specifications to ensure the AI system meets its intended purpose.

Data

Data used to train, test, and validate AI systems should be of sufficient quality and appropriate for the intended purpose to ensure the system produces consistent and accurate results. To help entities use data that are appropriate for the intended use of each AI system, GAO identified eight key practices to ensure data are of high quality, reliable, and representative. The data principle is grouped in two categories:

· Data used for model development: This category refers to training data used in developing a probabilistic component, such as a machine learning model for use in an AI system, as well as data sets used to test and validate the model.

· Data used for system operations: This category refers to the various data streams that have been integrated into the operation of an AI system, which may include multiple models.

Examples of key practices within the categories include:[29]

· Sources: Document sources and origins of data used to develop the models underpinning the AI system.

· Dependency: Assess interconnectivities and dependencies of data streams that operationalize the AI system.

· Bias: Assess reliability, quality, and representativeness of all the data used in the system’s operation, including any potential biases, inequities, and other societal concerns associated with the AI system’s data.

Performance

To help entities ensure AI systems produce results that are consistent with program objectives, GAO developed nine key practices for the performance principle, grouped in two categories:

· Component level: Performance assessment at the component level determines whether each component meets its defined objective. The components are technology assets that represent building blocks of an AI system. They include hardware and software that apply mathematical algorithms to data.[30]

· System level: Performance assessment of the system determines whether the components work well as an integrated whole.

Examples of key practices within the categories include:[31]

· Documentation: Catalog model and non-model components, along with operating specifications and parameters.

· Assessment: Assess performance against defined metrics to ensure the AI system functions as intended and is sufficiently robust.

Monitoring

To help entities ensure reliability and relevance of AI systems over time, GAO identified five key practices for the monitoring principle, grouped in two categories:

· Continuous monitoring of performance: This category involves tracking inputs of data, outputs generated from predictive models, and performance parameters to determine whether the results are as expected.

· Assessing sustainment and expanded use: This category involves examining the utility of the AI system, especially when applicable laws, programmatic objectives, and the operational environment may change over time. In some cases, entities may consider scaling the use of the AI system (across geographic locations, for example) or expanding its use in different operational settings.
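One way to picture continuous monitoring of performance is as a recurring comparison of tracked values against expected ranges. The Python sketch below is a simplified illustration under stated assumptions: the metric names, the expected ranges, and the sample values are hypothetical, and comparing a simple average with a fixed range stands in for whatever method an entity actually adopts.

```python
from statistics import mean


def within_expected(values, low, high):
    """True when the average of recent values falls inside the expected range."""
    return low <= mean(values) <= high


def monitor(window, expectations):
    """Return the names of tracked metrics whose recent average is outside
    the expected range, or for which no values were recorded."""
    flagged = []
    for name, (low, high) in expectations.items():
        values = window.get(name, [])
        if not values or not within_expected(values, low, high):
            flagged.append(name)
    return flagged


# Hypothetical recent observations for an input measure, an output measure,
# and a performance parameter.
window = {
    "input_missing_rate": [0.01, 0.02, 0.03],
    "output_positive_rate": [0.40, 0.45, 0.50],
    "accuracy": [0.91, 0.88, 0.84],
}

# Hypothetical expected ranges agreed on when the system was deployed.
expectations = {
    "input_missing_rate": (0.0, 0.05),
    "output_positive_rate": (0.2, 0.4),
    "accuracy": (0.9, 1.0),
}

print(monitor(window, expectations))
```

With these sample values, the output rate and accuracy averages fall outside their ranges and would be flagged for review, while the input measure would not.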

Examples of key practices within the categories include:[32]

· Planning: Develop plans for continuous or routine monitoring of the AI system to ensure it performs as intended.

· Ongoing assessment: Assess the utility of the AI system to ensure its relevance to the current context.
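The structure of the framework described above, four principles that each have two categories of key practices, can be summarized as a small data structure. The Python sketch below records the per-category counts given in the notes to each principle and checks that they add up to the totals stated in the text. The category labels are shortened from the text, and mapping “first” and “second” category to the order in which the categories are listed is an assumption.

```python
# Key practice counts per category, as stated in the notes to each principle.
FRAMEWORK = {
    "Governance": {"Organizational level": 6, "System level": 3},
    "Data": {"Model development": 5, "System operations": 3},
    "Performance": {"Component level": 4, "System level": 5},
    "Monitoring": {"Continuous monitoring": 3,
                   "Sustainment and expanded use": 2},
}

# Totals per principle, as stated in the body of the statement.
STATED_TOTALS = {"Governance": 9, "Data": 8, "Performance": 9, "Monitoring": 5}


def practice_totals(framework):
    """Sum the key practices across the categories of each principle."""
    return {principle: sum(categories.values())
            for principle, categories in framework.items()}


totals = practice_totals(FRAMEWORK)
overall = sum(totals.values())

print(totals == STATED_TOTALS)  # True: category counts match stated totals
print(overall)                  # 31 key practices across the four principles
```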

In summary, AI is a transformative technology for government functions and healthcare operations. However, it also poses unique IT oversight challenges for agencies, including VA, because the data used by AI are not always visible. Our prior recommendations on IT management and considering leading practices for effective reform are critical as VA continues to transform its oversight of IT across the department. If VA implements these recommendations effectively, it will be better positioned to overcome its longstanding challenges in managing its IT resources and will improve its ability to address the rapidly changing AI landscape. Federal guidance has focused on ensuring that AI is responsible, equitable, traceable, reliable, and governable. Consideration of the elements in the key practices described above can help VA guide the performance of assessments and audits of agency AI implementation.

Chairman Barrett, Ranking Member Budzinski, and Members of the Subcommittee, this concludes my prepared statement. I would be happy to answer any questions that you may have at this time.

GAO Contact and Staff Acknowledgments

If you or your staff have any questions about this testimony, please contact Carol C. Harris at harriscc@gao.gov. Contact points for our Offices of Congressional Relations and Public Affairs may be found on the last page of this statement.

GAO staff who made key contributions to this testimony include Jennifer Stavros-Turner (Assistant Director), Kevin Smith (Analyst-in-Charge), Chris Businsky, Jillian Clouse, Doug Harris Jr., Sam Giacinto, Evan Nelson Senie, Scott Pettis, Umesh Thakkar, and Andrew Yarborough.

This is a work of the U.S. government and is not subject to copyright protection in the United States. The published product may be reproduced and distributed in its entirety without further permission from GAO. However, because this work may contain copyrighted images or other material, permission from the copyright holder may be necessary if you wish to reproduce this material separately.

The Government Accountability Office, the audit, evaluation, and investigative arm of Congress, exists to support Congress in meeting its constitutional responsibilities and to help improve the performance and accountability of the federal government for the American people. GAO examines the use of public funds; evaluates federal programs and policies; and provides analyses, recommendations, and other assistance to help Congress make informed oversight, policy, and funding decisions. GAO’s commitment to good government is reflected in its core values of accountability, integrity, and reliability.

Obtaining Copies of GAO Reports and Testimony

The fastest and easiest way to obtain copies of GAO documents at no cost is through our website. Each weekday afternoon, GAO posts on its website newly released reports, testimony, and correspondence. You can also subscribe to GAO’s email updates to receive notification of newly posted products.

Order by Phone

The price of each GAO publication reflects GAO’s actual cost of production and distribution and depends on the number of pages in the publication and whether the publication is printed in color or black and white. Pricing and ordering information is posted on GAO’s website, https://www.gao.gov/ordering.htm.

Place orders by calling (202) 512-6000, toll free (866) 801-7077, or TDD (202) 512-2537.

Orders may be paid for using American Express, Discover Card, MasterCard, Visa, check, or money order. Call for additional information.

Connect with GAO

Connect with GAO on X, LinkedIn, Instagram, and YouTube.

Subscribe to our Email Updates. Listen to our Podcasts.

Visit GAO on the web at https://www.gao.gov.

To Report Fraud, Waste, and Abuse in Federal Programs

Contact FraudNet:

Website: https://www.gao.gov/about/what-gao-does/fraudnet

Automated answering system: (800) 424-5454

Media Relations

Sarah Kaczmarek, Managing Director, Media@gao.gov

Congressional Relations

A. Nicole Clowers, Managing Director, CongRel@gao.gov


[1]GAO, High-Risk Series: An Update, GAO‑15‑290 (Washington, D.C.: Feb. 11, 2015).

[2]GAO, High-Risk Series: Substantial Efforts Needed to Achieve Greater Progress on High-Risk Areas, GAO‑19‑157SP (Washington, D.C.: Mar. 6, 2019).

[3]Office of Science and Technology Policy (OSTP), Exec. Office of the President, American Artificial Intelligence Initiative: Year One Annual Report (Feb. 2020).

[4]National Artificial Intelligence Initiative Act of 2020, Division E of the William M. (Mac) Thornberry National Defense Authorization Act for Fiscal Year 2021, Pub. L. No. 116-283, Div. E, § 5002(3), 134 Stat. 3388, 4524 (2021) (codified in relevant part at 15 U.S.C. § 9401(3)).

[5]National Institute of Standards and Technology (NIST), Artificial Intelligence Risk Management Framework, NIST AI 100-1 (Gaithersburg, MD: January 2023).

[6]GAO, Science & Tech Spotlight: Generative AI, GAO‑23‑106782 (Washington, D.C.: June 13, 2023).

[7]National Institute of Standards and Technology (NIST), Artificial Intelligence Risk Management Framework: Generative Artificial Intelligence Profile, NIST AI 600-1 (Gaithersburg, MD: July 2024).

[8]Exec. Order No. 13859, 84 Fed. Reg. 3967, Maintaining American Leadership in Artificial Intelligence (Feb. 11, 2019).

[9]Exec. Order No. 13960, 85 Fed. Reg. 78939, Promoting the Use of Trustworthy Artificial Intelligence in the Federal Government (Dec. 3, 2020).

[10]According to the U.S. Chief Information Officers Council, an AI use case refers to the specific scenario in which AI is designed, developed, procured, or used to advance the execution of agencies' missions and their delivery of programs and services, enhance decision-making, or provide the public with a particular benefit.

[11]Advancing American AI Act, Pub. L. No. 117-263 (James M. Inhofe National Defense Authorization Act for Fiscal Year 2023), div. G, title LXXII, subtitle B, §§ 7221-7228, 136 Stat. 2395, 3668-3676 (2022) (40 U.S.C. § 11301 note).

[12]U.S. Chief Information Officers Council, Guidance for Creating Agency Inventories of AI Use Cases Per EO 13960 (Washington, D.C.: 2023).

[13]Exec. Order No. 14148, 90 Fed. Reg. 8237, Initial Rescissions of Harmful Executive Orders and Actions (Jan. 20, 2025) and Exec. Order No. 14179, 90 Fed. Reg. 8741, Removing Barriers to American Leadership in Artificial Intelligence (Jan. 23, 2025). These EOs rescinded EO No. 14110; Exec. Order 14110, 88 Fed. Reg. 75191, Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (Oct. 30, 2023).

[14]Exec. Order No. 14179; Office of Management and Budget (OMB), Accelerating Federal Use of AI through Innovation, Governance, and Public Trust, M-25-21 (Washington, D.C.: Apr. 3, 2025) (rescinding and replacing M-24-10).

[15]OMB, Driving Efficient Acquisition of Artificial Intelligence in Government, M-25-22 (Washington, D.C.: Apr. 3, 2025) (rescinding and replacing M-24-18).

[16]General Services Administration, AI Guide for Government (Washington, D.C.: June 2022).

[17]NIST, Secure Software Development Practices for Generative AI and Dual-Use Foundation Models, SP 800-218A (July 2024), providing generative AI-specific guidance in tandem with NIST, Secure Software Development Framework, SP 800-218 (Gaithersburg, MD: February 2022).

[18]GAO, Artificial Intelligence: Agencies Have Begun Implementation but Need to Complete Key Requirements, GAO‑24‑105980 (Washington, D.C.: Dec. 12, 2023).

[19]GAO, Electronic Health Records: VA Making Incremental Improvements in New System but Needs Updated Cost Estimate and Schedule, GAO‑25‑106874 (Washington, D.C.: Mar. 12, 2025); Electronic Health Records: VA Needs to Address Management Challenges with New System, GAO‑23‑106731 (Washington, D.C.: May 18, 2023); Veterans Affairs: Ongoing Financial Management System Modernization Program Would Benefit from Improved Cost and Schedule Estimating, GAO‑21‑227 (Washington, D.C.: Mar. 24, 2021); Federal Software Licenses: Agencies Need to Take Action to Achieve Additional Savings, GAO‑24‑105717 (Washington, D.C.: Jan. 29, 2024); Cybersecurity Workforce: Departments Need to Fully Implement Key Practices, GAO‑25‑106795 (Washington, D.C.: Jan. 16, 2025); and Cloud Computing: Agencies Need to Address Key OMB Procurement Requirements, GAO‑24‑106137 (Washington, D.C.: Sept. 10, 2024).

[20]GAO, Veterans Affairs: Leading Practices Can Help Achieve IT Reform Goals, GAO‑25‑108627 (Washington, D.C.: July 11, 2025).

[21]GAO, Government Reorganization: Key Questions to Assess Agency Reform Efforts, GAO‑18‑427 (Washington, D.C.: June 13, 2018).

[22]GAO, Artificial Intelligence: Generative AI Use and Management at Federal Agencies, GAO‑25‑107653 (Washington, D.C.: July 29, 2025).

[23]According to the U.S. Chief Information Officers Council, an AI use case refers to the specific scenario in which AI is designed, developed, procured, or used to advance the execution of agencies’ missions and their delivery of programs and services, enhance decision-making, or provide the public with a particular benefit. Of these use cases, VA reported that 1 use case in 2023 was associated specifically with generative AI, and 27 use cases in 2024 were associated specifically with generative AI.

[24]GAO, Science & Tech Spotlight: Generative AI in Health Care, GAO‑24‑107634 (Washington, D.C.: Sept. 9, 2024); Artificial Intelligence in Health Care: Benefits and Challenges of Machine Learning Technologies for Medical Diagnostics, GAO‑22‑104629 (Washington, D.C.: Sept. 29, 2022).

[25]We previously reported in GAO‑24‑106591 that the Office of Management and Budget established the Federal Risk and Authorization Management Program in 2011 to facilitate the adoption and use of cloud services. The program is intended to provide a standardized approach for selecting and authorizing the use of cloud services that meet federal security requirements.

[26]OMB, Accelerating Federal Use of AI through Innovation, Governance, and Public Trust, M-25-21 (Washington, D.C.: Apr. 3, 2025).

[27]GAO, Artificial Intelligence: An Accountability Framework for Federal Agencies and Other Entities, GAO‑21‑519SP (Washington, D.C.: June 30, 2021).

[28]The governance principle includes six key practices in the first category and three key practices in the second category.

[29]The data principle includes five key practices in the first category and three key practices in the second category.

[30]In addition to standard computer hardware such as central processing units, an AI system may include additional hardware such as graphic processing units or assets in which the AI is embedded, as in the case of advanced robots and autonomous cars. Software in an AI system is a set of programs designed to enable a computer to perform a particular task or series of tasks.

[31]The performance principle includes four key practices in the first category and five key practices in the second category.

[32]The monitoring principle includes three key practices in the first category and two key practices in the second category.